User:ScotXW/Linux as gaming platform

After reading User:ScotXW/Linux as platform, it is time to ask the question: Is the Linux kernel-based family of operating systems suited as a platform for computer gaming?

This is a user-space article intended as groundwork for writing a better "Linux as a gaming platform" article.

Abstract

- "The good news is that Ogre3D is a rendering engine and we’re only using it as such. The OpenMW code is structured using different subsystems, and this change will, for the most part, affect only the “mwrender” subsystem. Rendering code makes up roughly 8% of our codebase." (OpenMW developers, "OpenSceneGraph for OpenMW")

- Carmack: only very little of a game's code cares about the Linux/Windows/OS X API of the system it is running on. Most of the code does not interact with the API of the underlying OS at all!

- http://antongerdelan.net/opengl/shaders.html

- http://www.arcsynthesis.org/gltut/
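To illustrate the Carmack/OpenMW point, here is a minimal Python sketch of a game loop that talks to the OS only through a small platform interface. All names (`Platform`, `ScriptedPlatform`, `run_game`) are hypothetical and not taken from OpenMW or any real engine; porting would then mean writing another backend, e.g. one wrapping SDL 2.0.

```python
from typing import Protocol


class Platform(Protocol):
    """The thin OS-facing layer; everything else in the game stays portable."""

    def poll_events(self) -> list[str]: ...

    def present(self, frame: int) -> None: ...


class ScriptedPlatform:
    """Hypothetical test backend; a real port would wrap SDL 2.0, Win32, etc."""

    def __init__(self, scripted_events: list[list[str]]) -> None:
        self._events = scripted_events
        self.presented: list[int] = []

    def poll_events(self) -> list[str]:
        # Once the script runs out, behave as if the user closed the window.
        return self._events.pop(0) if self._events else ["quit"]

    def present(self, frame: int) -> None:
        self.presented.append(frame)


def run_game(platform: Platform, max_frames: int = 1000) -> int:
    """Portable game loop: it reaches the OS only through `platform`."""
    frame = 0
    while frame < max_frames:
        if "quit" in platform.poll_events():
            break
        # Game logic, physics, AI, ... would run here without any OS calls.
        platform.present(frame)
        frame += 1
    return frame


backend = ScriptedPlatform([["key_w"], [], ["quit"]])
print(run_game(backend), backend.presented)  # 2 [0, 1]
```

Only `ScriptedPlatform` would need replacing per OS; `run_game` stays untouched.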

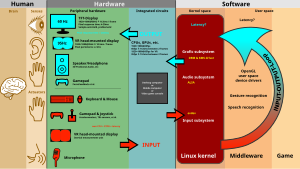

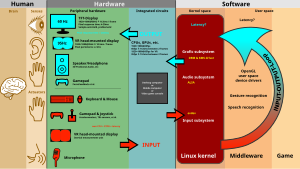

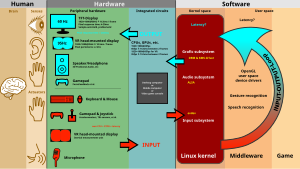

Developing video games for Linux

- Linux API

- windowing system: Wayland + XWayland

- abstraction APIs for the desktop:

- libinput for input events (to be used by Wayland compositors)

- libcanberra for outputting event sounds (available on top of libALSA (aka libasound) as well as on top of PulseAudio!)

- PulseAudio – prevailing sound server for desktop use

- abstraction APIs for video game development:

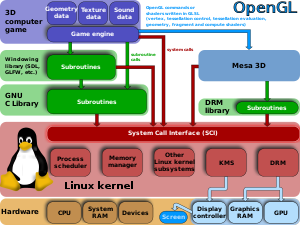

- OpenGL / OpenGL ES / etc., implemented in Mesa 3D, AMD Catalyst, or the Nvidia GeForce driver

- input and audio APIs in Simple DirectMedia Layer, Simple and Fast Multimedia Library, OpenAL, etc. (which are available cross-platform and are not exclusive to Linux!)

- abstraction APIs for professional audio production:

- JACK Audio Connection Kit – prevailing sound server for professional audio production

- LADSPA & LV2 – prevailing APIs for plugins
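For professional audio, low latency is the reason JACK exists. JACK setups are usually described by frames per period, number of periods and sample rate (e.g. `jackd -d alsa -r 48000 -p 256 -n 2`). The sketch below uses a common rule of thumb, which is an assumption here rather than a quote from JACK's documentation: buffering latency ≈ frames × periods / rate. It ignores converter and hardware latency.

```python
def buffer_latency_ms(frames_per_period: int, periods: int, sample_rate_hz: int) -> float:
    """Approximate buffering latency of a period-based audio setup (rule of thumb)."""
    if frames_per_period <= 0 or periods <= 0 or sample_rate_hz <= 0:
        raise ValueError("all parameters must be positive")
    return frames_per_period * periods / sample_rate_hz * 1000.0


# Typical settings, as passed to e.g. `jackd -d alsa -r RATE -p FRAMES -n PERIODS`.
for frames in (1024, 256, 64):
    latency = buffer_latency_ms(frames, 2, 48000)
    print(f"{frames:5d} frames x 2 periods @ 48 kHz: {latency:.2f} ms")
```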

Distributing video games for Linux

- compile against the Linux ABI

- package

- package RPM or DEB and distribute over package management system using your own software repository (monetize how?)

- package for and distribute over Steam

- Ubuntu Software Center

- other?

- Google Play has only been available for Android, not for SteamOS, Debian, etc.

Playing video games on Linux

- use the package management system (PMS) to install free and open-source video games available in the software repositories of your Linux distribution

- use Steam to purchase and install games distributed by Valve

- ...

What it offers

- To FOSS enthusiasts it offers:

- instead of writing more free software that runs only on Windows, let's turn this around and advocate for more proprietary software for Linux. More free software on more proprietary walled gardens is rather pointless. It is time for a more widespread open platform with Linux-only software and other strong unique selling propositions.

- To game developers it offers:

- THE GOOD: all advantages and benefits of free and open-source software:

- e.g. back in the day, John D. Carmack wrote Utah GLX because DirectX ≤ version 8 was crap and because of the X11 crap on Linux

- For their Android OS, Google forked the Linux kernel, augmented it with binder, pmem, ashmem, etc., added libbionic on top and Dalvik on top of that. And Google was criticized: "Working with a community does not mean making the code available! Contributing means actively presenting your code to the people who work in that area and then participating in the discussions that fall out of it: The real contribution you are welcome to make to the development of the Linux kernel is not a high number of lines of code – it's letting us know about things we haven't thought about yet. And if we do work out how to solve these problems in a mutually satisfactory way then everyone wins. We like interesting problems. Maybe you are inventing new interfaces because you are dealing with problems we have not thought about yet. There is no way we can know what those problems are just by looking at the code. So always try to document anything important that you do. If you want to help make the Linux kernel better, remember: everyone wants to help, but not everyone is as knowledgeable as you. The community atmosphere is what counts, and educating each other is an important step toward bringing this project to new heights." But because Google forked the Linux kernel instead of waiting for its additions (binder, ashmem, pmem, etc.) to be accepted into the Linux kernel mainline, Android has become enormously successful: during Q2 2013, 79.3% of smartphones sold worldwide were running Android.[1] Most probably both parties had a point: the Linux kernel mainline developers in not accepting Google's stuff, and Google in forking instead of waiting! libbionic is a different decision, with overall disadvantageous consequences, and the courts and Oracle Corporation have already shown that Dalvik was not such a good idea... Android has beaten iOS, but will it compete with the other Linux kernel-based OSes: Tizen, Sailfish OS, etc.?

- Could new code or some new APIs make the Linux kernel better suited as the kernel of a gaming platform? Are DRI, ALSA, evdev, and Co. good enough for PC and mobile gaming? Can you make them better? Both approaches are possible: trying to get into mainline, possibly fighting against a preference for code that benefits virtualization or cloud computing, or going the Android way and forking! Of the 6,000 full-time professional developers who work on the Linux kernel, maybe 3 work full-time on ALSA! Please read Andrew Morton on Linux kernel#Development to understand why this is so!

- If you have an idea and some proof-of-concept code for the kernel, for the GNU C Library/uClibc, for SDL/SFML or for OpenGL/OpenGL ES/Mantle (once Mantle is an open standard and ported to Linux), present it to the respective developer community.

- THE OPPORTUNITY: have a look at the latest generation of video game consoles, e.g. the PlayStation 4 with GNM, GNMX and the PlayStation Shader Language (PSSL), since it has been designed exclusively for video game development. The PC, in contrast, comprises a plethora of hardware configurations, and both your video game and the operating system have to support a certain range of these... If you want the PC, you have already accepted this. Now answer:

- How should the OS be designed to suit game developers? Do SDL/SFML, OpenGL/Mantle, VOGL & Valgrind, GNU Compiler Collection & GNU Debugger, LLVM/Clang suffice or not? All are cross-platform, so you are not committing to migrate forever; just free yourself from being Windows-only and see where it goes from there.

- How should the OS be designed to be accepted by PC gamers? Multi-monitor support? Oculus Rift support? DualShock 4 support?[2] What about the desktop environments? Do "chubby-fingers" GNOME, KDE Plasma 5, Unity, Cinnamon, Xfce or LXDE suffice?

- THE OPPORTUNITY: while some game developers prefer DirectX to programming low-level stuff, many serious game developers are fed up with DirectX! Maybe DirectX 12 will make them happy again, but until DirectX 12 is released, Linux as a gaming platform has a fair chance. If DRI, ALSA, evdev and Co. are good enough, it would be a pity if the design problems of Unity, chubby-fingers™ GNOME Shell and Co. ruined such a rare historical opportunity.

- THE BAD: the low installed base: most people are reluctant to install Linux when Windows has always come pre-installed and they have already paid for their Windows license

- THE BAD: the low market share: little hardware comes without Windows, even less with Linux pre-installed, and then mostly Ubuntu with Unity


- To game distributors it offers:

- a guaranteed open platform that will never be a walled garden; OS X, iOS, Windows 8 and later, Amazon Kindle and all game consoles are walled gardens. Android less so...

- What about those who invest money into it because they earn money with it? Red Hat doesn't do gaming, but Valve Corporation and Humble Bundle are present on Linux, earning money. Maybe EA will port Origin to Linux. Would they rather compete on PlayStation 4, OS X and Windows 8, or on Linux? Besides competing, there is surely some common ground: Microsoft is going walled garden, and DirectX has finally lost its appeal to serious game developers.

- Would you rather compete on one of the walled gardens, or rather on an open platform?

- e.g. in the 1980s and 1990s MS-DOS was a very attractive platform: as a game developer, you could develop your game (game development was orders of magnitude cheaper than today), offer a demo for download, your demo would spread, and people would buy your software on storage media. Done. You needed nobody's permission or consent, though video game publishers were also available.

- a platform that is free of cost; it can even be re-distributed (with source-code)

- To PC gamers it offers:

- an entire OS that is free of cost. That is 100 bucks more for peripheral hardware or games. It may give you better performance. It may be more secure (without X11). It may offer more features. Or not. At least you saved 100 bucks. If you do not need a pre-installed Windows & Office & bla, why pay for it in the first place? How much does an Oculus Rift cost?

- if performance is not an issue, playing Windows games on Linux through Wine and with Direct3D 9.3 support in Mesa 3D is possible.

People have been playing free as well as commercial games on Linux since at least 1994, e.g. Doom. Yet very few games have been ported to Linux. Why? Because DirectX is only available for Windows, and because Linux distributions have not cared about a long-term stable ABI. So for a very long time, Linux as a gaming platform has been neglected. An indication of this is the rather long List of game engine recreations: these talented people either can't afford to purchase a Windows license, or they insist on playing those games on Linux. So Ryan C. Gordon is not the only one with experience in game programming on Linux, though he may have particularly extensive experience in porting games from Windows to Linux. ;-) "Do not use nasm; you can use readelf to show all libraries you link to."
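The readelf hint can be automated. The following sketch (helper names are hypothetical) extracts the `(NEEDED)` entries from the text printed by GNU `readelf -d`. The sample output is illustrative, not taken from a real game binary. Note that `readelf -d` lists only the direct dependencies of a binary, unlike `ldd`, which resolves them transitively.

```python
import re
import subprocess

# Matches lines such as:
#  0x0000000000000001 (NEEDED)             Shared library: [libc.so.6]
NEEDED_RE = re.compile(r"\(NEEDED\)\s+Shared library: \[([^\]]+)\]")


def needed_libraries(readelf_output: str) -> list[str]:
    """Return the DT_NEEDED entries found in `readelf -d` output, in order."""
    return NEEDED_RE.findall(readelf_output)


def needed_libraries_of(path: str) -> list[str]:
    """Run GNU readelf (binutils must be installed) on an ELF file."""
    result = subprocess.run(
        ["readelf", "-d", path], capture_output=True, text=True, check=True
    )
    return needed_libraries(result.stdout)


# Illustrative output for a hypothetical SDL 2.0 game binary.
SAMPLE = """
Dynamic section at offset 0x2dd8 contains 27 entries:
  Tag        Type                         Name/Value
 0x0000000000000001 (NEEDED)             Shared library: [libSDL2-2.0.so.0]
 0x0000000000000001 (NEEDED)             Shared library: [libGL.so.1]
 0x0000000000000001 (NEEDED)             Shared library: [libc.so.6]
 0x000000000000000c (INIT)               0x1000
"""
print(needed_libraries(SAMPLE))
```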

Game developing for PC operating systems

Rendering calculations are offloaded via OpenGL to the GPU, to be done in real time. DRM/GEM/TTM arbitrate access and do the book-keeping. All this seems superfluous when only a single fullscreen 3D game is running, but arbitrating hardware access is a fundamental kernel task.

- On all PC operating systems, the programmability of the GPU is quite difficult:

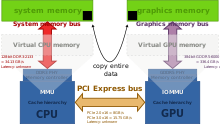

- PC operating systems are still stuck in a traditional model, of the CPU "spoon-feeding" a discrete GPU with commands

- graphics memory is hidden away from game developer → makes collaboration between CPU and GPU very very difficult

- game engines could be programmed to take advantage of all the hardware features, but they are not because the have to work through the software layers and APIs on top of the hardware

- the existing software interfaces on the PC: APIs (DirectX, OpenGL, etc.) and abstractions (DRI) evolve too slowly

- Wine adds another layer...

- Game Development with SDL 2.0 – video from 11 February 2014 by Ryan C. Gordon for developers migrating from Microsoft Windows to Linux/cross-platform development
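To make the cost of "spoon-feeding" the GPU tangible: if every draw call costs the CPU a fixed API/driver overhead, the frame budget caps how many calls can be issued. The per-call overheads (10 µs and 2 µs) and the 50 % CPU share below are assumptions for illustration, not measured figures for any driver.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def max_draw_calls(fps: float, cpu_share: float, overhead_us_per_call: float) -> int:
    """Draw calls that fit into the share of a frame the CPU may spend on submission,
    assuming a fixed (hypothetical) API/driver overhead per call."""
    budget_us = frame_budget_ms(fps) * 1000.0 * cpu_share
    return int(budget_us // overhead_us_per_call)


for overhead in (10.0, 2.0):  # assumed per-call costs in microseconds
    calls = max_draw_calls(60, 0.5, overhead)
    print(f"{overhead:4.1f} us/call -> {calls} draw calls per frame")
```

Lower per-call overhead is exactly what thinner APIs such as Mantle promise; the sketch only shows why it matters, not how large the gain is in practice.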

What serious game developers want instead

Many serious game developers want OS developers to take a different approach towards rendering APIs & device drivers, and have been talking to the graphics hardware vendors for years to get it: a platform that does not stand in the way and gives game developers full access to the hardware if they wish.

- game engines should be able to take over large parts of the responsibilities that the rendering API & device driver have today

- driver should be as thin an abstraction over the hardware as possible, just enough to not force developers to distinctly program for Nvidia OR AMD OR Intel OR Mali OR PowerVR OR VideoCore

- key aspect: game engine has explicit control over (most) memory & abstracted hardware components

- Serious game developers would sacrifice OS security for performance any time
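What "explicit control over memory" can mean for an engine is illustrated by a linear (bump-pointer) arena: one up-front allocation, cheap aligned sub-allocations, and a single reset per frame. This is a Python sketch of the general technique under that assumption; real engines do this in native code, and `LinearArena` is a hypothetical name, not an API of any engine or driver.

```python
class LinearArena:
    """Engine-managed memory: one up-front allocation, bump-pointer sub-allocation,
    and a single O(1) reset per frame instead of many individual frees."""

    def __init__(self, size: int) -> None:
        self.buffer = bytearray(size)  # the only "real" allocation
        self.offset = 0

    def alloc(self, size: int, align: int = 16) -> memoryview:
        if align <= 0 or align & (align - 1):
            raise ValueError("alignment must be a power of two")
        start = (self.offset + align - 1) & ~(align - 1)  # round up to alignment
        end = start + size
        if end > len(self.buffer):
            raise MemoryError("arena exhausted")
        self.offset = end
        return memoryview(self.buffer)[start:end]  # a view into the arena, no copy

    def reset(self) -> None:
        """Release everything allocated this frame at once.
        Views handed out earlier must not be used afterwards (caller's duty)."""
        self.offset = 0


arena = LinearArena(1024)
vertices = arena.alloc(10)
indices = arena.alloc(8)  # starts at offset 16 because of the 16-byte alignment
print(arena.offset)  # 24
arena.reset()
```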

Mantle in particular offers a real benefit, but AMD lags: it keeps Mantle closed for too long and waits too long to add it to its Linux drivers, which benefits Microsoft Windows.

Video game consoles

Video game consoles have been designed according to the needs and wants of game developers. They additionally enforce hard-to-break copy protection. However, PC games exist not because their copy protection is easier to circumvent, but because some people do buy PC games. Curiously, on the Wii U, Nintendo's newest console, homebrew is hard to achieve, while circumventing the copy protection to pirate games is simple![3] The console hardware is usually subsidized. Yet the PC as a gaming platform still exists. And while sales of desktop computers are said to be dropping, and many developers/publishers have denounced exclusive titles, there are PC games. Why?

PC gaming on AMD Steam Machines

"The fact that Intel is perfecting its 14 nm production, with second-generation products already out of the gates, while AMD is still stuck with 28 nm, says a lot about the current competitive situation.

After showing off with the Xbox One and the PlayStation 4 but failing to bring Mantle to both..., why hasn't AMD brought out an APU with a TDP of 300 W combining:

- 4× 4 GHz "Steamroller" cores

- 20× GCN CUs (i.e. a Radeon R9 285 "Tonga PRO"-class GPU)

- IOMMUv2 to support Heterogeneous Memory Management

- a 384-bit GDDR5 or 1024-bit HBM memory controller

Why hasn't AMD

- ported their low-level Mantle API to Linux yet? (AMD CodeXL, AMD GPUPerfAPI and AMD GPU PerfStudio have already been ported to Linux!)

- intensified their co-operation with the Valve Corporation?

- invested in clever driver programming to benefit from all the bandwidth and the unified virtual memory address space with the low-level rendering API "Mantle"?

Instead we have lousy bottom-to-middle-class APUs with barely 'good enough for Sims' GPUs that don't spark anyone's interest."

The good

- develop for substantial numbers (millions) of customers all with identical computing and peripheral hardware, identical operating system, identical operating system version, identical configuration (since there is not much to configure...)

- expose (almost) all of the hardware functionality to the game developer, not just some API; the extreme opposite is Gallium3D, and there Carmack's famous "layers of crap" remark makes sense

- grant explicit control over the entire memory

- stable prices for games

The bad

- game consoles significantly slow down the evolution of game programming: the PS2 (32 MiB + 4 MiB) was released in Mar 2000, the PS3 (256 MiB + 256 MiB) in Nov 2006 and the PS4 (8 GiB unified) in Nov 2013

- the adoption of new, sometimes even seminal features by game engines has been held back by the lack of support in the console market

- fly in the ointment I: several PC gamers have already adopted multi-monitor setups or 4K displays. If you can afford it, PC gaming it is.

- fly in the ointment II: if you think the Oculus Rift is amazing with its 1280×800, wait for future products with higher resolutions... Even if Sony and Microsoft come up with their own products, will the computational power suffice? What about the latency of ≤ 3 ms? I don't think cloud computing can cover this.

- fly in the ointment III: Titanfall gameplay videos run at 60 fps on YouTube, while experiments with the Oculus Rift revealed that ≥ 95 fps are required.

- 100–130 ms latency??? http://www.slideshare.net/DevCentralAMD/gs4141-optimizing-games-for-maximum-performance-and-graphic-fidelity-by-devendra-raut
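The arithmetic behind the first and last items of this list, as a small sketch: memory growth per console generation (using the figures listed above; the day of the month is an assumption, since only month and year are given) and how the per-frame time budget shrinks when going from 60 fps to the ≥ 95 fps quoted for the Oculus Rift.

```python
from datetime import date

# Memory (MiB) and release month per console generation, as listed above;
# the day of the month is an assumption, the list only gives month and year.
CONSOLES = [
    ("PS2", date(2000, 3, 1), 32 + 4),
    ("PS3", date(2006, 11, 1), 256 + 256),
    ("PS4", date(2013, 11, 1), 8 * 1024),
]


def growth_per_generation(consoles):
    """Yield (name, years since predecessor, memory growth factor)."""
    for (_, d0, m0), (name, d1, m1) in zip(consoles, consoles[1:]):
        yield name, round((d1 - d0).days / 365.25, 1), m1 / m0


def frame_time_ms(fps: float) -> float:
    """Time budget for one frame."""
    return 1000.0 / fps


for name, years, factor in growth_per_generation(CONSOLES):
    print(f"{name}: {years} years later, {factor:.1f}x the memory")
print(f"60 fps -> {frame_time_ms(60):.1f} ms per frame, 95 fps -> {frame_time_ms(95):.1f} ms")
```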

The ugly

- Every console is a walled garden; console makers pay for exclusive games

- Mantle could technically run on the PS4 and the Xbox One, but is not wanted there (walled garden)

- there are OpenGL wrappers available for the PlayStation 3 ...

- ... but their performance is considerably worse on the Cell than the performance of Sony's custom API PSGL

- ... but game developers repeatedly stated that they hate programming their game engines in a way that performs well on the Cell anyway

- ... but few games use OpenGL, more use PSGL, and most use libGCM or even program to the bare metal

- PSGL might be based on OpenGL ES 1.0, but it has a lot of hardware-specific extensions, since OpenGL ES 1.0 doesn’t even offer programmable shaders. Ironically enough, PSGL uses Cg (programming language) for shaders, which was developed by Nvidia in collaboration with Microsoft and is closely related to Direct3D’s HLSL, but not to OpenGL’s GLSL.

- PS4 introduced new APIs: GNM and GNMX and also a new shader language: PSSL (PlayStation Shader Language). Ask Sony for documentation...

The hardware

Notes:

- (1) GPU configuration given as Unified Shaders : Texture Mapping Units : Render Output Units

- (2) AMD: 1 CU (Compute Unit) = 64 USPs : 4 TMUs : 1 ROP; Nvidia: 1 SMX = 192:8:4, 1 SMM = 128:8:4

- (3) Clustered Multi-Thread: one such "core" has only half the floating-point power (!); a "module" has equal floating-point and double the integer power

| Product | Price | CPU microarchitecture | CPU cores | CPU freq. (GHz) | GPU microarchitecture | GPU config (1) | Compute units (2) | GPU freq. (MHz) | Memory type | Memory amount | Bus width (bit) | Bandwidth (GB/s) | Zero-copy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PlayStation 4 | € 400,– | customized Jaguar | 2×4 | 1.6 | GCN 1.1 | 1152:?:32 | 18 | 800 | GDDR5-5500 | 8 GiB | 256 | 176[4] | Yes |
| Xbox One | € 500,– | customized Jaguar | 2×4 | 1.75 | GCN 1.1 | 768:?:16 | 12 | 853 | DDR3-2133 + ESRAM-3412 | 8 GiB + 32 MiB | 256 + 1024 | 68.3 + 204 | No |
| Wii U | € 240,– | Espresso | 3 | 1.24 | Latte | 320:16:4 | – | 550 | DDR3-1600 + eDRAM | 2 GiB + 32 MiB | 64 + 1024 | 10.3 + 70 | ? |
| Radeon R7 260 ("Bonaire") | € 100,– | – | – | – | GCN 1.1 | 768:48:16 | 12 | 1000 | GDDR5 | 1 GiB | 128 | 96 | Yes |
| Radeon R7 265 ("Curaçao PRO") | € 120,– | – | – | – | GCN 1.1 | 1024:64:32 | 16 | 900 | GDDR5 | 2 GiB | 256 | 179.2 | Yes |
| Radeon R9 270 ("Curaçao PRO") | € 140,– | – | – | – | GCN 1.1 | 1280:80:32 | 20 | 900 | GDDR5 | 2 GiB | 256 | 179.2 | Yes |
| Radeon R9 290X ("Hawaii XT") | € 330,– | – | – | – | GCN 1.1 | 2816:176:64 | 44 | 1000 | GDDR5 | 4 GiB | 512 | 320 | Yes |
| GeForce GTX Titan Black | € 855,– | – | – | – | Kepler | 2880:240:48 | 15 SMX | 890 | GDDR5 | 6 GiB | 384 | 336.4 | No |
| A10-7850K (Kaveri, FM2+, 95 W) | € 150,– | Steamroller | 4 (3) | 3.7 | GCN 1.1 | 512:32:8 | 8, R7 | 720 | DDR3-2133 | – | 128 | 35.32 | Yes |
| Core i7 4770R (BGA1364) | $ 392,– | Haswell | 4 | 3.2 | Iris Pro Graphics 5200 | 160:8:4 | 40 EUs | 1300 | DDR3-1600 + 128 MiB eDRAM | – | 128 | 25.6 + 50 | No |
| Athlon 5350 (Kabini, AM1, 25 W) | € 50,– | Jaguar | 4 | 2.05 | GCN 1.0 | 128:8:4 | 2, R3 | 600 | DDR3-1600 | – | 64 | 12.8[5] | No |
| A6 6310 (Beema, 15 W) | ? | Puma | 4 | 2.0 | GCN 1.1 | 128:8:4 | 2, R4 | 800 | DDR3L-1866 | – | 64 | 15 | No |
| Tegra K1 | $ 192,– | Cortex-A15 | 4 | 2.4 | Kepler | 192:8:4 | 1 SMX | 950 | DDR3L | 2 GiB | 64 | 17 | No |
| Tegra M1 | – | Cortex/Denver | ? | ? | Maxwell | 128:8:4? | 1 SMM | ? | ? | ? | ? | ? | No[6][7][8][9] |
| Atom Z3580 | ? | Silvermont | 4 | 2.13 | PowerVR 6 (Rogue) G6430 | ? | 4 | 533 | LPDDR3-1066 | – | 64 | 8.5 | No |
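Several columns of the table follow from simple formulas: theoretical peak bandwidth is the bus width in bytes times the effective transfer rate, and on GCN the shader count is 64 × the number of compute units (note 2). A small sketch that reproduces some of the table's figures:

```python
def peak_bandwidth_gb_s(bus_width_bit: int, transfers_mt_s: float) -> float:
    """Theoretical peak bandwidth in decimal GB/s:
    bytes per transfer (bus width / 8) x million transfers per second / 1000."""
    return bus_width_bit / 8 * transfers_mt_s / 1000


def gcn_shaders(compute_units: int) -> int:
    """GCN: 64 unified shader processors per compute unit (note 2)."""
    return compute_units * 64


# (bus width in bit, effective transfer rate in MT/s), taken from the table
ROWS = {
    "PlayStation 4, GDDR5-5500": (256, 5500),
    "Xbox One, DDR3-2133": (256, 2133),
    "Core i7 4770R, DDR3-1600": (128, 1600),
    "Athlon 5350, DDR3-1600": (64, 1600),
    "Atom Z3580, LPDDR3-1066": (64, 1066),
}
for name, (width, rate) in ROWS.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
print(gcn_shaders(18), gcn_shaders(12))  # PS4 and Xbox One shader counts
```

These are theoretical peaks; sustained bandwidth in real workloads is lower.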

- For € 400,– the PS4 hits an uncontested sweet spot in performance/price ratio. When can we install Linux on the PS4? DualShock 4 support was mainlined in Linux 3.15.[2]

- "AMD Kaveri Docs Reference Quad-Channel Memory Interface, GDDR5 Option"; yet the BKDG (49125_15h_Models_30h-3Fh_BKDG), page 87, states: "The following restrictions limit the DIMM types and configurations supported by the DCTs: GDDR5 memory is not supported." DCT = DRAM controller; I think this is the on-die memory controller PHY. Socket FP3 is for netbooks and Socket FM2+ for desktops.

- Iris Pro Graphics 5200: what are the latency and the bandwidth of the 128 MiB off-die eDRAM? Benchmarks with the LLC disabled/enabled, using DDR3-800 and DDR3-2133, would be nice. "Addressing the Memory Bandwidth Problem"

Various benchmarks

- Phoronix: E-350 Bobcat beats Athlon 5350; Benchmarks: Tegra K1 vs. Athlon 5350; Benchmarks: Tegra K1 vs. Atom Z530; Benchmarks: Tegra 4 vs. Atom Z3480

- Please keep in mind that hardware vendors have been repeatedly caught optimizing their device drivers to score better on popular benchmarks

- Please note that benchmarking real-world applications should be preferred

- Please note that some benchmarks tax only the CPU, while others concentrate on the GPU (also note the API overhead)

- Please note that bottlenecks other than CPU or GPU are conceivable, e.g. the available memory bandwidth

- Please note that latency is not benchmarked, but can still be important to the end user

- Nvidia continues to support its proprietary Linux drivers in a way that leaves customers little to complain about, i.e. they are stable and the performance is on par with the Windows drivers; sadly, features that were present in the Linux driver have been removed again because they were not present in the Windows drivers; also, the question remains whether the Nvidia Linux driver is as good as it could possibly be (e.g. some video games running on Linux clearly outperform the same games running on Windows.)

- There is only a Windows implementation of Mantle, though this could change by the end of 2014: "AMD Reportedly Plans To Bring Mantle To Linux, Calls Mantle An Open-Source API"
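Because latency and stutter do not show up in an average-fps number, frame-time percentiles are a common complement. The sketch below uses a nearest-rank percentile on hypothetical frame times (95 smooth frames at 10 ms, 5 hitches at 50 ms); the data is invented for illustration.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """What most benchmarks report: frames rendered divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, 0 < pct <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical run: 95 smooth frames (10 ms) and 5 hitches (50 ms).
frame_times = [10.0] * 95 + [50.0] * 5
print(f"average: {average_fps(frame_times):.1f} fps")
print(f"99th percentile frame time: {percentile(frame_times, 99):.0f} ms")
```

The average looks like a comfortable 83 fps, while the worst 1 % of frames take 50 ms each, i.e. the equivalent of 20 fps.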

Why HSA is a big deal

Memory bandwidth can be a huge limit to scaling processor graphics performance, especially since the GPU has to share its limited bandwidth to main memory with a handful of CPU cores. Intel's workaround with the Haswell multi-chip modules was to pair the CPU/GPU with 128 MiB of eDRAM, used as a victim cache for the L3 cache, yielding an unimpressive 50 GB/s in addition to the 25 GB/s available from main memory!

- The available memory bandwidth can be a serious bottleneck that drastically limits performance; this is the very reason why GDDR5 SGRAM was developed for graphics instead of relying on DDR3 SDRAM. Some benchmarks showing such a case can be found on Phoronix.[5] But so far, only AMD's Kaveri and the PlayStation 4 (sic!) have HSA features that facilitate zero-copy memory operations, making the PS4 hardware even more unique and interesting to develop for and on.

- Neither Intel nor Nvidia has joined the HSA Foundation so far; its members need to push for a much bigger presence on the market to make this technology successful. Samsung, Qualcomm and Linaro are listed as members, so Linux and GCC should also be able to benefit from hardware HSA features in due time.

- Mantle has been designed from scratch by AMD and EA Digital Illusions CE (EA being a competitor of Valve on Windows). Will Mantle, written only with GCN in mind (GCN features a cache-coherent L2 cache, which pre-GCN microarchitectures, AFAIK, did not), target HSA hardware features?

For me, HSA is a big deal because of zero-copy. Memory throughput is the bottleneck on low-power, low-price hardware![5] Gaming PCs have discrete graphics cards with their own graphics memory. In other devices (mobile devices, Intel's and AMD's desktop APUs, Nvidia's Tegra, the game consoles) the CPU and the GPU share the physical memory and access it through the same memory controller. The question is whether this memory is partitioned or unified. Apart from using highly clocked GDDR5 memory over 256-bit or wider channels like the PlayStation 4, zero-copy is obviously something we need, to save precious bandwidth and also significant amounts of electrical power. With zero-copy, instead of copying large data blocks, we pass (copy) pointers referring to their location and we are done.
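The difference between copying and passing a reference can be shown in miniature with Python's `bytes` (copy) versus `memoryview` (reference to the same buffer). This is only an analogy inside one process, not actual CPU/GPU pointer sharing; HSA applies the same idea across CPU and GPU via a shared virtual address space. The function names are hypothetical.

```python
def upload_by_copy(staging: bytearray) -> bytes:
    """Classic path: the data is duplicated into a second buffer."""
    return bytes(staging)  # allocates and copies len(staging) bytes


def share_zero_copy(staging: bytearray) -> memoryview:
    """Zero-copy path: hand over a reference to the very same memory."""
    return memoryview(staging)  # no bytes are copied


vertex_data = bytearray(16 * 1024 * 1024)  # 16 MiB of (empty) vertex data
copied = upload_by_copy(vertex_data)       # a second 16 MiB buffer now exists
shared = share_zero_copy(vertex_data)      # still only one 16 MiB buffer
vertex_data[0] = 42                        # the "CPU" updates the data
print(copied[0], shared[0])                # 0 42: the copy is stale, the view is not
```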

Heterogeneous System Architecture introduced pointer-sharing between CPU and GPU, i.e. zero-copy. Neither Intel nor Nvidia is part of the HSA Foundation, and I am not aware that their products (Atom SoCs, Tegra K1) facilitate zero-copy through some technology of their own. Anyway, the software probably needs to make active use of this possibility, so it needs to be rewritten. The rendering APIs (Direct3D, OpenGL, Mantle) may need augmentations as well. The PS4 uses GNM, GNMX and the PlayStation Shader Language. Clearly, Direct3D and OpenGL are facing more and more alternatives.

Of course, given that the GeForce GTX Titan Black has 336.4 GB/s while the Kaveri has 35.32 GB/s, zero-copy can offer only a small advantage. How big is the effort-to-performance ratio of HSA-facilitated zero-copy on a Kaveri-based computer? How big on the PlayStation 4?

Does anybody have statistics on how many copies between main memory and graphics memory could be saved? PCIe 3 does not seem to be the bottleneck; the system memory is. Why not do it like the PS4?
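A rough way to put numbers on the question: a purely bandwidth-bound copy reads and writes every byte once, so its cost is 2 × size / bandwidth. The sketch compares how much of each device's own memory bandwidth (the two figures quoted above) a copy would consume. The 64 MiB per frame is a hypothetical amount, and caches, overlap with other work and PCIe transfers are not modeled.

```python
MIB = 2**20


def copy_time_ms(num_bytes: int, bandwidth_gb_s: float) -> float:
    """Time one in-memory copy takes if it is purely bandwidth-bound:
    every byte is read once and written once."""
    traffic_bytes = 2 * num_bytes
    return traffic_bytes / (bandwidth_gb_s * 1e9) * 1000.0


def share_of_frame(num_bytes: int, bandwidth_gb_s: float, fps: float = 60.0) -> float:
    """Fraction of the frame budget spent on that copy."""
    return copy_time_ms(num_bytes, bandwidth_gb_s) / (1000.0 / fps)


for name, bw in (("Kaveri", 35.32), ("GTX Titan Black", 336.4)):
    share = share_of_frame(64 * MIB, bw)  # hypothetical 64 MiB copied per frame
    print(f"{name}: {copy_time_ms(64 * MIB, bw):.2f} ms, {share:.1%} of a 60 fps frame")
```

Under these assumptions, the same copy eats roughly a fifth of a 60 fps frame on the Kaveri but only a few percent on the Titan Black, which is why zero-copy matters most on the low-bandwidth hardware.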

The PlayStation 4 hardware, not the Sony walled garden, is IMHO really in the sweet-spot of price to computing power to power consumption. BUT maybe 2 years from now, maybe sooner, €400,– hardware with much more computing power will be available without the walled garden. Whether it runs Windows only, which is in the process of becoming a walled garden, or is capable of running Linux will be interesting for the hardware vendor.

Valve is praising the PC gaming market as awesome because it lacks a walled garden (Microsoft is rapidly changing that, to the benefit of Linux), but also because people upgrade their hardware more often than every 6 years. Some PC gamers also invest more into their hardware.

And while the Oculus Rift could be much more immersive than a 4K resolution display, all of them, the Rift, 4K resolution displays and the Rift's successors, need much more computational power than we can buy today! This is good news for at least Nvidia and AMD, maybe Intel and Vivante as well. The Oculus Rift additionally needs a low-latency software stack, so no "cloud-computation crap". A 4K resolution display might not offer more fun than a 1080p display, but a 4K resolution Oculus Rift is probably far superior to a 1080p Oculus Rift! I do not see Kaveri-like computers delivering the necessary power with a 95 W TDP and 35.32 GB/s shared by CPU and GPU. Kaveri-like computers are low-price systems that simply deliver more computing power than similarly priced systems without zero-copy, because they remove the memory throughput bottleneck partly or completely. The PS4 costs € 400,– total, with casing and power supply. So I am curious about hardware like the PS4, without the walled-garden stuff, one, two or three years from now. The GeForce GTX Titan Black costs € 850,–, way over what I am willing to pay for a mere graphics card. Kaveri is all about price-to-performance ratio ("bang for the buck") and performance per watt ("bang for the watt"). I'd rather invest less into ICs, which could be replaced every two years, and more into peripherals.
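How much more computational power? A crude first indicator is the number of pixels that must be shaded per second. The sketch compares the display modes mentioned above; the 95 Hz figure comes from the list further up, the "4K Rift" is hypothetical, and overdraw, anti-aliasing and the extra cost of stereo rendering and lens distortion are ignored.

```python
def megapixels_per_second(width: int, height: int, fps: float) -> float:
    """Pixels that have to be shaded per second (ignoring overdraw, MSAA, etc.)."""
    return width * height * fps / 1e6


DISPLAYS = {
    "1080p @ 60 Hz": (1920, 1080, 60),
    "Rift 1280x800 @ 95 Hz": (1280, 800, 95),
    "4K UHD @ 60 Hz": (3840, 2160, 60),
    "hypothetical 4K Rift @ 95 Hz": (3840, 2160, 95),
}
baseline = megapixels_per_second(*DISPLAYS["1080p @ 60 Hz"])
for name, mode in DISPLAYS.items():
    mpix = megapixels_per_second(*mode)
    print(f"{name}: {mpix:.1f} Mpx/s ({mpix / baseline:.1f}x 1080p60)")
```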

- Nintendo hit a spot with their Wii (while the Wii U is probably a mistake).

- A virtual reality head-mounted display is similar: a game changer, but at a much higher price than the Wii.

- Microsoft's Kinect and Sony's counterpart came later. Not only would many people not accept a camera connected to the Internet and driven by a proprietary walled-garden operating system in their living rooms, ... MUAHAHA ..., but the Wii had already failed to implement its motion control in a "fun" manner in more complicated "light saber" games. It worked only in simple games. The concept is fun, though, and seems like a good complement to VR head-mounted displays.

Adoption

- List of games with Oculus Rift support; Real-time rendering

- game developers want results / developer hour

- game publishers want results / investment

- game players want fun, immersion, competition, ...

- Glide API was quite successful

- 1996-06-22 Quake for MS-DOS

- Some handheld game consoles adopted Linux, e.g. GP2X, Pandora and Neo Geo X. Nvidia Shield runs Android.

- The Debian repositories contain a lot of the available open-source video games prepackaged, and they run out of the box. Some are missing, e.g. Rigs of Rods. Also note that Wikipedia does not have articles on several games that are either noteworthy or simply fun.

- Mesa 3D-drivers look better and better, but you should not use them for high-performance games, but download and install the proprietary ones, e.g. AMD Catalyst.

- you can buy Games for Linux on CD, install them and play (there is no need for Wine!) ;-)

- you can install Steam for Linux and use that to purchase games

Valve Corporation

Valve Corporation wants to establish Linux as gaming platform. They ported Steam to the Linux platform. Valve's motivation:

- Valve has a digital distribution platform, called Steam. Steam competes with

- Media Markt and other retailers

- Amazon.com and other online retailers

- Mac App Store, Google Play, Windows Store and other digital distribution platforms.

- potentially any of the "big" publishers: Ubisoft, a game publisher, could once have been interested in "cloud gaming"/"cloud-based distributing"/cloud-buzzwod-bingo, as can read e.g. here. As I see it, this could easily become a competition to Valve's Steam.

- The operator of a digital distribution platform want's to make money, exactly like Media Markt want's to make money! There are exceptions, e.g. software repositories of Linux distributions accessible via the respective package management system or via ftp.

- Most Linux distributions do not aim to make money, and the entire operating system is build around the package management system. From a point of view of security, system stability this is awesome!

- The commercial digital distribution platforms aim to make money like Media Markt and their ought to compete like Media Markt!!! But since, Mac App Store, Windows Store and Google Play are operated by the same corporations, that also develop and sell the underlying operating system, there is always the danger of discrimination! And the developer of the operating system has many ways to put competing "digital distribution platform" of at a disadvantage. Some people complain, e.g. Serious Sam developer rails against “Walled Garden” of Windows 8 and more, Valve want's to compete, and a way to ensure, that it will at any time have the possibility to evade discrimination, so that it can continue competing, is to re-base their Steam to an operating system, where they cannot be bullied. To this effect, the software they are marketing and distributing has to run on this new operating system as well, or at least, should be easily portable to it. Replacing DirectX with OpenGL lessens the workload to port a game. Wine is a crutch, but an option for high-performance games. To work well, they have to be ported to the Linux kernel API (and not necessarily the POSIX-API!) in conjunction with something like SDL or SFML.

- Valve does not have the market power to pressure other companies to go this way. Valve can do two things:

- identify and mend the shortcomings of "Linux as gaming platform" (i.e. the Linux kernel, OpenGL and its implementation in the proprietary device drivers, and some API for sound and input, defined and implemented in SDL, SFML, etc.).

- convince (not persuade) game developers (i.e. possible customers of their distribution platform) of the technical and financial merits of switching!

- Valve is working on SteamOS, a Debian fork, so they won't go down the same road as Google with their bionic stuff.

- Valve is working on ironing out shortcomings of Linux as a gaming platform:

- they financed the further development of Simple DirectMedia Layer 2.0 and induced its release under a non-copyleft license

- a debugger for game developers: glslang?

- For Linux there are VOGL and Valgrind

Rendering APIs

Moved to User:ScotXW/Rendering APIs

Multi-monitor

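Yes, multi-monitor setups are supported on Linux, at least through AMD Eyefinity with AMD Catalyst and through the X11 extensions Xinerama and RandR (on X11, and via XWayland for X11 clients on Wayland). Games that make use of this include e.g. Serious Sam 3: BFE.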
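A game that stretches one window across all monitors needs the bounding rectangle of all outputs. The sketch below computes it from per-monitor rectangles; the `Monitor` struct and `spanning_rect` are hypothetical, and in practice the rectangles would come from the windowing system, e.g. via RandR or SDL's `SDL_GetDisplayBounds()`.

```c
/* Hypothetical monitor rectangle in desktop coordinates, similar in spirit
 * to what RandR or Xinerama report for each output. */
typedef struct {
    int x, y;          /* top-left corner of this output on the desktop */
    int width, height; /* resolution in pixels */
} Monitor;

/* Writes the bounding box of all n monitors to *out, i.e. the area a single
 * window spanning every screen must cover. Returns 1 on success, 0 if n <= 0. */
int spanning_rect(const Monitor *mons, int n, Monitor *out) {
    if (n <= 0) return 0;
    int x0 = mons[0].x, y0 = mons[0].y;
    int x1 = mons[0].x + mons[0].width;
    int y1 = mons[0].y + mons[0].height;
    for (int i = 1; i < n; i++) {
        if (mons[i].x < x0) x0 = mons[i].x;
        if (mons[i].y < y0) y0 = mons[i].y;
        if (mons[i].x + mons[i].width > x1) x1 = mons[i].x + mons[i].width;
        if (mons[i].y + mons[i].height > y1) y1 = mons[i].y + mons[i].height;
    }
    out->x = x0;
    out->y = y0;
    out->width = x1 - x0;
    out->height = y1 - y0;
    return 1;
}
```

For three 1920×1080 monitors side by side at x = 0, 1920 and 3840, this yields a 5760×1080 rectangle, i.e. a 16:3 aspect ratio the game has to render for.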

Existing video games with open-source engines and free content

User:ScotXW/Free and open-source software#Free and open-source game engines with free content

Tools to create game content:

- Wolfenstein 3D – Director's Commentary with John Carmack – John Carmack comments on Wolfenstein 3D, which was released on May 5, 1992, for DOS

- the implementation of some copy protection can be "walled garden"-like, but the walled garden does not have to engulf the entire operating system!

- QuakeCon 2012 – John Carmack Keynote – 3 hours long; there are better videos of him explaining technical details and talking about the past (John D. Carmack wrote Commander Keen)

Adoption of Linux as gaming platform by game engines

The following table lists game engines used in current and upcoming video games (see also Template:Video game engines):

Note: "platform" refers to the software, not to the hardware! The only relevant hardware parameters are computing power and the available peripherals (the human–machine interfaces).

Hence it is tempting to mention the PlayStation Vita, the Pandora or the Nvidia Shield (see also Template:Handheld game consoles). Linux-powered devices (other than homebrew and TiVo-like devices) are especially interesting, because they build on the development of the Linux platform, leaving the available computing power as the only barrier. That means a game engine with solid, performant Linux support can run on any such platform, e.g. the Pandora or its successors.

| Name | Vulkan | OpenGL | Direct3D | Audio/input: SDL/SFML/etc. | Audio/input: DirectX | Linux | Windows | Mac OS X | PS3 | PS4 | Xbox 360 | Xbox One | Wii | Wii U | Other | Build | Programming language | Language bindings | Scripting | FOSS | License |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frostbite | WIP | ✘ | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C? | C++? | ? | No | proprietary |
| id Tech 6 | ✔ | Yes | ? | ? | Yes | WIP | Yes | Yes | Yes | Yes | Yes | Yes | ? | ? | ? |  | C++, AMPL, Clipper | ? | ? | No | proprietary |
| CryEngine | WIP | Yes | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C++? | ? | ? | No | proprietary |
| Unigine | WIP | Yes | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C++? | ? | ? | No | proprietary |
| Unreal Engine | WIP | Yes | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C++? | ? | ? | No | proprietary |
| Unity | WIP | Yes | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C++? | ? | ? | No | proprietary |
| 4A Engine | WIP | Yes | Yes | ? | Yes | Yes | Yes | Yes | ? | ? | ? | ? | ? | ? | ? |  | C++? | ? | ? | No | proprietary |
| dhewm3 | WIP | Yes | ? | SDL, OpenAL | ? | Yes | Yes | Yes | Yes | ? | Yes | ? | ? | ? | ? | CMake | C++? | ? | ? | Yes | free and open-source |

| Engine | Description |
|---|---|
| DarkPlaces engine | a Quake engine fork, adopted by Nexuiz and Xonotic |
| dhewm3 | an id Tech 4 fork (Doom 3 engine) |
| iodoom3 | another id Tech 4 fork |
| ioquake3 | an id Tech 3 fork (Quake III engine) |
| OpenMW | an open-source re-implementation of the engine of The Elder Scrolls III: Morrowind (originally Gamebryo) https://openmw.org/en/ |
| RBDoom3BFG | a community port of id Software's Doom 3 BFG Edition to Linux |
| Spearmint | a visually enhanced derivative of ioquake3 |
| Daemon | a combination of the ioquake3 and ETXreaL open-source game engines with many advanced modifications, used by Unvanquished |
| Watermint | a fork of the Spearmint engine that aims to ship a realistic open-source game |

Future

John D. Carmack has repeatedly talked about (finally) adopting ray tracing instead of rasterization for rendering in video games.

- Quake Wars: Ray Traced – research by Intel using Carmack's Quake engine

- Wolfenstein: Ray Traced – research by Intel using Carmack's Quake engine

- OptiX – a proprietary Nvidia ray-tracing engine built on the CUDA API; just as AMD's Mantle is AMD-only, CUDA is only available for Nvidia GPUs (and it competes with OpenCL)

Technical POV

This article is about performing the rendering computation fast enough that the series of rendered images induces the illusion of movement in the user's brain. This illusion makes it possible to interact with software that computes each image taking the user's input into account. The frame rate of such a series of images is measured in frames per second (fps). Different rendering techniques exist, e.g. ray tracing and rasterization.
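The frame rate directly fixes the time budget per frame: at f frames per second, input handling, simulation and rendering together may take at most 1000/f milliseconds, so 60 fps leaves about 16.7 ms and 30 fps about 33.3 ms. A small sketch of this arithmetic (the function names are hypothetical):

```c
/* Time available to produce one frame, in milliseconds, at a target frame rate. */
double frame_budget_ms(double target_fps) {
    return 1000.0 / target_fps;
}

/* Frame rate that results when every frame takes frame_ms milliseconds. */
double fps_from_frame_ms(double frame_ms) {
    return 1000.0 / frame_ms;
}

/* Nonzero if a measured frame time fits into the budget for target_fps. */
int meets_target(double frame_ms, double target_fps) {
    return frame_ms <= frame_budget_ms(target_fps);
}
```

For example, a frame that takes 20 ms yields only 50 fps and misses a 60 fps target, while 16 ms fits.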

Technical Questions

Comparing

- this: http://www.phoronix.com/scan.php?page=article&item=nvidia-open-linux44&num=3

- with the technical specs [[1]].

Why is the GeForce GTX 780 Ti (GK110-425-B1) a) slower than the GeForce GTX 680 (GK104-400-A2), and b) why is the discrepancy bigger? This kind of behavior illustrates how hard it must be to write graphics device drivers.
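Why the result is surprising can be seen from the theoretical peak throughput on the spec sheets, using the common convention that each shader core completes one fused multiply-add (counted as 2 floating-point operations) per clock. The figures used below are assumptions to be checked against the spec sheet: 2880 CUDA cores at an 875 MHz base clock for the GTX 780 Ti, and 1536 CUDA cores at 1006 MHz for the GTX 680.

```c
/* Theoretical peak single-precision throughput in GFLOPS, assuming every
 * shader core completes one fused multiply-add (2 FLOPs) per clock cycle. */
double peak_gflops(int shader_cores, double clock_mhz) {
    return 2.0 * shader_cores * clock_mhz / 1000.0;
}
```

With these inputs the GTX 780 Ti comes out at about 5040 GFLOPS and the GTX 680 at about 3090 GFLOPS, roughly 1.6 times in favor of the 780 Ti. Peak numbers ignore memory bandwidth and the clocks actually reached under a given driver, so a benchmark that shows the opposite ordering presumably points at the driver rather than the hardware.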

Then again the same behavior with AMD:

References

- "Android Nears 80% Market Share In Global Smartphone Shipments".

- "HID for 3.15". 2014-04-02.

- http://fail0verflow.com/media/30c3-slides/#/

- "Interview with PS4 system architect". 2013-04-01.

- "DDR3-800 to DDR3-1600 scaling performance AMD's Athlon 5350".

- "No unified virtual memory in Maxwell".

- http://www.anandtech.com/show/7515/nvidia-announces-cuda-6-unified-memory-for-cuda

- http://www.gamersnexus.net/guides/1383-nvidia-unified-virtual-memory-roadmap-tegra-future

- http://wccftech.com/nvidia-previewing-20nm-maxwell-architecture-unified-memory-architecture-gtc-2014-demo-upcoming-geforce-driver/

- "Kaveri microarchitecture". SemiAccurate. 2014-01-15.