User:ZachsGenericUsername/sandbox/Deep reinforcement learning

| This is not a Wikipedia article: It is an individual user's work-in-progress page, and may be incomplete and/or unreliable. |

Deep reinforcement learning (DRL) is a machine learning method that combines principles of reinforcement learning and deep learning to gain the benefits of both.

Deep reinforcement learning has a wide range of applications, including but not limited to video games, computer science, healthcare, and finance. Deep reinforcement learning algorithms can take in a very large amount of input data (e.g. every pixel rendered to the screen in a video game) and decide which action to take in order to reach a goal.[1]

Overview

Reinforcement Learning

Reinforcement learning is a process in which an agent learns to perform actions through trial and error. After each action, the agent receives a reward indicating whether that action was good or bad, and it aims to optimize its behavior based on this reward.[2]

Deep Learning

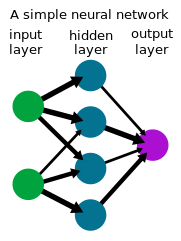

Deep learning is a form of machine learning that uses an artificial neural network with multiple layers to transform a set of inputs into a set of outputs.

Deep Reinforcement Learning

Deep reinforcement learning combines the reinforcement learning technique of rewarding an agent based on its actions with the deep learning technique of using a neural network to process data.

Applications

Deep reinforcement learning has been used in a variety of applications, including:

- The AlphaZero algorithm, developed by DeepMind, which has achieved superhuman performance in games such as chess, shogi, and Go.[3]

- Image enhancement models such as GANs and U-Net, which have attained much higher performance than previous methods on tasks such as super-resolution and segmentation.[1]

Training

To produce a functional agent, the algorithm must be trained toward a specific goal. There are different techniques for training agents, each with its own benefits.

Q-Learning

Q-learning is a model-free learning algorithm: it does not require a model of the environment, and it analyzes a situation to produce the action the agent should take.

Q-learning attempts to determine the optimal action in a given state. The Q value, or quality, of an action can be loosely defined as a function that takes a state "s" and an action "a" and outputs the perceived quality of taking that action in that state, where S is the set of states and A is the set of actions:

$$Q : S \times A \to \mathbb{R}$$

Training a Q-learning agent involves exploring different actions and recording, in a table, the Q values that correspond to each state and action. Once the agent is sufficiently trained, the table should accurately represent the quality of each action in each state.[4]
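The tabular procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the five-state corridor environment, the `step` function, and the values of the learning rate `alpha`, discount factor `gamma`, and exploration rate `epsilon` are all hypothetical choices made for the example. The update is the standard Q-learning rule, which moves Q(s, a) toward the observed reward plus the discounted best Q value of the next state.

```python
import random
from collections import defaultdict

# Hypothetical toy environment: a corridor of states 0..4. The agent starts in state 0;
# action 1 moves right, action 0 moves left (state 0 is a wall). Reaching state 4
# gives a reward of 1 and ends the episode; every other step gives 0.
N_STATES = 5
ACTIONS = (0, 1)

def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    if action == 1:
        next_state = min(state + 1, N_STATES - 1)
    else:
        next_state = max(state - 1, 0)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = defaultdict(float)  # the Q table: (state, action) -> estimated quality, initially 0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # Exploit, breaking ties at random so the untrained agent favors no action.
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Target: reward plus the discounted best Q value of the next state (none after the goal).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q_table = q_learning()
# Greedy policy read from the table for every non-terminal state.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
```

After training, the greedy policy read from the table should move right in every non-terminal state, since that is the shortest path to the reward.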

Deep Q-Learning

Deep Q-learning takes the principles of standard Q-learning but approximates the Q values with an artificial neural network. In many applications there is too much input data to account for (e.g. the millions of pixels on a computer screen), which would make determining the Q value of every state and action take a very long time. By using a neural network to process the input and predict a Q value for each available action, the algorithm can be much faster and can therefore process more data.[5]
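A minimal sketch of the core deep Q-learning computation follows. It assumes the usual setup in which the network outputs one Q value per action and is trained by regression toward bootstrapped targets. To keep the sketch dependency-free, a hypothetical linear model (`q_network`) stands in for a real neural network, and a separate copy of its weights plays the role of a target network. Neither detail comes from the text above, and a practical implementation would use a deep learning library with automatic differentiation.

```python
from typing import List, Sequence

Weights = List[List[float]]  # one row of weights per action

def q_network(weights: Weights, features: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a neural network: maps state features to one Q value per action."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]

def td_targets(target_weights: Weights, rewards: Sequence[float],
               next_states: Sequence[Sequence[float]], dones: Sequence[bool],
               gamma: float) -> List[float]:
    """Regression targets y = r + gamma * max_a' Q(s', a'), with no bootstrapping at episode end."""
    targets = []
    for reward, next_state, done in zip(rewards, next_states, dones):
        future = 0.0 if done else gamma * max(q_network(target_weights, next_state))
        targets.append(reward + future)
    return targets

def q_loss(online_weights: Weights, states: Sequence[Sequence[float]],
           actions: Sequence[int], targets: Sequence[float]) -> float:
    """Mean squared error between the predicted Q value of each taken action and its target."""
    errors = [(q_network(online_weights, s)[a] - y) ** 2
              for s, a, y in zip(states, actions, targets)]
    return sum(errors) / len(errors)

# Example batch of two transitions, with two state features and two actions.
weights = [[1.0, 0.0], [0.0, 2.0]]
targets = td_targets(weights, rewards=[1.0, 0.0], next_states=[[1.0, 1.0], [3.0, 0.0]],
                     dones=[False, True], gamma=0.5)
loss = q_loss(weights, states=[[0.5, 1.0], [1.0, 0.0]], actions=[1, 0], targets=targets)
```

In a full training loop, the gradient of this loss with respect to the online weights would be used to update them, and the target weights would be refreshed from the online weights from time to time.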

Challenges

Several factors can make training with reinforcement learning difficult, including the following:

Exploration Exploitation Dilemma

The exploration-exploitation dilemma is the problem of deciding whether to pursue actions already known to yield success or to explore other actions that might yield greater success.

In the greedy policy, the agent always chooses the action with the greatest Q value for the given state:

$$a^{*} = \arg\max_{a} Q(s, a)$$

With this policy, the agent may get stuck in a local maximum and fail to discover greater success, because it only maximizes the Q value given its current knowledge.

In the epsilon-greedy method, before each action the agent decides whether to prioritize exploration (taking an action with an uncertain outcome in order to gain more knowledge) or exploitation (picking the action that maximizes the Q value). At every step, a random number between zero and one is drawn. If this number is below the specified value of epsilon, the agent explores by choosing a random action; otherwise it selects the action with the highest Q value. Higher values of epsilon therefore result in more exploration.[6]
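The selection rule just described can be sketched as follows. The function names are illustrative, and the Q values for the current state are assumed to arrive as a plain list with one entry per action. The `greedy_action` helper on its own is the pure greedy policy.

```python
import random

def greedy_action(q_values):
    """Greedy policy: index of the action with the highest Q value (the first one wins ties)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])

def epsilon_greedy_action(q_values, epsilon, rng=random):
    """With probability epsilon explore (uniformly random action); otherwise exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # exploration
    return greedy_action(q_values)           # exploitation
```

With `epsilon=0` this always returns the greedy action; with `epsilon=1` every action is equally likely. Passing a seeded `random.Random` as `rng` makes runs reproducible.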

The agent's increasing competence over time can be accounted for with a Boltzmann distribution learning policy, which reduces the amount of exploration over the course of training.

Another learning method is simulated annealing. In this method, as before, the agent either explores an unknown action or chooses the action with the greatest Q value, here with probabilities given by a Boltzmann distribution over the Q values with temperature T:

$$P(a \mid s) = \frac{e^{Q(s,a)/T}}{\sum_{b} e^{Q(s,b)/T}}$$

As the temperature T decreases, the agent becomes more likely to pursue actions already known to be beneficial.[6]
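Assuming the selection rule is the Boltzmann (softmax) distribution, in which each action is chosen with probability proportional to exp(Q(s, a) / T), it can be sketched as below. The sketch also includes a hypothetical exponential cooling schedule that lowers T, and with it the amount of exploration, as training proceeds. The schedule's parameters (`t_start`, `t_min`, `decay`) are illustrative, not values from the cited source.

```python
import math
import random

def boltzmann_probabilities(q_values, temperature):
    """Probability of each action: exp(Q/T) normalized over all actions."""
    top = max(q_values)  # subtracting the max avoids overflow and leaves the probabilities unchanged
    weights = [math.exp((q - top) / temperature) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]

def boltzmann_action(q_values, temperature, rng=random):
    """Sample an action index according to the Boltzmann probabilities."""
    probs = boltzmann_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]

def temperature_schedule(step, t_start=1.0, t_min=0.05, decay=0.995):
    """Hypothetical exponential cooling: T shrinks each step but never drops below t_min."""
    return max(t_min, t_start * decay ** step)
```

At a high temperature the probabilities are nearly uniform (mostly exploration). At a low temperature almost all probability goes to the action with the highest Q value (mostly exploitation).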

Frequency of Rewards

In training reinforcement learning algorithms, agents are rewarded based on their behavior. How often the agent is rewarded, and for which events, can greatly affect both the speed of training and the quality of its outcome.

When the goal is too difficult for the learning algorithm to reach, it may never receive a reward at all. Additionally, if a reward is given only at the end of a task, the algorithm has no way to distinguish good from bad behavior during the task.[7] For example, suppose an algorithm learning to play Pong makes many correct moves but ultimately loses the point and receives a negative reward. Because the reward is so sparse, it cannot tell which paddle movements were good and which were not.
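The credit-assignment problem can be made concrete by computing discounted returns, the quantity many reinforcement learning algorithms learn from. In this sketch the reward sequence is a hypothetical Pong rally in which the only nonzero reward is the -1 received when the point is lost, and the discount factor `gamma` is an assumption.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step, computed backwards from the episode's end."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

# Hypothetical rally: four paddle moves with no reward, then the point is lost.
rally_returns = discounted_returns([0, 0, 0, 0, -1], gamma=0.5)
```

Every step, including any good paddle movements early in the rally, ends up with a negative return. The sparse reward alone therefore does not separate good moves from bad ones.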

Bias–Variance Tradeoff

When training a machine learning model, there is a tradeoff between how closely the model fits the training data and how well it generalizes to new data from the same problem. This is known as the bias–variance tradeoff. Bias is the error that comes from a model being too simple: a model with high bias cannot capture the complexity of the data and fits it poorly. Variance is the error that comes from a model being too sensitive to its particular training set: a model with high variance overfits, matching the training data very closely but failing to generalize to new data. It is therefore important to balance bias and variance, finding a model that is as simple as possible, so that it generalizes beyond the training data, without being too simple to capture the complexity of the data.[8]
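For squared-error loss the tradeoff can be stated precisely. Assume the data are generated as y = f(x) + ε, where the noise ε has zero mean and variance σ² and is independent of the training set, and let f̂ be the model learned from a randomly drawn training set. The expected prediction error at a point x then decomposes into three terms:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Making a model more flexible typically lowers the first term and raises the second. No model can reduce the noise term.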

Optimizations

Reward Shaping

Reward shaping is the process of giving an agent intermediate rewards that are customized to fit the task it is attempting to complete. For example, an agent learning the Atari game Breakout may receive a positive reward every time it hits the ball and breaks a brick, rather than only when it completes a level. This reduces the time it takes the agent to learn the task, because it has to do less guessing. However, this method makes the algorithm harder to generalize to other applications, because the rewards must be tweaked for each individual circumstance, so it is not a general solution.[9]
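The sketch below shows potential-based reward shaping, a well-known variant in which the intermediate reward is the change in a potential function Φ, F = γΦ(s') - Φ(s). It is swapped in here as an example instead of the Breakout scheme described above, because shaping terms of this form are known not to change which policy is optimal. The corridor, the goal at state 4, and the potential function `distance_potential` are hypothetical choices for illustration.

```python
from typing import Callable

GOAL = 4  # hypothetical goal state in a one-dimensional corridor

def distance_potential(state: int) -> float:
    """Hypothetical potential: higher (less negative) the closer the state is to the goal."""
    return -float(abs(GOAL - state))

def shaped_reward(reward: float, state: int, next_state: int, done: bool,
                  potential: Callable[[int], float] = distance_potential,
                  gamma: float = 0.99) -> float:
    """Environment reward plus the shaping term gamma * phi(s') - phi(s).

    The potential of a terminal state is taken to be 0, a common convention."""
    next_potential = 0.0 if done else potential(next_state)
    return reward + gamma * next_potential - potential(state)
```

With `gamma=1.0`, the shaping terms along an episode telescope to the potential of the final state minus that of the start. A detour such as stepping back and forth therefore earns no net shaping reward, while each step toward the goal is rewarded immediately.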

Curiosity Driven Exploration

The idea behind curiosity-driven exploration is to give the agent a motive to explore unknown outcomes in order to find the best solutions. This is done by "modify[ing] the loss function (or even the network architecture) by adding terms to incentivize exploration".[10] As a result, the agent is less likely to get stuck in a local maximum of achievement.
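One common way to implement curiosity is to add an intrinsic reward equal to the prediction error of a learned forward model, which guesses the next state from the current state and action. Transitions the model cannot yet predict are rewarded, and the bonus fades as they become familiar. The sketch below is a toy version under several assumptions: states are plain numbers, a lookup table replaces the learned network, and `learning_rate` and `scale` are illustrative parameters. The agent would then maximize the environment reward plus this bonus.

```python
from collections import defaultdict

class TabularCuriosity:
    """Hypothetical toy curiosity module: intrinsic reward = squared error of a forward model."""

    def __init__(self, learning_rate=0.5, scale=1.0):
        self.prediction = defaultdict(float)  # (state, action) -> predicted next state
        self.learning_rate = learning_rate
        self.scale = scale                    # weight of the intrinsic bonus

    def intrinsic_reward(self, state, action, next_state):
        """Return the surprise of this transition, then update the model toward what happened."""
        error = next_state - self.prediction[(state, action)]
        self.prediction[(state, action)] += self.learning_rate * error
        return self.scale * error ** 2
```

Repeating the same transition yields a shrinking bonus, while a state-action pair the model has never seen is surprising again.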

Hindsight Experience Replay

Hindsight experience replay is a training method in which the agent stores its previous failed attempts to complete a task and learns from them, rather than treating them only as a negative reward. Although a failed attempt did not reach the intended goal, it can serve as a lesson in how to achieve the outcome it actually reached.[11]
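The relabeling idea can be sketched using the "final" strategy from the cited paper. Under this strategy, each transition of a failed episode is stored a second time with the goal replaced by the state the agent actually reached at the end of the episode. The `Transition` fields, the integer states, and the sparse reward function `goal_reward` are assumptions made for illustration.

```python
from typing import Callable, List, NamedTuple

class Transition(NamedTuple):
    state: int
    action: int
    next_state: int
    goal: int
    reward: float

def goal_reward(next_state: int, goal: int) -> float:
    """Hypothetical sparse goal reward: 0 on reaching the goal, -1 otherwise."""
    return 0.0 if next_state == goal else -1.0

def her_relabel(episode: List[Transition],
                reward_fn: Callable[[int, int], float]) -> List[Transition]:
    """Return the original transitions plus copies whose goal is the state actually reached."""
    achieved = episode[-1].next_state  # the outcome the agent did reach
    relabeled = [t._replace(goal=achieved, reward=reward_fn(t.next_state, achieved))
                 for t in episode]
    return list(episode) + relabeled
```

An episode that aimed for state 5 but stopped at state 3 is kept as-is and also replayed as a successful attempt at reaching state 3, so its last step now carries a non-negative reward.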

Generalization

Deep reinforcement learning is well suited to generalization, the ability to use one machine learning model for multiple tasks.

Reinforcement learning models require a representation of the state in order to function. Artificial neural networks are good at extracting features from raw data (e.g. pixels or raw image files). When a network provides this state, there is less need to predefine the environment, which allows the model to be applied to multiple tasks. With this layer of abstraction, deep reinforcement learning algorithms can be designed so that the same model can be used for different tasks.[12]

References

- ^ a b Dong, Hao; Ding, Zihan; Zhang, Shanghang, eds. (2020). Deep Reinforcement Learning: Fundamentals, Research and Applications. Singapore: Springer. ISBN 978-981-15-4095-0. OCLC 1163522253.

- ^ Parisi, Simone; Tateo, Davide; Hensel, Maximilian; D'Eramo, Carlo; Peters, Jan; Pajarinen, Joni (2019-12-31). "Long-Term Visitation Value for Deep Exploration in Sparse Reward Reinforcement Learning". arXiv:2001.00119 [cs, stat].

- ^ "DeepMind - What if solving one problem could unlock solutions to thousands more?". DeepMind. Retrieved 2020-11-16.

- ^ Violante, Andre (2019-07-01). "Simple Reinforcement Learning: Q-learning". Medium. Retrieved 2020-11-16.

- ^ Ong, Hao Yi; Chavez, Kevin; Hong, Augustus (2015-10-15). "Distributed Deep Q-Learning". arXiv:1508.04186 [cs].

- ^ a b Voytenko, S. V.; Galazyuk, A. V. (February 2007). "Intracellular recording reveals temporal integration in inferior colliculus neurons of awake bats". Journal of Neurophysiology. 97 (2): 1368–1378. doi:10.1152/jn.00976.2006. ISSN 0022-3077. PMID 17135472.

- ^ Parisi, Simone; Tateo, Davide; Hensel, Maximilian; D'Eramo, Carlo; Peters, Jan; Pajarinen, Joni (2019-12-31). "Long-Term Visitation Value for Deep Exploration in Sparse Reward Reinforcement Learning". arXiv:2001.00119 [cs, stat].

- ^ Singh, Seema (2018-10-09). "Understanding the Bias-Variance Tradeoff". Medium. Retrieved 2020-11-16.

- ^ Wiewiora, Eric (2010), Sammut, Claude; Webb, Geoffrey I. (eds.), "Reward Shaping", Encyclopedia of Machine Learning, Boston, MA: Springer US, pp. 863–865, doi:10.1007/978-0-387-30164-8_731, ISBN 978-0-387-30164-8, retrieved 2020-11-16

- ^ Reizinger, Patrik; Szemenyei, Márton (2019-10-23). "Attention-based Curiosity-driven Exploration in Deep Reinforcement Learning". arXiv:1910.10840 [cs, stat].

- ^ Andrychowicz, Marcin; Wolski, Filip; Ray, Alex; Schneider, Jonas; Fong, Rachel; Welinder, Peter; McGrew, Bob; Tobin, Josh; Abbeel, Pieter; Zaremba, Wojciech (2018-02-23). "Hindsight Experience Replay". arXiv:1707.01495 [cs].

- ^ Packer, Charles; Gao, Katelyn; Kos, Jernej; Krähenbühl, Philipp; Koltun, Vladlen; Song, Dawn (2019-03-15). "Assessing Generalization in Deep Reinforcement Learning". arXiv:1810.12282 [cs, stat].