Wikipedia:Reference desk/Archives/Mathematics/2020 July 21


Welcome to the Wikipedia Mathematics Reference Desk Archives

The page you are currently viewing is a transcluded archive page. While you can leave answers for any questions shown below, please ask new questions on one of the current reference desk pages.

July 21

Belief update of partially observable Markov decision process (POMDP)

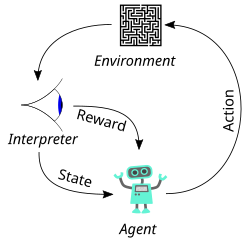

The belief update for a POMDP in the article states that maintaining a belief over the states solely requires knowledge of the previous belief state, the action taken, and the current observation. And the given formula to update the belief is:

$$b'(s') = \eta\, O(o \mid s', a) \sum_{s \in S} T(s' \mid s, a)\, b(s)$$

so how to figure out $T(s' \mid s, a)$, in particular when the agent cannot directly observe the underlying states $s$ and $s'$? - Justin545 (talk) 08:09, 21 July 2020 (UTC)
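A minimal Python sketch of the belief-update formula may help show what the bound variables do. $s'$ runs over every state in the outer loop and $s$ over every state in the inner sum, so no particular state ever has to be observed. The sketch assumes, as the POMDP model does, that the transition model $T$ and observation model $O$ are known. The function name `update_belief`, the representation of $T$ and $O$ as Python callables, and the two-state sensor example are hypothetical illustrations. The normalizer computed at the end is $\Pr(o \mid b, a)$, so $\eta = 1/\Pr(o \mid b, a)$.

```python
from typing import Callable, Dict, Hashable, Iterable

State = Hashable
Belief = Dict[State, float]


def update_belief(
    b: Belief,
    a: Hashable,
    o: Hashable,
    states: Iterable[State],
    T: Callable[[State, State, Hashable], float],
    O: Callable[[Hashable, State, Hashable], float],
) -> Belief:
    """Return the updated belief b' after taking action a and observing o.

    Assumption: T(s_next, s, a) returns Pr(s_next | s, a) and
    O(o, s_next, a) returns Pr(o | s_next, a); both models are known.
    """
    state_list = list(states)
    unnormalized: Belief = {}
    for s_next in state_list:  # s' is bound: it ranges over the whole state space
        # Prediction step: sum over the bound summation index s.
        predicted = sum(T(s_next, s, a) * b[s] for s in state_list)
        # Correction step: weight by the likelihood of the actual observation.
        unnormalized[s_next] = O(o, s_next, a) * predicted
    total = sum(unnormalized.values())  # Pr(o | b, a), i.e. 1 / eta
    if total == 0:
        raise ValueError("observation has zero probability under this belief and action")
    return {s: w / total for s, w in unnormalized.items()}


if __name__ == "__main__":
    # Hypothetical two-state example: the state never changes, and a noisy
    # sensor reports the true state with probability 0.85.
    def stay_put(s_next, s, a):
        return 1.0 if s_next == s else 0.0

    def noisy_sensor(o, s_next, a):
        return 0.85 if o == s_next else 0.15

    belief = {"left": 0.5, "right": 0.5}
    for _ in range(2):
        belief = update_belief(belief, "listen", "left", ["left", "right"], stay_put, noisy_sensor)
        print(belief)
```

Each repeated "left" reading shifts more probability onto the "left" state, even though the agent never observes the state directly; only the known models and the observation enter the computation.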

- The variables $s'$ and $s$ are bound variables, both ranging over the whole state space $S$. The first is bound to the argument of $b'$, the second to the summation index. --Lambiam 12:23, 21 July 2020 (UTC)

- And the conditional transition probabilities of the process given by $T$ are assumed to be known. --Lambiam 14:27, 21 July 2020 (UTC)

- It was my oversight. Thanks for reminding me that $T$ and $O$ are to some extent known. Are they assumed to be known because they are also a kind of belief that could be randomly initialized and repeatedly improved by some update? - Justin545 (talk) 02:19, 24 July 2020 (UTC)

- In the belief-update step, the parameters determining the process – with the exception of the reward function $R$, which is irrelevant here – are assumed to be known. The model itself is agnostic on the issue of where this knowledge stems from. It could be a priori knowledge. Given enough runs, it may be possible to compute estimates of a priori unknown parameters from the partial observations, such as by Bayesian inference, but that is an entirely different problem. --Lambiam 06:19, 24 July 2020 (UTC)


- I suppose that the agent ought to have no idea about $T$ and $O$ when solving the POMDP, in order to make the POMDP a useful model for many (probably reinforcement learning) applications. I'm afraid I don't see the difference between an ordinary MDP and a POMDP if $T$ and $O$ are both known to the agent (either a priori or a posteriori) while it's solving a POMDP... Among other things, I thought the upside of studying POMDPs is the partial observability they introduce (the agent cannot directly observe the underlying state), isn't it? Imagine an agent trying to win a poker game: $T$ and $O$ should both be unknown to the agent, if I understand correctly. - Justin545 (talk) 08:58, 24 July 2020 (UTC)

- Like all models, this one has a limited applicability. Note that there is only one agent, and the purpose is to determine the optimal action (or sequence of actions) to take in the face of uncertainty. Think of a doctor treating a patient with an uncertain diagnosis, having to choose from a variety of treatment options, each with uncertain effectiveness. I hope that this doctor does not view the problem as an exercise in reinforcement learning. You can make the model as complicated as you wish, with multiple agents that may also be adversarial, while also turning it into an estimation problem. Then it becomes something else, with a theoretically wider applicability but perhaps practically unmanageable. --Lambiam 10:46, 24 July 2020 (UTC)


- If there existed a model for artificial general intelligence (AGI), its applicability might be unlimited. Unfortunately, $T$ and $O$ are assumed to be known in a POMDP (a big flaw?), so in my opinion it's unlikely to be a model for AGI. - Justin545 (talk) 10:10, 26 July 2020 (UTC)

- Brute-force algorithms like genetic programming (a variation on the genetic algorithm) might be general-purpose. But their resource usage (time and/or CPU) is enormous (we need quantum computers!), which makes them somewhat infeasible as a route to AGI (and the algorithms may also produce dangerous results). - Justin545 (talk) 10:28, 26 July 2020 (UTC)

- Take the Monty Hall problem. If the three doors are transparent, this can be solved as a trivial MDP, since the contestant can observe the state. Every MDP is (trivially) also a POMDP. With opaque doors, it can be modeled as a (very simple) POMDP with the same state space as before, but this is a different process than the MDP cast as a POMDP. As the Belief MDP section of our article shows, any POMDP can be modeled as an MDP, but not with the same state space as before. So while we have mappings between these frameworks, they are not isomorphisms. --Lambiam 08:18, 26 July 2020 (UTC)
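The opaque-door Monty Hall POMDP can be made concrete with a small Python sketch, assuming the usual rules: the host never opens the contestant's door or the car's door, and picks uniformly at random when both remaining doors are empty. The hidden state is the car's location and the observation is the door the host opens. Because the car never moves, the transition step is the identity and only the observation likelihood reshapes the belief. The function names `host_opens_prob` and `monty_hall_belief` are hypothetical, and exact fractions are used so the result can be read off directly.

```python
from fractions import Fraction

DOORS = (0, 1, 2)


def host_opens_prob(opened: int, car: int, picked: int) -> Fraction:
    """Pr(host opens `opened` | car behind `car`, contestant picked `picked`).

    Assumes the standard rules: the host never opens the picked door or the
    car's door, and chooses uniformly when two doors qualify.
    """
    allowed = [d for d in DOORS if d != picked and d != car]
    return Fraction(1, len(allowed)) if opened in allowed else Fraction(0)


def monty_hall_belief(picked: int, opened: int) -> dict:
    """Belief over the car's location after the host opens door `opened`."""
    prior = {car: Fraction(1, 3) for car in DOORS}  # opaque doors: uniform belief
    # Identity transition (the car stays put), so only the observation
    # likelihood multiplies the prior.
    unnormalized = {car: host_opens_prob(opened, car, picked) * p for car, p in prior.items()}
    total = sum(unnormalized.values())  # Pr(host opens `opened`)
    if total == 0:
        raise ValueError("the host cannot open that door under the assumed rules")
    return {car: w / total for car, w in unnormalized.items()}


if __name__ == "__main__":
    belief = monty_hall_belief(picked=0, opened=2)
    for door in DOORS:
        print(door, belief[door])
```

With the contestant on door 0 and the host opening door 2, the belief becomes 1/3 on door 0, 2/3 on door 1 and 0 on door 2. That probability vector is itself a state of the corresponding belief MDP, which illustrates why the belief MDP needs a different (continuous) state space than the three-state POMDP.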