Wikipedia:Reference desk/Archives/Mathematics/2008 February 20



February 20

Zeros of a periodic function

Is there any way to find an $x$ which solves the equation

$$\cos(ax) + \cos(bx) = -2$$

for any arbitrary $a$ and $b$? I know that some value of $x$ will make both $ax$ and $bx$ equal to an odd multiple of $\pi$, but I can't think of a way to find this value. I know that the answer may lie in recasting the equation in the form

$$\cos\left(\frac{(a+b)x}{2}\right)\cos\left(\frac{(a-b)x}{2}\right) = -1,$$

and in this case I know that some value of $x$ makes this product of cosine terms equal to negative one, but again I can't think of how to find this $x$ value. Can this be solved analytically, or must I resort to numerical calculation (which would be very unsatisfying)? Thank you very much for your assistance. —Preceding unsigned comment added by 128.223.131.21 (talk) 00:21, 20 February 2008 (UTC)

- If the combined function is periodic, then a/b is a rational number, so after rescaling x, you can assume a and b are relatively prime integers. Then I believe x=pi is a solution if both a,b are odd, and there is no solution if one of a or b is even. JackSchmidt (talk) 01:16, 20 February 2008 (UTC)

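- To explain JackSchmidt's answer a bit: since $\cos\theta \ge -1$ (for real $\theta$), the only way to have $\cos(ax) + \cos(bx) = -2$ is to have $\cos(ax) = \cos(bx) = -1$. So $ax = (2m+1)\pi$ and $bx = (2n+1)\pi$ for some integers $m$ and $n$, so $a/b = (2m+1)/(2n+1)$, and the simplest form of $a/b$ must have both numbers odd. When that condition is satisfied, there is some smallest integer ; k is the common denominator for a and b. Any  with integer l solves the equation. --Tardis (talk) 16:20, 20 February 2008 (UTC)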
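A minimal Python sketch of the odd/odd criterion, assuming $a$ and $b$ are rational. After the rescaling JackSchmidt describes, that is what periodicity gives you. The function name `smallest_solution_over_pi` is hypothetical. It returns the smallest $t > 0$ such that $x = t\pi$ solves $\cos(ax) + \cos(bx) = -2$, or `None` when $a/b$ in lowest terms is not odd/odd.

```python
from fractions import Fraction
import math


def smallest_solution_over_pi(a, b):
    """Smallest t > 0 with cos(a*t*pi) + cos(b*t*pi) == -2, or None.

    Assumes a and b are rational (int or Fraction). A solution needs
    a*x and b*x to be odd multiples of pi at the same time.
    """
    a, b = Fraction(a), Fraction(b)
    if a == 0 or b == 0:
        return None  # cos(0) = 1, so the sum can never reach -2
    ratio = a / b  # Fraction stores this in lowest terms p/q
    p, q = ratio.numerator, ratio.denominator
    if p % 2 == 0 or q % 2 == 0:
        return None  # one of p, q is even: no solution
    # With t = p/a we get a*t = p and b*t = q, both odd. Every solution
    # is an odd multiple of this t, so |p/a| is the smallest positive one.
    return abs(p / a)


# Usage: check each returned x numerically.
for a, b in [(1, 3), (2, 6), (1, 2), (Fraction(3, 2), Fraction(5, 2))]:
    t = smallest_solution_over_pi(a, b)
    if t is None:
        print(a, b, "no solution")
    else:
        x = float(t) * math.pi
        print(a, b, "x =", t, "* pi; cos(ax) + cos(bx) =",
              math.cos(float(a) * x) + math.cos(float(b) * x))
```

With $a, b$ coprime odd integers this returns 1, i.e. $x = \pi$, matching JackSchmidt's answer.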

Donald in Mathmagic Land

Two questions about the above-named 1959 Walt Disney film. (Question 1) In the film, a character incorrectly states the value of pi. Does anyone know how a Disney film that was designed to be a mathematics educational film for children (and presumably had math consultants working on it) could possibly contain such an error? How would that error slip by unnoticed? (Question 2) With regard to that error, someone stated (in a blog) that it was not really an error, since "the value of pi has changed so much since 1959". That cannot possibly be true, can it? I am sure that we have become enlightened about the accurate values of many digits of pi over the course of thousands of years of history ... but not since 1959, correct? Any ideas? Thanks. (Joseph A. Spadaro (talk) 04:10, 20 February 2008 (UTC))

- Pi is an irrational number, meaning that its decimal representation has an infinite number of digits. Since we can never calculate infinitely many digits, new digits have certainly been calculated since 1959. Of course this does not mean that the value of pi has changed, just the precision with which one can write it. Perhaps this is what 'someone' was referring to? Any errors in the calculation of digits of pi since 1959 would, I suspect, occur at least several thousand (being conservative here) digits out, so it would in fact be an error. I have no input on how the error actually occurred. —Preceding unsigned comment added by 74.242.224.136 (talk) 04:55, 20 February 2008 (UTC)

- The error could very well have been caused by something as simple as the voice actor getting lost while reading the digits. —Bkell (talk) 05:00, 20 February 2008 (UTC)

Thanks. Let me follow up. I should have made this clearer. (Though, remember, we are dealing with a Disney children's video.) First ... the value of pi was recited to, perhaps, 15 or 20 decimal places ... certainly no more than 20. So, since 1959, nothing has "changed" in that early chunk of the number, correct? Second ... regarding the voice actor flub. Certainly, that is a possibility. I guess my point was ... is that not an "inexcusable" error (one that would call for a retake ... or, at the very least, a dubbed edit in post-production), given that it is an educational video, and a math one to boot ( ... as opposed to, say, some character misstating the digits of pi in an inconsequential TV episode of Seinfeld) ...? Thanks. (Joseph A. Spadaro (talk) 05:16, 20 February 2008 (UTC))

- Pi was known to 527 digits by the end of the 19th century, and to 2037 digits by 1949 (a decade earlier than the film was made). Our article on Donald in Mathmagic Land says that the bird only recites "the first few digits" of pi. The error was not due to pi not being known accurately enough in 1959. MrRedact (talk) 05:25, 20 February 2008 (UTC)

- Probably the producers didn't consider it important. If the voice actor flubbed, either no one noticed or no one thought it was worth it to rerecord the line just to be pedantic. The character reciting pi is basically a throw-away gag anyway. They got the first few digits right (out to maybe eight or ten places, if I remember correctly), and that's certainly more than anyone really needs. The purpose of the film is not to teach children the digits of pi but to introduce them to some general mathematical concepts. —Bkell (talk) 06:12, 20 February 2008 (UTC)

Yes, I agree with all that is said above. However, as an education video --- I am not sure that being "pedantic" is a bad thing ... quite the contrary, I'd assume. I will also assume that Disney's multi-zillion dollar fortune could easily afford any "expensive" retakes. Finally, mathematicians are nothing, if not precise. I can't imagine a mathematician letting that glaring error slide. A film producer, yes ... a mathematician, nah. (Joseph A. Spadaro (talk) 06:56, 20 February 2008 (UTC))

- I agree completely. This is just a symptom of a much more general problem. Very few people care about spreading misinformation, and very many then wonder how come people are so misinformed. -- Meni Rosenfeld (talk) 10:45, 20 February 2008 (UTC)

- What a succinct -- yet woefully accurate -- synopsis, Meni. Bravo! (Joseph A. Spadaro (talk) 16:20, 20 February 2008 (UTC))

- As Bertrand Russell appears to have written: 'PEDANT—A man who likes his statements to be true.' Algebraist 10:55, 21 February 2008 (UTC)

Thanks for all the input. Much appreciated. (Joseph A. Spadaro (talk) 01:05, 24 February 2008 (UTC))

Predicate logic

I have to show, using a transformational proof (logical equivalence and rules of inference), that the following two premises are a contradiction:

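- 1. $\neg\exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\exists y\,P(y))]$

- 2. $\exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\forall y\,P(y))]$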

Now these two statements look quite similar because they were actually two more complex statements I tried to "whittle down" into a similar form in order to see where I could take it. They almost contradict one another, apart from the $\exists y\,P(y)$ part in 1. versus the $\forall y\,P(y)$ part in 2.

Can you offer me a suggestion for manipulating these statements further to show a clear contradiction? My first line of thought would be to show that the conjunction, $(1)\land(2)$, of the two statements is false, but I get bogged down in symbols. Perhaps there is a more elegant way using inference rules; I'm not sure. Damien Karras (talk) 13:39, 20 February 2008 (UTC)

- Start by consulting De Morgan's laws, including the ones for quantifiers, if you're not familiar with them. Then you should be able to make use of the fact that . Also, it may not be strictly necessary, but consider what it means to say that . --Tardis (talk) 16:34, 20 February 2008 (UTC)

- The two premises are contradictory if (and only if) (2) implies the negation of (1). You will have to use somehow, directly or indirectly, a rule that says that $\forall y\,P(y)$ implies $\exists y\,P(y)$. If you have a logic in which the domain of predicate P can be empty, so that this implication does not hold, while the domain of the predicates Q and R, from which variable x assumes its values, is not necessarily empty, the two premises are not contradictory: take for Q the constant always-true predicate and for R the always-false predicate; the premises then simplify to $\neg\exists y\,P(y)$ and $\forall y\,P(y)$. I don't know the precise rules you are allowed to use, but the logical connectives $\land$ and $\lor$, as well as existential quantification, are all "monotonic" with respect to implication, so that

$$\exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\forall y\,P(y))]\ \rightarrow\ \exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\exists y\,P(y))]$$

is a consequence of

$$\forall y\,P(y)\ \rightarrow\ \exists y\,P(y).$$

--Lambiam 09:58, 21 February 2008 (UTC)
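Lambiam's monotonicity argument can be written out as a short derivation. This is a sketch that assumes the domain of P is nonempty (line 3 is where that assumption is used). The step justifications are illustrative and may not match the named rules the course requires.

```latex
\begin{align*}
&(1)\quad \neg\exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\exists y\,P(y))] && \text{premise}\\
&(2)\quad \exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\forall y\,P(y))] && \text{premise}\\
&(3)\quad \forall y\,P(y)\rightarrow\exists y\,P(y) && \text{valid when the domain of } P \text{ is nonempty}\\
&(4)\quad \exists x\,[(Q(x)\land R(x))\lor(Q(x)\land\exists y\,P(y))] && \text{from (2), (3): } \land,\ \lor,\ \exists \text{ are monotonic}\\
&(5)\quad \bot && \text{(4) is the negation of (1)}
\end{align*}
```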


- Thanks both, and let it be known that P, Q, and R share the same domain. Lambiam, I assume what you're saying is that if I prove $\forall y\,P(y)\rightarrow\exists y\,P(y)$, I can show the two statements are a clear contradiction? Damien Karras (talk) 13:58, 21 February 2008 (UTC)

- I still don't understand what I have to do. If in one statement and in another, doesn't that show that the two sides are dependent on and . I just don't get this at all. If is true, then so is the existential quantification. If it is false then the existential quant. is still true? "It is not the case that, for ALL y, P(y) is true - however there is a y for which P(y) IS true." Damien Karras (talk) 15:31, 21 February 2008 (UTC)

- Sorry, but as I wrote, I don't know the precise rules you are allowed to use, so I am not sure what steps you can take. If the domain of $P$ is not empty, and $\forall y\,P(y)$ is true, then so is $\exists y\,P(y)$. The latter can be true while the former is false, but it is also possible that both are false. This is summed up in the statement that $\forall y\,P(y)\rightarrow\exists y\,P(y)$, which is true for nonempty domains. The contradiction should then follow from this. --Lambiam 20:02, 24 February 2008 (UTC)
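A brute-force sanity check of Lambiam's point, assuming small finite domains and predicates given as truth tables. This is not a transformational proof. It only searches for models that satisfy both premises at once. The helper names `premises` and `joint_models` are hypothetical.

```python
from itertools import product


def premises(Q, R, P):
    """Truth values of premises (1) and (2).

    Q and R are truth tables (tuples of bools) over the domain of x; P is a
    truth table over the domain of y, which may be empty.
    """
    exists_P = any(P)  # any(()) is False
    forall_P = all(P)  # all(()) is True: vacuously true on an empty domain
    xs = range(len(Q))
    p1 = not any((Q[i] and R[i]) or (Q[i] and exists_P) for i in xs)
    p2 = any((Q[i] and R[i]) or (Q[i] and forall_P) for i in xs)
    return p1, p2


def joint_models(nx, ny):
    """Every (Q, R, P) on domains of sizes nx and ny making both premises true."""
    bools = (False, True)
    found = []
    for Q in product(bools, repeat=nx):
        for R in product(bools, repeat=nx):
            for P in product(bools, repeat=ny):
                if all(premises(Q, R, P)):
                    found.append((Q, R, P))
    return found


print(joint_models(2, 2))  # → []
print(joint_models(1, 0))  # → [((True,), (False,), ())]
```

The second result is Lambiam's countermodel: Q always true, R always false, and an empty domain for P. If P, Q and R share one domain, as Damien Karras specifies, an empty domain also makes (2) false. So the premises are contradictory in every case.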

There are no countably infinite σ-algebras

Hoping someone can help me spot the holes in my argument. ;)

Suppose $\Sigma$ is a countably infinite σ-algebra over the set X. If we can show that $\Sigma$ contains an infinite sequence of sets $A_1 \subsetneq A_2 \subsetneq \cdots$ or $A_1 \supsetneq A_2 \supsetneq \cdots$, then the sequence of differences $A_{n+1} \setminus A_n$ or $A_n \setminus A_{n+1}$ will be a countably infinite collection of pairwise disjoint nonempty sets $B_n$, and the map $S \mapsto \bigcup_{n \in S} B_n$ will be an injection from the power set of N into $\Sigma$.

Let $\Sigma$ be partially ordered under inclusion. We may assume that $\Sigma$ contains no infinite ascending chain $A_1 \subsetneq A_2 \subsetneq \cdots$, for if it does, this gives us what we wanted.

The set  has a maximal element $F_1$ by Zorn's lemma. It follows that the only elements of  are subsets of $F_1$ and their complements; in particular, this set contains infinitely many subsets of $F_1$. The collection of these subsets cannot contain an infinite ascending chain, so it has a maximal element $F_2$. It follows that the only elements of  are subsets of $F_2$ and their complements relative to $F_1$. Proceeding in this way yields an infinite descending chain $F_1 \supsetneq F_2 \supsetneq \cdots$. — merge 16:36, 20 February 2008 (UTC)

- I think you have confused maximal element with greatest element. -- Meni Rosenfeld (talk) 19:46, 20 February 2008 (UTC)

Meni's right -- your last "it follows that" is wrong. The result is right, though. Hint: You don't need Zorn's lemma; the hypothesis that the sigma-algebra is countably infinite already gives you a bijection between the sigma-algebra and the natural numbers. Do induction along that. --Trovatore (talk) 20:08, 20 February 2008 (UTC)

- I had confused myself, although not about that--sorry! I'll definitely try what you said, but I've also tried to fix the above--any better? — merge 21:08, 20 February 2008 (UTC)

Revised version at σ-algebra redux below. — merge 13:26, 21 February 2008 (UTC)
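The injectivity claim in the opening argument can be checked directly. Below is a short sketch, writing $\Sigma$ for the σ-algebra and assuming the $B_n$ are nonempty, pairwise disjoint members of $\Sigma$. The strict inclusions in the chain are what make the differences nonempty.

```latex
\begin{align*}
&\text{Let } B_1, B_2, \ldots \in \Sigma \text{ be nonempty and pairwise disjoint; set } \Phi(S) = \textstyle\bigcup_{n \in S} B_n \text{ for } S \subseteq \mathbb{N}.\\
&\Phi(S) \in \Sigma, \text{ since a } \sigma\text{-algebra is closed under countable unions.}\\
&\text{If } S \neq T, \text{ pick } n \in S \setminus T \text{ (or swap } S, T\text{). Then } \varnothing \neq B_n \subseteq \Phi(S) \text{ and } B_n \cap \Phi(T) = \varnothing,\\
&\text{so } \Phi(S) \neq \Phi(T). \text{ Hence } |\Sigma| \ge |\mathcal{P}(\mathbb{N})| = 2^{\aleph_0} > \aleph_0.
\end{align*}
```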

Summing 1^1 + 2^2 + 3^3 + ... + 1000^1000

I'm trying to improve my mathematics skills by doing the problems at Project Euler, but have hit a stumbling block with problem 48 (http://projecteuler.net/index.php?section=problems&id=48).

I need to find the last 10 digits of 1^1 + 2^2 + 3^3 + ... + 1000^1000.

I've read the Wikipedia page on Geometric_progression, but I want to do $\sum_{n=1}^{1000} n^n$ rather than $\sum_{n} r^n$ (i.e. I don't have a constant value r).

I'm not so much looking for an answer, just what techniques or terminology I should be reading up on. Pointers welcomed!

Lordelph (talk) 16:56, 20 February 2008 (UTC)

- The key point is that you're looking for the last 10 digits, not the whole number (which is enormous). Think about which numbers contribute what to the last ten digits. --Tango (talk) 17:14, 20 February 2008 (UTC)

- I don't think there is a mathematical shortcut for this. But Project Euler is as much about programming as it is about mathematics, so you could take a brute force approach. To calculate the last 10 digits of 999^999, for example, start with 999; multiply it by 999; keep just the last 10 digits; repeat another 997 times and you are done. You only need to work with interim results of up to 13 digits, so it's not even a bignum problem. I guess you end up doing around half a million integer multiplications altogether, but I don't think that will take very long. In the time it's taken me to write this, someone has probably already programmed it. Gandalf61 (talk) 17:27, 20 February 2008 (UTC)
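Gandalf61's repeated-multiplication approach might look like this in Python. It is a sketch, and the function names are hypothetical. Reducing modulo $10^{10}$ after every multiplication keeps only the last ten digits. For the whole sum this does $\sum_{n=1}^{1000}(n-1) = 499500$ multiplications, which matches the "around half a million" estimate.

```python
def last_digits_of_power(base, exp, digits=10):
    """Last `digits` digits of base**exp by repeated multiplication (exp >= 1)."""
    mod = 10 ** digits
    result = base % mod
    for _ in range(exp - 1):
        # keep only the last `digits` digits after every step; for base <= 1000
        # the product before reduction has at most 13 digits
        result = result * base % mod
    return result


def self_power_sum_last_digits(limit, digits=10):
    """Last `digits` digits of 1**1 + 2**2 + ... + limit**limit."""
    mod = 10 ** digits
    return sum(last_digits_of_power(n, n, digits) for n in range(1, limit + 1)) % mod


print(self_power_sum_last_digits(10))  # → 405071317 (1^1 + ... + 10^10 = 10405071317)
```

The result is an integer, so a leading zero among the last ten digits does not appear in the printed value.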

- Actually even the ultimate brute force approach of computing the whole 3001-digit sum will be more than fast enough on a modern computer. My 2004 laptop took a negligible fraction of a second to evaluate `sum [n^n | n <- [1..1000]]` in GHCi. They should've gone up to 1000000^1000000. -- BenRG (talk) 19:24, 20 February 2008 (UTC)

- I've seen similar problems where there has been a shortcut, but I can't spot one for this problem. It's a trivial programming exercise, so I can't see why they would have set it. I can see a few possible ways to optimise the code, but there's really no need. For example, 999^999 = (990+9)^999; expand that out using the binomial expansion and each term will have a 990^n in it, which has all 0's for the last ten digits for all but the first 10 terms, so you can ignore all but those terms. That's probably not much quicker for 999^999 (you need to calculate the coefficients, which won't help), but it could be for 999999^999999. --Tango (talk) 19:52, 20 February 2008 (UTC)
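Tango's binomial shortcut can be checked with a short Python sketch (the function name is hypothetical, and `math.comb` needs Python 3.8+). Term $k$ of $(990+9)^{999}$ is $\binom{999}{k}\,990^k\,9^{999-k}$. Since $10^k$ divides $990^k$, every term with $k \ge 10$ vanishes modulo $10^{10}$.

```python
from math import comb


def last_digits_999_pow_999(digits=10):
    """Last `digits` digits of 999**999 via (990 + 9)**999.

    Term k of the binomial expansion is comb(999, k) * 990**k * 9**(999 - k).
    990**k is divisible by 10**k, so every term with k >= digits vanishes
    modulo 10**digits and only the first `digits` terms are kept.
    """
    mod = 10 ** digits
    total = 0
    for k in range(digits):
        total += comb(999, k) * 990 ** k * 9 ** (999 - k)
    return total % mod


print(last_digits_999_pow_999() == pow(999, 999, 10**10))  # → True
```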

- Many thanks for pointers, problem solved Lordelph (talk) 20:37, 20 February 2008 (UTC)


- On a slow computational platform, this can be somewhat sped up as follows. The recursive algorithm for exponentiation by squaring works in any ring, including the ring Z/nZ of modular arithmetic. So in computing $n^n$, all computations can be done modulo $10^{10}$. And if n is a multiple of 10, $n^n$ reduces to 0 modulo $10^{10}$, so 10% of the terms can be skipped. —Preceding unsigned comment added by Lambiam (talk • contribs) 11:13, 21 February 2008 (UTC)
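Lambiam's suggestion can be sketched in Python with an iterative (right-to-left binary) form of exponentiation by squaring, done entirely modulo $10^{10}$. The function names are hypothetical. Python's built-in three-argument `pow(base, exp, mod)` already performs modular exponentiation, and the tests use it as a cross-check.

```python
def pow_mod(base, exp, mod):
    """Exponentiation by squaring in Z/modZ (right-to-left binary method)."""
    result = 1 % mod
    base %= mod
    while exp > 0:
        if exp & 1:                      # current low bit of exp is set
            result = result * base % mod
        base = base * base % mod         # square for the next bit
        exp >>= 1
    return result


def self_power_sum_mod(limit, digits=10):
    """(1**1 + 2**2 + ... + limit**limit) mod 10**digits."""
    mod = 10 ** digits
    total = 0
    for n in range(1, limit + 1):
        if n % 10 == 0 and n >= digits:
            continue  # 10**n divides n**n, and 10**digits divides 10**n
        total = (total + pow_mod(n, n, mod)) % mod
    return total


print(self_power_sum_mod(10))  # → 405071317
```

Each term costs about $2\log_2 n$ modular multiplications instead of $n - 1$. That matters far more for 1000000^1000000 than for this problem.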

Resistance

These are more questions of physics than maths, but considering the overlap between the two, and how this ref desk is much more specific than the science one, I'll ask them here.

I know that the formula for the overall resistance of two resistors in parallel is $\frac{1}{R} = \frac{1}{R_1} + \frac{1}{R_2}$. So if you have two fifty ohm resistors in parallel, the total resistance is 25 ohms. What I don't understand is how the total resistance can be less than that of either resistor; it doesn't make any sense to me.

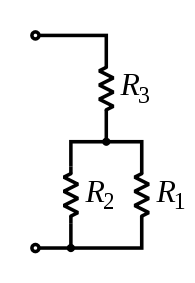

Also, if you have the pictured setup but R1 is just a wire, how would you calculate the total resistance of the circuit, ignoring internal resistance?

Many thanks, 92.1.70.98 (talk) 18:07, 20 February 2008 (UTC)

- It's actually very simple intuitively. Resistance measures how hard it is for electrons to move from one place to another. If there are two resistors in parallel, the electrons have 2 ways to choose from, thus travel is easier. The analogy is a mass of people trying to cross a narrow corridor - they will have a much easier time if there are two parallel corridors.

- Any resistor network can be solved using Ohm's law ($V = IR$) and Kirchhoff's current law (the sum of currents at every node is 0). This one is simpler because it's just two resistors in series, where the second is composed of two resistors in parallel. Thus the equivalent resistance is $R_3 + \frac{R_1 R_2}{R_1 + R_2}$.

- There are many Wikipedia articles from which you can learn more about this - Resistor might be a good start. -- Meni Rosenfeld (talk) 18:35, 20 February 2008 (UTC)

- Well, Meni beat me to it. But here it is. First of all, the total resistance decreases when you are talking about two resistors in PARALLEL, not in series. In series, you get exactly what you think you should get: the total resistance is just the sum. In parallel, you can think of it like this: you have two branches, so the current going in splits up between the branches, and the energy loss in each branch is smaller than it would otherwise have been. But then the current adds up again when the branches meet. In series, the entire current has to go through both of the resistors, so the loss is greater. As for the picture, first you calculate the combined resistance of R1 and R2 as resistors in parallel (call it R12), which gives you $R_{12} = \frac{R_1 R_2}{R_1 + R_2}$, and then you simply treat R3 as a resistor in series with the combined resistor R12, and you get that the total resistance of the circuit is $R_{12} + R_3$. A Real Kaiser (talk) 18:39, 20 February 2008 (UTC)

- OK, the explanation for why the resistance is less in parallel is helpful, but I think you both missed the bit about R1 being just a wire, not a resistor (it was the best picture I could find lying around on Wikipedia). Using the equation for resistors in parallel would make the resistance of the parallel section 0 (assuming that a wire has no resistance). I would interpret this as meaning that all current goes through the wire and none goes through the resistor. Is that right? Thanks 92.1.70.98 (talk) 18:45, 20 February 2008 (UTC)

- That would be an idealised situation. Nothing really has 0 resistance, so R1 would be very small, but it would be non-zero. This would mean that the total resistance over R1 and R2 was almost 0, since R2's contribution would be negligible. -mattbuck (Talk) 19:24, 20 February 2008 (UTC)

- Still, in the limit where $R_1 \to 0$, there will be no current going through R2 (this has nothing to do with interpretation; it's just a calculation). Assuming we want the current to go through R2, the extra wire is a short circuit. -- Meni Rosenfeld (talk) 19:32, 20 February 2008 (UTC)
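The series/parallel rules and the current-divider calculation behind Meni's limit can be sketched in Python. The sketch assumes the arrangement described in the replies, with R1 in parallel with R2 and that pair in series with R3. The values R2 = R3 = 50 ohms are hypothetical. R1 = 0 exactly would raise `ZeroDivisionError` in `parallel`, so the loop only lets R1 shrink towards zero.

```python
def parallel(*resistances):
    """Equivalent resistance of resistors in parallel: 1/R = 1/R1 + 1/R2 + ..."""
    return 1 / sum(1 / r for r in resistances)


def series(*resistances):
    """Equivalent resistance of resistors in series: R = R1 + R2 + ..."""
    return sum(resistances)


def circuit(r1, r2, r3):
    """R1 in parallel with R2, and that pair in series with R3.

    Returns (equivalent resistance, fraction of the total current through R2).
    """
    equivalent = series(parallel(r1, r2), r3)
    share_r2 = r1 / (r1 + r2)  # current divider: I2 / I = R1 / (R1 + R2)
    return equivalent, share_r2


# Hypothetical values: R2 = R3 = 50 ohms, with R1 shrinking towards a bare wire.
for r1 in (50, 1, 1e-3, 1e-9):
    eq, share = circuit(r1, 50, 50)
    print(f"R1 = {r1:g} ohm: total = {eq:.6f} ohm, share through R2 = {share:.2e}")
```

As R1 shrinks, the total tends to R3 alone and the share of current through R2 tends to zero.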


Random calculus question

A friend of mine asked me to help him solve the following question, and I'm not a math major by any means. So, any help would be appreciated. :) Zidel333 (talk) 19:40, 20 February 2008 (UTC)

- $\left(1 + \frac{1}{N}\right)^{N + 1/2}$: prove that it is always increasing from zero to infinity.

- It will help to try to prove something that is true: that function is always decreasing for $N > 0$. Its limit at large N is e, as can be seen immediately from that article. The normal procedure is to evaluate the function's derivative and show that it is negative for all positive N. However, the algebra involved is non-trivial; perhaps some information is missing? --Tardis (talk) 20:07, 20 February 2008 (UTC)
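A quick numerical check of Tardis's claim for integer N from 1 to 2000 is sketched below. It is evidence, not a proof. The function `f` is a hypothetical name. It evaluates the sequence in the form Taraborn later suggests, $\exp[(N+1/2)\log(1+1/N)]$, and uses `math.log1p` to keep precision for large N.

```python
import math


def f(n):
    """(1 + 1/n)**(n + 1/2), computed as exp((n + 1/2) * log(1 + 1/n))."""
    return math.exp((n + 0.5) * math.log1p(1 / n))


values = [f(n) for n in range(1, 2001)]
print(values[:3])                                      # first few terms
print(all(b < a for a, b in zip(values, values[1:])))  # strictly decreasing?
print(values[-1] - math.e)                             # still slightly above e
```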

- I'm sorry, that's all the info I have from my friend. If he tells me more, I'll add it to the discussion. Thanks for your help so far, far better than what I could have come up with. :) Zidel333 (talk) 20:12, 20 February 2008 (UTC)

- As it's "N" not "x", I would assume N is ranging only over the integers, so taking the derivative isn't necessary. You can either show that f(n+1)-f(n)<0 or f(n+1)/f(n)<1 for all n. The latter is probably easier. --Tango (talk) 22:22, 20 February 2008 (UTC)

- Hint: Perhaps rewriting the sequence as exp[(N+1/2)*log(1+1/N)] can help you (sorry, I'm not comfortable with LaTeX). --Taraborn (talk) 10:33, 21 February 2008 (UTC)
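Following Taraborn's hint, one way to show the sequence decreases is to prove it for real $x > 0$, which covers integer $N$ as well. This sketch was added for reference and does not come from the thread. It compares two power series in $t = 1/(2x+1)$.

```latex
\begin{align*}
g(x) &= \left(x + \tfrac12\right)\ln\!\left(1 + \tfrac1x\right), \qquad
g'(x) = \ln\!\left(1 + \tfrac1x\right) - \frac{2x+1}{2x(x+1)}.\\
\text{With } t &= \frac{1}{2x+1} \in (0,1):\quad 1 + \frac1x = \frac{1+t}{1-t}, \qquad \frac{2x+1}{2x(x+1)} = \frac{2t}{1-t^2}.\\
\ln\frac{1+t}{1-t} &= 2\sum_{k\ge 0}\frac{t^{2k+1}}{2k+1} \;<\; 2\sum_{k\ge 0} t^{2k+1} = \frac{2t}{1-t^2}.\\
\text{So } g'(x) &< 0 \text{ for } x > 0, \text{ and } \left(1+\tfrac1N\right)^{N+1/2} = e^{g(N)} \text{ is strictly decreasing.}
\end{align*}
```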

Sum

How would you work out, for j and k ranging over the integers, the sum of (j+k)^-2? Black Carrot (talk) 20:36, 20 February 2008 (UTC)

- Well, the term for j=k=0 is going to be a problem :-) --Trovatore (talk) 20:50, 20 February 2008 (UTC)

- But assuming you really meant the positive integers, the sum diverges. For a fixed j, the sum over all k>0 of $(j+k)^{-2}$ is Ω(1/j). --Trovatore (talk) 20:53, 20 February 2008 (UTC)

- (Edit conflict) Assuming you mean positive integers, since all integers doesn't make sense, as Trovatore points out, observe that for a fixed j, and varying k, j+k varies over all the integers, so

- Now, the inner sum is clearly greater than 0, and summing an infinite number of non-zero constants gives you infinity (or, strictly speaking "doesn't converge"). So, unless I've missed something, the series doesn't converge. --Tango (talk) 21:00, 20 February 2008 (UTC)

- Correction: For fixed j, varying k, j+k varies over all integers greater than j, I was thinking of k varying over all integers, rather than just positive ones... it doesn't change the conclusion, though - the series still diverges. --Tango (talk) 22:19, 20 February 2008 (UTC)

- The statement that "summing an infinite number of non-zero constants gives you infinity" is very often not true, even assuming you're limiting yourself to positive constants. For example, $\sum_{n=1}^{\infty} 2^{-n} = 1$. See the Taylor series for $e^x$ or tan x as a couple of other examples. MrRedact (talk) 01:50, 21 February 2008 (UTC)

- None of those examples are constants. --Tango (talk) 12:08, 21 February 2008 (UTC)

- I guess it depends on what you mean by a "constant". My first example is the sum of 1/2, 1/4, 1/8, 1/16, 1/32, etc., all of which are positive constants, and whose sum is finite (1). I see now that you're talking about an infinite sum of identical positive terms, i.e., an infinite sum of terms that don't depend on the index of summation, in which case, yeah, that would always diverge. MrRedact (talk) 17:35, 21 February 2008 (UTC)

- Yeah, I should probably have said "an infinite number of copies of a non-zero constant". I'm pretty sure my conclusion was correct; I just didn't explain it too well, sorry! --Tango (talk) 18:42, 21 February 2008 (UTC)


- Here's another way to do it. Let i=j+k. Now, you have 1 case where i=2 (j=1, k=1); 2 cases where i=3 (j=1, k=2; and j=2, k=1), and in general i-1 cases for a given i. Thus we can express the desired sum as $\sum_{i=2}^{\infty} \frac{i-1}{i^2}$, which is equal to $\sum_{i=2}^{\infty} \frac{1}{i} - \sum_{i=2}^{\infty} \frac{1}{i^2}$. The first sum diverges and the second converges, so the overall sum diverges. Chuck (talk) 18:53, 21 February 2008 (UTC)
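Chuck's regrouping can be checked in Python. The sketch below uses hypothetical function names. It compares the double sum over the triangle $j + k \le m$ with the grouped single sum exactly, using `Fraction`, and then shows the partial sums growing like $\log m$. Because every term is positive, the order of summation does not affect divergence.

```python
from fractions import Fraction
import math


def triangle_sum(m):
    """Exact sum of 1/(j+k)**2 over positive integers j, k with j + k <= m."""
    return sum(Fraction(1, (j + k) ** 2)
               for j in range(1, m) for k in range(1, m - j + 1))


def grouped_sum(m):
    """The same partial sum grouped by i = j + k: sum_{i=2}^{m} (i-1)/i**2."""
    return sum(Fraction(i - 1, i * i) for i in range(2, m + 1))


def grouped_sum_float(m):
    return sum((i - 1) / (i * i) for i in range(2, m + 1))


print(triangle_sum(30) == grouped_sum(30))  # both groupings agree
for m in (10, 100, 1000, 10**4, 10**5):
    # partial sums keep growing, roughly like log(m)
    print(m, grouped_sum_float(m), math.log(m))
```

Splitting as Chuck does, the partial sum is $H_m - \sum_{i \le m} i^{-2}$, which behaves like $\ln m + \gamma - \pi^2/6$ for large $m$.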

Integral Question

Hi, I've got an integral question which I have the answer for, but I don't understand why it works.

The question is:

If f(x) is continuous on [0; ¼], show that:

I've got it into this form:

The next step i have written is:

But I don't understand how to get to that step. It doesn't help that my lecturer makes the odd mistake now and again.

Can somebody explain how to get between these steps and how to complete the rest of the question. Thanks for any help.

212.140.139.225 (talk) 23:00, 20 February 2008 (UTC)

- In the one-but-last formula, write f(x) instead of just f. I think the last formula should be something like

- which is justified by the first mean value theorem for integration. --Lambiam 11:39, 21 February 2008 (UTC)
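For reference, here is the standard statement of the first mean value theorem for integration that Lambiam cites. The same conclusion holds if $g \le 0$ throughout $[a,b]$.

```latex
\begin{gather*}
f \text{ continuous on } [a,b],\quad g \text{ integrable on } [a,b],\quad g(x) \ge 0 \text{ for all } x \in [a,b]\\
\implies \exists\, c \in [a,b]:\quad \int_a^b f(x)\,g(x)\,dx = f(c)\int_a^b g(x)\,dx.\\
\text{Special case } g \equiv 1:\quad \int_a^b f(x)\,dx = f(c)\,(b-a).
\end{gather*}
```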
