Talk:Turing machine/Archive 3

| This is an archive of past discussions. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |

| Archive 1 | Archive 2 | Archive 3 |

2nd note does not help explain the word 'innings'

Title. Did Wiktionary previously give a definition relevant to the quote?

I don't like the picture

Hello, I don't like the picture on the front page (artistic representation of Turing machine). In my opinion, Turing machine is a very simple concept, and any picture of it should capture the simplicity. Although the current picture is nice, it has lot of irrelevant details, which make a bad impression that Turing machine is some complex device, while it is not. I liked [1] much more for this page, although not perfect either - it uses rather dull shades of grey as symbols, and doesn't capture the notion of internal state.

- I agree; the "artistic" picture seems completely irrelevant. I'll remove it. --Brouhaha 21:07, 19 May 2007 (UTC)

- From the history, I see that an earlier, more relevant image was replaced by User:Alain Vey on May 16, with an image he apparently created, and the edit summary "(fix)". The misleading edit summary seems to me to be an indication that Vey was not acting in good faith, but rather trying to surreptitiously promote his own artwork. --Brouhaha 21:15, 19 May 2007 (UTC)

(Once again) I would suggest that authors who feel the need to add their "artistic" rendition to the article (this is the third of three "artistic" versions that I know of -- two with colors, this last one the most extravagant), submit them on the Turing machine gallery sub-article page where they can all coexist happily. As for a "best image?" Of all the images I've seen the "Poor Mug in a Box" is the simplest and most vivid but fails (in the 1974 original) because (as the correspondent noted above) it fails to show "the mug" holding a list of instructions -- it failed to show both "the poor mug" and "the mug" holding the instructions (!):

- "We are not concerned with the mechanics or the electronics of the matter. Perhaps the simplest way to picture the thing is quite crudely: Inside the box there is a man, who does all the reading and writing and erasing and moving. (The box has no bottom: the poor mug just walks along between the ties, pulling the box with him.) The man has a list of m instructions written down on a piece of paper. He is in state qi when he is carrying out instruction number i." (Boolos and Jeffrey, 1974).

But the image also fails because the Turing machine is a machine -- a mechanism acting as if it were a man doing very simple things -- but in fact it is not a man, whereas Emil Post's image is truly of a man working in a series of boxes:

- "The worker is assumed to be capable of performing the following primitive acts:

- "(a) Marking the [paper inside the] box he is in (assumed empty)

- "(b) Erasing the mark in the box he is in (assumed marked),

- "(c) Moving to the box on his right,

- "(d) Moving to the box on his left,

- "(e) Determining whether the box he is in, is or is not marked.

- The set of directions... [etc. etc.] (Emil Post (1936))

As noted in the "gallery" sub-article, computational machinery of the Turing-machine sort became practical only with the invention of the electromechanical relay (probably coupled with something like Hollerith punch-cards to act as the "Table of Instructions" . . . both rather visually-boring objects. The artistic challenge is to render elegantly (i.e. simply, accurately, interestingly, engagingly) this visual concept of machine following a table of instructions to print and erase symbols on an unbounded-length of paper tape (or an equivalent). wvbaileyWvbailey 19:36, 20 May 2007 (UTC)

Disproof of Universal Computing

I've proposed reverting the most recent change by Ewakened, because it seems like original research by one person being declared as The Truth. That is, this Selim Akl says that's the case, so universal computing is declared a "myth." I may be misunderstanding, but that claim if true is a refutation of the basic point of the article! So, it's like someone finding evidence that water is actually dry, and the page on water suddenly including a section on "the myth of wetness." Can we show that Akl's opinion is mainstream, or a minority view worth being included for its influence (like Creationism)? Otherwise it should be toned down to present Akl among "alternative views" or something rather than outright refutation. -Kris Schnee 19:07, 29 May 2007 (UTC)

- Akl uses nonstandard definitions, so his results don't actually reflect on what is ordinarily meant by "universal machine" . Removing the claims here was probably the right thing to do, since I can't see how to rephrase them to make them relevant (Akl's use of words like "computable function" are not at all the same). There is probably a place at WP where AKL's research would fit in, perhaps under "computable function" somewhere, but certainly not here. CMummert · talk 23:26, 29 May 2007 (UTC)

- Maybe under Church-Turing thesis? This deals with the concept that no single logical system can completely and accurately prove all true statements. -Kris Schnee 23:43, 29 May 2007 (UTC)

More history here?

(Response to comment in maths rating.)

Hi, wvbailey here. Over the past year I've pruned this article into subarticles . . . Most of the history can be found at algorithm. The issues are organizational: (i) (potentially) too-long article, (ii) that the two histories -- algorithm, Turing machine -- are pretty much the same. Would you suggest we create a new subarticle? If so, title? I've debated doing this to the algorithm history section, but I have felt reluctant because (as you suggest) the "read" is better with the history embedded in the main article, and reviewers at algorithm wanted more history. wvbaileyWvbailey 14:45, 21 May 2007 (UTC)

- I agree with you that the read is better with the history embedded in the main article, both here and at algorithm. I'm surprised you consider the histories to be pretty much the same. I suppose the history and motivation for Turing machines could be considered a part of the history of algorithms, but it is surely only a part, since it concerns the theory of computation (or Computability theory), rather than, for example, the development of algorithms, specific algorithms and applications of algorithms. I therefore wonder whether there is scope for shortening the part of the algorithm history dealing with the development of theory and models of computation, and expanding on these aspects here and in other theory of computation articles. Geometry guy 12:54, 9 June 2007 (UTC)

- You have a good point. I'm sort of thinking out loud in the following: With respect to "theory of algorithm", I have little information after ~1950's. Turing died in the early fifties, Gödel was slowly disintegrating, Kleene finished his Introduction to Metamathematics, Martin Davis and Kleene expressed in a simpler form Turing's Halting Problem, thereafter much theoretical work moved toward "mechanical computers" and "computability theory" aka computer science. An interesting article -- Logic -- in Encyclopedia Britannica remarks that not too much work in Logic is going on in the US anymore because the real knotty problems are thought to have been "solved", and logicians have a hard time placing themselves in universities. Something like this may have happened w.r.t. "algorithm" (theory of, as opposed to tons of work on instances of such as "greedy algorithms"), and "Turing machine" too, especially after the evolution of the Turing machine into the more user-friendly, computer-like counter machine and register machine models in the 1950's and 1960's.

- Markov's monograph is one post-WWII approach (see algorithm characterizations). And Knuth's three-volume set (he's working slowly on a fourth) is important because it was an attempt to codify common algorithms -- I've used his books myself. I corresponded with Gurevich (see algorithm characterizations), about a "text" or other reference having to do with "history of, and theory of, algorithm", and he said he did know of any. Apparently he and just a few others are working in this field (he's a theorist at Microsoft). He pointed me to his papers, which I dutifully read. In one I ran into Robin Gandy's "Theory of Mechanism" and this I hunted down and read (tough read), but this has more to do with limitations of Turing machines and computability theory and the Turing- and Church-theses. Why "algorithm" (theory of) and "Turing machine" are so mixed together is that (I believe) most theorists now consider the Turing machine (or a Turing-equivalent model such register- or counter-machine) as the sine qua non, the ultimate platform, on which (an instance of) "algorithm" is mounted (or must be mountable ultimately). However, some (e.g. Gurevich) believe that the combined machine+instructions is indeed "algorithm", the two cannot be separated. Knuth's specification of his MIX-computer pseudo-code, and his writing of his algorithms in his code, is a (tacit) admission of this. Also Stone (see algorithm characterizations). So in some minds "algorithm = Turing machine+instructions" and in others "algorithm = Turing machine instructions" (see Gödel's characterization) and in others "algorithm" = Turing-equivalent assembly-language and/or pseudo-code + instructions written in same." To confuse the issue, Gurevich mumbles something about "communication with an external world". And this leads into an interesting philosophic thread (ontology) -- someone added the philosopher Daniel Dennett's take on algorithm characterizations w.r.t. "evolution" (i.e. evolution as an algorithm !), and I have been mulling whether to add Searle's take too (the Chinese room question -- syntax versus meaning -- where is the "meaner" and what role does "the meaner" play in "algorithm" (theory of)).

- I am seeing in the above a fork in the road -- "History of the "Theory of algorithm"" versus "History of (instances of) algorithms." Originally a lot of the history was at Halting Problem, I pruned it and amplified it for algorithm. Turing conceived of his "machine" (i.e. mechanical model of a man working in a mindless, machine-like manner) as a route to solve a problem posed by Hilbert (the Entscheidungsproblem). That certainly needs to be added here at Turing machine. Ditto for Emil Post's expression of the same. I'll reread the pertainent section of Turing's biography to get some guidance, also Dawson's chapter III Excursus in Logical Dilemmas: The Life and wrok of Kurt Godel. Hopefully you and other correspondents have more ideas and takes on this. wvbaileyWvbailey

A stab at history

moved to main article page: wvbaileyWvbailey 19:54, 23 June 2007 (UTC)

Models equivalent to the Turing machine model

The parenthetical remark

(Interestingly, this seemingly minor relaxation enables the Turing machine to perform such a wide variety of computations that it can serve as a model for the computational capabilities of all modern computer software.)

Um, yes? First, and the more minor nitpick, it's not the Turing machine that is being relaxed, but the push-down automaton. Second, does it actually add anything to what is already a common motif throughout the article (from the very first sentence, in fact)? —Preceding unsigned comment added by 60.234.168.92 (talk) 20:05, 17 October 2007 (UTC)

A paradoxical situation

To keep discussion together, I'll remove this duplicate section and point to the discussion on this exact topic at Talk:Algorithm#A_paradoxical_situation. — Carl (CBM · talk) 20:37, 19 March 2008 (UTC)

Introduction

Hi all! I find the sentence stating that Turing machines "were not actually constructed" questionable. Indeed, there are no Turing machines constructed for practical problem solving I am aware of, however, there are numerous software simulators of Turing machines, written in virtually all Turing-complete languages. Additionally, hardware implementations of Turing machines do exist (many of them developed for educational purposes), for example, http://portal.acm.org/citation.cfm?id=569940, Another, less important issue that bothers me is the plural used in the introduction. Could someone please tell me why are "Turing machines" used against the term "Turing machine"? The singular sounds like a name of a class of the machines, and to me seems to be more in an encyclopaedic style. --Nebul4 (talk) 04:39, 22 June 2008 (UTC)

- There is no physical machine that computes Graham's number (GN) in unary (just to take an example). The point is that no physical machine implements unbounded space & time resources — so none are truly capable of simulating arbitrary Turing machines, which are abstract mathematical objects. The following scenario occurs only in fiction:

- In the midst of a computation that will eventually halt after several GN's of instruction cycles, a message appears ...

- "The computation has been suspended due to insufficient memory. To resume the computation, insert an additional GN-bit memory card into the storage device." ;o))))

- r.e.s. (talk) 20:20, 22 June 2008 (UTC)

- Yeah, that is undoubtedly an interesting argument :) !

- However, as concluded in the article too, the approach to model computers as finite automatons has proven to be "unproductive. The number of states involved is so large, and the limits so unclear that you don't draw any useful conclusions"(quote from Hopcroft-Motwani-Ullmans book). In my very modest opinion, I agree with the above judgement.

- Don't get me wrong, I like the clarity and purity of mathematical definitions very much. I am trying to elaborate why I am not fully satisfied with the introduction. If there is a consensus that strict definitions are to be followed throughout the article, it would be more precise to replace thus they were not actually constructed. into . They cannot be actually constructed without introducing finiteness limitation to the abstract model. Another question that may come up is a question of Alan Turing's intentions. I am sure that he did not plan to build machines with infinitely long tracks. I think it is false to say that Turing had ideas to make machines inherently impossible to construct.

- I believe that it would be the best to remove the sentence that they were not constructed from the introduction, and perhaps write a small section named for example Simulators of Turing machines.

--83.131.79.38 (talk) 01:48, 23 June 2008 (UTC)

Explaining CPU

I find it very easy to doubt that a T.M. "is particularly useful in explaining the functions of a CPU inside a computer."

A stab at philosophic implications

-- work in progress -- wvbaileyWvbailey 19:45, 25 June 2007 (UTC)

> Philosophic issues around the Cantor diagonal method [??]

> Turing's paper that prescribes his Turing Test:

- Turing, A.M. (1950) "Computing Machinery and Intelligence" Mind, 59, 433-460. At http://www.loebner.net/Prizef/TuringArticle.html

"Can machines think?" Turing asks. In §6 he discusses 9 objections, then in his §7 admits he has " no convincing arguments of a positive nature to support my views." He supposes that an introduction of randomness in a learning machine. His "Contrary Views on the Main Question":

- (1) the Theological objection

- (2) The "Heads in the Sand" Objection

- (3) the Mathematical Objection

- (4) The Argument from Consciousness

- (5) Arguments from Various Disabilities

- (6) Lady Lovelace's Objection

- (7) Argument from Continuity in the Nervous System [i.e. it is not a discrete-state machine]

- (8) The Argument from Informality of Behavior

- (9) The Argument from Extrasensory Perception [apparently Turing believed that "the statistical evidence, at least for telepathy, is overwhelming"] —Preceding unsigned comment added by Wvbailey (talk • contribs) 02:10, 2 October 2007 (UTC)

> "Foundations issues": foundations as philosophy [??] What is "calculable", How to define "effectively calculable", "number" etc. -- analog analysis versus digital computation. Philosophic notion of "intuitive" as used by the authors:

- "† We shall use the expression "computable function" to mean a function caculable by a machine, and we let "effectively calculable" refer to the intuitive idea without particular identification with any one of these definitions." (Turing (1939) footnote in U p. 160)

Sieg (2002) states in his Calculations by Man and Machine: Conceptual Analysis that

- "My objective is to resolve central foundational problems in logic and cognitive science that require a deeper understanding of the nature of calculations. . . . The claim for logic is almost trivial and implies the claim for cognitive science; after all, the relevant logical notions have been used when stiving to create artificial intelligence or to model mental processes in humans.

- "The foundation problems problems come to the fore in arguments for Church's or Turing's Thesis, asserting that an informal notion of effective calculability is captured fully by a particular precise mathematical concept." (p. 390)

Gandy in particular has a section 10.5 Philosophical Significance of Turing's Analysis with 3 major bullet points:

- (1) Analysis of what was vague and intuitive "stated with complete precision" (p. 80 -- also Godel's quote has this "kind of miracle" that "the diagonal procedure does not lead outside the defined notion" (Gandy quoting Godel p. 80)

- (2) "Turing's analysis can be seen as part of the general movement, already in evidence in the seventeenth centruy, towards a mechanistic -- or physicalistic -- account of human thought and behavior." (p. 80)

- (3) In contrast with Wittgenstein's question "how it is that a rule has been followed correctly when different applications of it are made to different cases", "Turing's analysis shows how following such a rule consists in the iteration of one of a fixed number of behavior patterns: to follow a rule is to repeatedly carry out a recipe... it does show that the use of rules with potentially infinitely many cases of application is just a rhetorical deive, which does not really raise a new sort of problem."

> Strong AI, weak AI problem of mind and free will versus rote machine (determinism vs non-determinism) (Hodges pp. 289-293)

- Gödel's Platonism

- "Note the question of whether there exist finite non-mechanical procedures not equivalent to any algorithm, has nothing whatwoever to do with the adequacy of the definition of "formal system" and of "mechanical procedure" (Gödel (1964) U p. 72)

> non-deterministic TM (posed by Turing as a c-machine, but not carried further except a footnote on p. 138 that simply says that if the choices are binary 0 and 1 to just successively ("using a systematic search" is Gandy's phrase) visit all the possible choices). Also: o-machine of 1939 [??]. See commentary Gandy (1988) p. 78

> Turing's analysis of a human computer:

- Symbols to appear on discrete locations in space: "Computing is normally done by writing certain symbols on paper . . . divided into squares like a child's arithmetic book. . . . the two-diemnsional character of paper is no essential of computation.

- Linear symbols or strings: "I assume then that the computation is carried out on one-dimensional paper, i.e. on a tape divided into squares" (U p. 135)

- Finite strings of B symbols: "I shall also suppose that the number of symbols which may be printed is finite. . . It is always possible to use sequences of symbols in the place of single symbols." U p. 135. (Turing allows a number B of symbols to be observed at once, p. 127 )

- Short symbol strings i.e. "immediate recognisability": "the compound symbols, if they are too lengthy, cannot be observed at one glance [he gives an example here of two long strings of 9's]"p. 136

- Finite states of mind: "If we admitted an infinity of states of mind, some of them will be "arbitrarily close" and will be confused.

- Simple steps so elementary: "Let us imagine the operations performed by the computer to be split up into 'simple operations' which are so elementary that it is not easay to imagine them further devided. . . in a simple operation not more than one symbol is altered. . . the squares whose symbols are changed are always 'observed' squares . . . each of the new observed squares is within L squres of an immediately previously observed squares." (p. 136)

- ?: "the squares can be found by some process of which my type of machine is capable." (p. 137)

- Deterministic part I:"The operation actually performed is determined . . . by the state of mind of the computer and the observed symbols. In particular they determine the state of mind of the computer after the operation is carried out."

- Deterministic II: the notion of "State formula": The notion of "State of mind" can be removed by a 'note of instructions' and appended to the symbols on the tape: ". . .he must leave a note of instructions (written in some standard form) explaining how the work is to be continued. . . . the note of instructions must enable him to carry out one step and write the next note. . . the state formula at any given staage is determined by the state formula before the last step was made, and we assume that . . . there is an axiom U which expresses the rules governing the behavior of the computer, in terms of the relation of the state formula at any stage to the state formula at the preceding stage."

Sieg (2002) reduces these [restrictions] to the following:

- ("B) (Boundedness) There is a fixed bound on the number of configurations that a computor can immediately recognize

- "(L) (Locality) A computor can change ony immediately recgonizable (sub-) configurations

- "(D) (Determinacy) The immediately recognizable (sub-) configuration determines uniquely the next computation step (and id)" (p. 396)

> Gandy (1988)'s complaint: he believes that Turing based his model (philosophically, fundamentally, "intuitively") on the modelled-behavior of a human computer doing a calculation, rather than on the (logically-)possible behaviors of a computational machine in space-time.

- "Turing's analysis makes no reference whatsoever to calculating machines. Turing machines as a result, as a codifcation, of his analysis of calculation by humans. 30 It is true that Turing ws fascinated by machines and mechanical devices . . . but he evidently did not think tht ideas derived from actual calculating machines were sufficiently relevant . . . it is by no means obvious that the limitations described in Section 10.2 [?? ] apply to mechanical devices; Turing does not claim this." ( p. 77)

- Gandy wonders what "finite means" means -- what limits are placed on computation? (p. 78)

--- end philosophy ---

- I believe the Can computers think? discussion is off-topic for this article; it is not about Turing machines, but about comparing computing machines with the human mind in general. Rp (talk) 16:13, 9 July 2008 (UTC)

The "state"

This section seems overly verbose, and a little confusing. Specifically, it suggests that a full configuration may include arbitrary infinite tape contents, which is not the case. I don't know to what extent readers are actually helped by the section as it is now, so I hesitate to rewrite it. Rp (talk) 16:27, 9 July 2008 (UTC)

- The tape is part of the machine. So, the machine's total state or the machine's configuration necessarily includes the contents of the tape. wvbaileyWvbailey (talk) 17:19, 9 July 2008 (UTC)

- Won't it be true, though, that the tape only contains a finite number of nonblank squares at any particular moment? This is key to the arithmetization of Turing machines in Kleene's T predicate - in order to store the entire state in a single natural number, the state itself has to be finite. — Carl (CBM · talk) 17:48, 9 July 2008 (UTC)

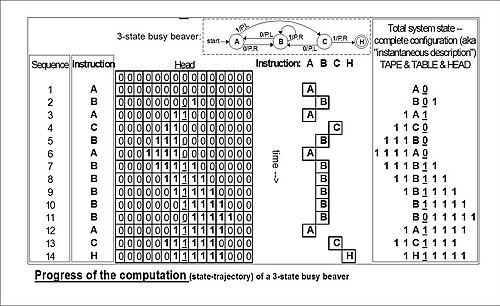

- Yes, as I recall a while back you and I batted around this point and I came to agree that while the tape is arbitrarily and "infinitely" extensible it is necessarily finite at any time. Thus at any instant the tape contents + the machine's configuration (e.g. the "address" of the current instruction together with both the tape head's location and the entire non-empty listing of symbols on the tape) can be represented by a number (maybe a very large number), but a number none the less. Actually this is what this (cautionary) section is stating and even gives an example of how Kleene symbolized this notion (with symbols q4 designating the current instruction) written over the actual tape square currenty being examined and/or changed). (Some authors put this instruction and/or instruction number to the left or the right of the currently-scanned square) . So the machine's total state might be written 1A1 whereas an incautious reader might (wrongly) think of the machine's total state is just "A" (the instruction "A", or more accurately, the "address" of the instruction that happens to be "A"). BillWvbailey (talk) 18:29, 9 July 2008 (UTC)

- The tape is not part of the machine; making it part of the machine would invalidate the comparison with the human computer, who uses external memory, and incorrectly suggests that only a bounded amount of memory is available, which would miss the whole point of the Turing machine. Rp (talk) 16:46, 21 July 2008 (UTC)

- I've gone back to look at the original paper. My sense is that Turing is somewhat ambiguous in his definition of what one of his a-machines really is, and notion of "machine" was somewhat flexible, as required by his proofs. There are at least three definitions.

- 1. At first, he certainly gives the impression that "the machine" is separate from "the tape"; initially, "the tape" is supplied to "the machine" with or without symbols on it. In modern terms, this is a finite state machine equipped with a "tape head".

- 2. But, as Quote 2 below shows, he goes a step further and pulls the "state of mind" out of the machine as well, leaving only the instructions and some primitive operations as "the machine" (i.e. a zombie man-aka-computer with no memory at all) to be supplied with scrap paper and pencil to record the "m-configuration" (state of mind). In modern terms then, this "machine" is not even a finite state machine + "tape head"; it is merely a ROM (Table of instructions) plus some random logic (a clock, some logic gates to a couple timing phases) plus a tape head; what is normally called "the state register" and "the tape" are external to this "machine".

- 3. However, this notion of finite state machine without tape falls apart in the case of the universal machine U. Now the "program" P (to be "exectued" by the finite state instructions of U) must be presented to U as a string of symbols on the tape in proper format. If all goes well the "program" P plus U, can do the computations of "target" machine M. "It is possible to invent a single machine which can be used to compute any computable sequence. If this machine U is supplied with a tape on the beginning of which is written the S.D of some computing machine M, then U will compute the same sequence as M.(p. 128). I interpret this to mean that U plus a piece of tape with (properly-formatted) symbols on it is equivalent to M. Thus at least sometimes, a Turing machine such as M = U+P will have to include some tape.

- In any case, he defines the complete configuration and "state of progress of the computation" (state formula) precisely:

- "At any stage of the motion of the machine, the number of the scanned square, the complete sequence of all symbols on the tape, and the m-configuration will be said to describe the complete configuration at that stage. The changes of the machine and tape between successive complete configurations will be called the moves of the machine." (Turing 1936/7 in The Undecidable p. 118)

- Quote #2: "We suppose, as in [section 9.] I, that the computation is carried out on a tape; but we avoid introducting the "state of mind" by considering a more physical and definite counterpart of it. It is always possible for the computer to break off from his work, to go away and forget all about it, and later to come back and go on with it. If he does this he must leave a note of instructions (written in some standard form) explaining how the work is to be continued. This note is the counterpart of the "state of mind"....the state of progress of the computation at any stage is completely determined by the note of instructions and the symbols on the tape. That is, the state of the system may be described by a single expression (sequence of symbols), consisting of the symbols on the tape followed by Δ (which we suppose not to appear elsewhere) and then by the note of instructions. This expression may be called the "state formula". We know that the state formula at any given stage is determined by the state formula before the last step was made, and we assume that the relation of these two formulae is expressible in the functional calculus...." (Turing 1936/7 in The Undecidable p. 139).

- Turing was a very young man when he wrote his paper (hell, it was his Master's Thesis, his PhD thesis was his "Ordinals" paper). As Martin Davis prefaced the paper "This is a brilliant paper, but the reader should be warned that many of the technical details are incorrect as given.... In any case, it may well be found most instructive to read this paper for its general sweep, ignoring the petty technical details. (In an up-to-date treatment thes3e ewould be handled quite differently anyhow.) (The Undecidable p. 115). Bill Wvbailey (talk) 19:10, 21 July 2008 (UTC)

There are lots of interesting historical details connected with Turing and his original paper, but, in my opinion, this article should focus mainly on the modern usage of the term "Turing machine". In this regard, there is is really no ambiguity -- look at the formal definition as given in the article. The tape is not part of the *Turing machine* per se, but is most certainly part of the configuration of the *overall system*. It's the latter that's necessary in discussions of computation, which involves the step-by-step evolution of the overall configuration. It's a waste of time to get too bogged down in the historical twists & turns.

r.e.s. (talk) 19:29, 21 July 2008 (UTC)

Basically, except for the definition of the U-machine, I agree with R.e.s. I don't see what difference having the tape as an arbitrarily extensible part of the machine (e.g. an operator stands by to add more tape if necessary -- see the drawing in Penrose's The Emporer's New Mind) or the tape as something added to the machine and external to it. As I recall (fuzzily) both models are au courant in the literature. With regards to non-Turing models of computation e.g. counter machines, you are provided with an unlimited supply of pebbles and either dig the holes deeper or add more holes or both, as required by the computation. (I'm familiar with Gandy et. al.'s philosophic issues surrounding computer vs computor. I don't see the substantive difference in thought-models of (i) a human computor with limited memory but augmented by paper & pencil, versus (ii) a human computor with no memory whatever but likewise augmented by paper & pencil; the latter is just an extreme case of the former). Bill Wvbailey (talk) 21:37, 21 July 2008 (UTC)

- A Universal Turing Machine is no different in this regard. The tape is not part of the UTM, or any other TM. For example, when one speaks of an enumeration of TMs, which certainly includes UTMs, the tape contents are not involved. In modern usage (at least), a TM corresponds roughly to a program, not to a program plus input.

r.e.s. (talk) 22:37, 21 July 2008 (UTC)

Back to this issue of the tape as part of the machine: The following is quoted from the book:

- David Berlinski, 2000, The Advent of the Algorithm, Harcourt, Inc., San Diego.

- "The blueprint for a Turing machine is both architectural and procedural. The architecture describes the machine's four parts, and these are common to all Turing machines; the procedures comprise its instructions, and although they are all written in the same code and obey the same format, they vary from machine to machine.

- "Architecture

- "An infinitely long tape: The tape is dived into squares and strethces in two directions . . ..

- "A finite set of symbols: [etc]

- "A reading head: [etc]

- "A finite set of states: [etc]

- "Procedures" [etc] (p. 185-186)

BillWvbailey (talk) 22:10, 6 September 2008 (UTC)

- I haven't been following this closely, so I'm not sure what argument is being made here. Whether the tape itself is part of the machine or not is going to vary from one author to another. Moreover, some authors will claim the tape is infinite, while other will only say indefinitely extendible; and some will say one-sided and some will say two-sided. What is certainly true is that the contents of the tape are not part of the Turing machine program, and that an enumeration of Turing machines will only enumerate those programs. Moreover, there are two "states" of a Turing machine. The Turing machine program itself consists of a finite number of states, and these do not depend in any way on the tape contents. On the other hand, the so-called instantaneous description of a computation includes both the developing contents of the tape and the sequence of program states that the machine has visited during the computation. — Carl (CBM · talk) 23:10, 6 September 2008 (UTC)

- To clarify the issue: I've bold-faced my assertion (now supported with the Berlinski reference) versus the other editors' assertions that "the tape is not part of the machine." At this point, their assertion is bullshit -- unsupported with references -- and therefore requires the [citation needed] tag. With the exception of your contention that some authors state that the tape is not part of the machine (I want proof in the form of references), I agree with what you wrote above in particular about the two kinds of "states" -- that was the point of my long screed above and the particular section of the article that discusses this distinction. Bill Wvbailey (talk) 15:26, 7 September 2008 (UTC)

- Bill, since you asked, I pulled a few books off my shelf.

- Rogers (1967), one of the more well regarded texts in recursion theory, does not consider the tape to be part of the machine. Here are some quotes from p. 13 to p. 14, with my parenthetical comments.

- "Consider a finite mechanical device which has associated with it a paper tape..." (so the tape is not part of the device)

- "At the conclusion of any step, the device itself (as distinct from the tape) takes on one of a fixed finite set of possible internal (mechanical) configurations. We refer to these internal configurations as internal states."

- "Given a Turing machine, a tape, a cell on that tape, and an initial internal state..." (Rogers would not specify the tape separately if it were part of the machine)

- Rogers (1967), one of the more well regarded texts in recursion theory, does not consider the tape to be part of the machine. Here are some quotes from p. 13 to p. 14, with my parenthetical comments.

- Mendelson (1979, p. 241) says: "If a tape is put into a Turing machine and the reading head is placed on a certain square, then the machine begins to operate on the tape: printing and erasing symbols and moving from one sqaure to an adjacent one. If the machine ever stops, the resulting tape is said to be the output of the machine applied to the given tape." For Mendelson's purposes, the tape is not part of the machine, it is the raw material that the machine uses to operate.

- Interesting. This issue (or the confusion therein) is probably worth mentioning in the article. Here's the technical rub: given no tape the tapeless machine, when encountering a "branch" (test-and-jump) instruction will be forced to yield "u" for "unknown". Thus this tapeless architecture is not a Turing machine. It only becomes a Turing machine when (at the least) a blank tape is installed, and a test on a square yields at least "blank". (I.e. a decision "blank square" (logic 0 for example) is not synonymous with "empty: tapeless, no decision can be rendered"). I think where the confusion lies is in the distinction between a physical object called "the blank tape" and the blank symbols on this physical tape. In a kind of set-theory analogy: The tapeless machine's tapelessness represents nullity, as opposed to an empty set. Another example from consciousness theory: the machine-with-tape represents a (possibly-blank) brain, and the symbols represent the "ghost in the machine". Bill Wvbailey (talk) 22:14, 7 September 2008 (UTC)

- I don't think there's any reason to mention this in the article. The semantics of the machine are such that it is never executed without a tape installed (just like it makes no sense to run a press brake without a piece of metal in it). The representation of a Turing machine as a set of quadruples or quintuples also implicitly separates the machine from the tape, as there is nothing in that representation to stand for the actual tape contents. — Carl (CBM · talk) 01:29, 8 September 2008 (UTC)

- Not in the definition of the machine itself, but in the definition of its behavior, which defines it to act on a finite string of symbols (of variable size). I.e. if you're going to imaging an infinite tape it's going to have to be blank on all but a finite sequence of cells. The reason for explaining this in the article is that the "infinite tape part of the machine" meme has caused a lot of confusion in the past, and continues to do so, see e.g. Talk::Declarative programming or a thread in comp.theory. —Preceding unsigned comment added by Rp (talk • contribs) 11:40, 13 September 2008 (UTC)

References

This article was started with parenthetical referencing (e.g. this revision). At some point, someone renamed the references "further reading" and added footnotes [2]. I am going to go through and reformat everything back to parenthetical referencing. It's completely inaccurate to call the references "further reading" when they are actually referenced in the article text. The whole thing is probably due to someone who added footnotes in good faith, not realizing that a different style was being employed. — Carl (CBM · talk) 14:07, 13 September 2009 (UTC)

- OK, I did that. I split up the references somewhat arbitrarily into categories (primary literature, computability texts, etc.). That division may need to be tweaked some, but I think it is more helpful to readers than a single list of dozens of references. — Carl (CBM · talk) 14:32, 13 September 2009 (UTC)

What does "innings" mean?

What does the word "innings" mean in the context of the following quote?

- Any symbol on the tape may therefore eventually have an innings.

I have never heard the word used outside of baseball, and in that context it is plural. The article "an" implies it is singular here. I tried looking it up on Wiktionary but there is no definition matching this use of the word. Perhaps we need to add a rare or antiquated definition of the word to Wiktionary. Then, this article should link to that definition at the point where the word is used. —Voidxor (talk) 03:13, 12 September 2009 (UTC)

- I wondered the same thing. Rather than use a regional-English slang (or whatever is going on here) I suggest the author of this should find an American-English word to replace it. Either that or we'll have to fix the problem ourselves. Bill Wvbailey (talk) 14:09, 12 September 2009 (UTC)

- I'll bet it is a term from English cricket meaning "come to bat" . . . any symbol can be "put into play" beneath the head. I put a Clarify tag on it. Bill Wvbailey (talk) 14:23, 12 September 2009 (UTC)

- This was my sense of the word as well when I first entered the quotation. Just double checked the OED, and the term is used in an extended sense outside of Cricket, always used in the plural in Great Britain, for a turn of any kind. Added the def. to Wiktionary [3] and footnoted the word. Is this format okay? --Gwythoff (talk) 19:14, 12 September 2009 (UTC)

Turing machines and the human brain

I reverted a change that stated an equivalence between what Turing machines can do and what the human brain can do. The relationship between Turing machines and the mind is complex, with several viewpoints in the literature I believe. The text that was added implied it is an accepted fact that the brain and Turing machines are equivalent, which I cannot believe is correct. Also, while I don't mind the idea of a discussion somewhere about the relationship between Turing machines and the human mind, I don't think it really belongs here. Wherever it goes, it will need to be more carefully researched and referenced. — Carl (CBM · talk) 15:10, 22 April 2008 (UTC)

- Yes, while it's clear that a human can simulate a Turing machine (putting aside mortality, etc) I imagine there are some strong opinions about whether human brains can be simulated by Turing machines. People who believe in strong AI like me would tend to say "yes" while those who don't would tend to say "no" or "only a Turing machine so large as to be impractical to construct". Still others would say "yes but it's a poor model that fails to capture the essential high-level structure of the human brain." Ideally this would all go in some article discussing the philosophy of the man/machine divide, and we could link to it from here. Dcoetzee 17:03, 22 April 2008 (UTC)

- I agree with all of the above. The jury of philosphers of mind is very much still in the jury room, debating this (in particular: Dennett versus Searle). Bill Wvbailey (talk) 20:11, 22 April 2008 (UTC)

- Yes, it's tough to say. More research is definitely required. Also, Dcoetzee, I don't believe that morality precludes this relationship. Morality can be accounted for and explained in terms of a the way a Turing machine and the brain could be alike. But more research on behalf of Wiki-editors is needed before it can be mentioned in the article with any credibility. ask123 (talk) 06:41, 26 November 2008 (UTC)

- Dcoetzee said "mortality," not morality 128.194.39.250 (talk) 05:09, 3 March 2009 (UTC)

This is best discussed in the article on Church-Turing thesis because it's one of the variations. There's already a bit of discussion [[ there. Once that's developed some more, a pointer from here couldn't hurt. Pcap ping 01:30, 17 September 2009 (UTC)

Small UTMs, Wolfram stuff

I've cut down some of the WP:UNDUE weight given to those topics. Still there's more written about here than the topic deserves relative to other topics. Nobody outside Wolfram Research pays much attention to small UTMs (look where the most recent academic review was published), except when there's cash to be earned. It's effectively pop-compsci or pop-math. Pcap ping 05:26, 17 September 2009 (UTC)

- That paragraph, and the corresponding section in universal Turing machine#Smallest machines needs to be almost entirely rewritten based on this survey, which accounts for the different definitions of universality, which do matter when looking for the smallest machine. Alex Smith's machine doesn't even fit one of these weaker definitions, because the tape in his machine uses an infinite non-periodic initial condition; basically the Wolfram Research staff, which didn't even consult the supposedly official committee of academics, could accept any definition of universality they wanted, and the definition was never spelled, even after the prize was awarded. Vaughn Pratt's summary of concerns—mathematical and social. Discussing in detail these issues is way beyond the scope of this article, so it should be relegated to the sub-article. I'd suggest just giving here a general idea that there exist standard small machines, weak small machines, and the uniquely-nonstandard 2-3 machine, the universality of which is still in doubt in academia. Pcap ping 09:05, 17 September 2009 (UTC)

- I agree. What you wrote about 'preloading' the tape is interesting, though. (When I read this I recalled clever work done by Wang in the 1950's.) And the weirdness seems fun. Like the Turing tarpits. This sort of research belongs in either the UTM article or even a sub-article off of that one such as e.g. " Smallest Universal Turing machine "; that way editors can expand to their hearts content. A possible approach for the Turing machine article is the Busy beaver approach; folks constantly update the article with the busiest beavers and their results. But they keep it tidy. You could prune the Turing machine article's section even more by reporting the current smallest UTM (with any provisos) plus a link to UTM or directly to e.g " Smallest Universal Turing machine ". Bill Wvbailey (talk) 13:00, 17 September 2009 (UTC)

LEGO Turing machine

IMHO very interesting "hardware implementation of a 'Turing machine'" (per above post by User:Nebul4 on 22 June 2008) constructed of LEGOs at Aarhus University by Mikkel Vester, Sean Geggie, Martin Have, and Anders Nissen

A blog about the project at http://legoofdoom.blogspot.com/2009/01/conclusion.html . Also includes demonstration video. Video also available on YouTube.

I understand that hardware implementations of Turing machines are not "real" Turing machines, nevertheless I think that many people would find an article about them interesting. Hardware implementations of the Turing Machine ??

-- 201.37.230.43 (talk) 00:33, 30 January 2009 (UTC)

I second this! 188.124.129.218 (talk) 23:43, 28 October 2009 (UTC)

As long as the entries are not original research, i.e. as long as we can trace the sources back to published articles e.g. in school newspapers, on-line journals, papers, etc. The more reputable the better. Transient un-archived blogs are iffy in my opinion; you may want to inquire deeper into wikipedia policy on what is okay and what isn't okay as a source. . . . I've used on-line articles, newspaper articles, even college alumni magazines etc, but more ephemeral "posts" such as blogs and Youtube videos. . . hmm, I don't know. Also, there may be copyright issues you have to attend to. Whatever . . . if there's pedagogical or entertainment value in it, then why not write an article about it? Be bold. Sounds like fun. Here's an example of what I would consider to be permissible: By pure accident, via old logic books I downloaded from googlebooks, I've uncovered at least 3 odd-ball mechanical devices designed in the late 1800-early 1900 era to analyze a "syllogism or any other simple logical argument" -- see Algorithm characterizations for more about two of them; this info could make an article if I were willing to do more research into the original journal papers. For another example of a "tarpit", these being programming rather than mechanical, see Turing tarpit. On the other hand, the following are examples of O.R.: Many years ago I wire-wrapped a Post-Turing machine out of HC-CMOS parts with a shift register "tape" 32 bits long and a table of 256 instructions stored in a RAM. I was able to run a 4-state busy beaver, and I wrote the universal (U-machine) "code" for it and then ran a tiny program using the U-code. Again, the fact that I did this and particulars of the designs are examples of O.R. and not suitable for entry into an article. Bill Wvbailey (talk) 04:14, 29 October 2009 (UTC)

Good article, a few Possible Additions

Good article but still may still need a little something, hope some of this helps -

First (I may have missed it) but there needs to be a simple section in the intro section on the equivalence between Turing Machines and all computers.-

It is a fundamental general rule that all workable computing devices are at some level ultimately equivalent and the standard model device used for this definition is the Turing Machine or TM. All computers ultimately are Turing Machines and all modern computers ultimately evolved from Turing Machines. Although this is only a generalization it is still very strong and useful all the same.

- - - -

- My take on it is this: A Turing machine is a mathematical ideal, a theoretical construct. TM's don't exist except in the mind, and there only as an approximation. In theory a TM can simulate any computer (minus the I/O problem, because Turing machines assume that the input is on the tape before it starts, and then it prints only to its tape), but the converse is not true: no computer can truly simulate a Turing machine except in those limited cases where limited tape/memory is okay for the calculation at hand and the calculation is not so enormous that it exceeds the space- and time-dimensions of our universe-- see Minsky's quote at Halting problem. Moreover, a TM never makes mistakes because of e.g. "noise" or "part failures" or "mistaking symbol "x" for symbol "y" " on its tape. And a TM can work as slow or as fast as we want it to work (i.e. its "clock" can be infinitely fast -- its parts are not governed by the speed of light). A TM doesn't burn power, nor does it (the TABLE, the HEAD and the TAPE) occupy a volume of space (a TM is not bounded by spatial dimension). Thus a TM can compute anything that is computable (by humans or otherwise: imaginable and beyond-imaginable), at least that is the theory. And a computer cannot, because it is space-time limited. My point is: it's pedagogically risky to confuse the two. Maybe others have a take on this. Bill Wvbailey (talk) 15:32, 15 April 2010 (UTC)

I have well over 10 years of experience working on Strong AI and a lot of this is centered on Turing Machines.

A more formal more abstract definition that removes some minutiae-

A Turing Machine is defined as a Machine with a finite number of internal states that control its behaviour. These states are used to drive a control paradigm that drives a feedforward and feedback loop involving a memory which includes state and control information. Information from memory controls the state of the machine and vice versa. In short a Turing machine is generally controlled by sequences of instructions that are stored in its memory space.

There are three big differences between real computers and the Turing Machine model, in the efficiency of the central multiplexer that interfaces between the memory and control state logic, in the size of the memory window, and in the complexity and capability of the 'internal' state logic itself. A fourth minor difference is that being notional Turing Machine models have non-finite memory size.

Turing Machines are not well behaved because logic is defined by small finite additive hunks and a large memory space which together create a near infinite phase space. Introduce even the smallest instability into its control set (say a single wrong bit) and a Turing Machine can behave almost completely unpredictably. For this reason real Turing machine designs generally always follow extremely strict sets of control paradigms -

- Rigid synchronous clock based control.

- All logic and calculation and memory done with zero defect filters, ie using digital numbers.

- At information level multilayered control hierarchy, usually redundant and with safety nets.

- Strict logical control design hierarchy, ie programmatic control.

It is interesting to note that most of the above can be applied to brains and the argument about equivalence between Turing Machines and brains can be answered almost certainly that brains are Turing Machines to some degree. An addendum to this is that brains may use exotic physics to achieve some their capabilities, as was suggested in Roger Penrose's 'The Emperors New Mind' although this does not preclude Turing Machines there either. Although such physics is still in flux many computational processes are probably not otherwise computationally computable especially considering the limited processing speeds of organic neural networks, perhaps the biggest of these is brain development itself. (any further would be OR)

Lucien86 (talk) 14:22, 15 April 2010 (UTC)

Comparison with real machines: DFA or LBA?

The section Comparison with real machines compares to a deterministic finite automaton. Yes, this is a female dog to analyze due to the combinatoric explosion of states. But a linear bounded automaton is a better compromise model: it is identical to a Turing machine except that the tape cannot advance past a length specified by the input. Why does the article make this absurdly detailed comparison to DFAs yet not mention LBAs at all? --Damian Yerrick (talk | stalk) 22:26, 31 July 2010 (UTC)

Informal description section

I believe the following statement may be worded poorly:

In the 4-tuple models, erase or write a symbol (aj1) and move the head left or right (dk) are specified as separate instructions.

I think the "separate instructions" should be "a single instruction". If I read the section correctly, the 5-tuple has separated instructions.

Pekechoo (talk) 17:11, 28 March 2009 (UTC)

- Also, In my opinion, the informal description is too informal - in that sense that it gives a wrong impression of what makes a Turing machine. Currently, the informal description says More precisely, a Turing machine consists of [...among other things...] A TAPE which is divided into cells, one next to the other. This is wrong, as, with respect to the given formal definition, the tape is not part of a Turing machine. The Turing machine operates on a tape. If the tape was part of a Turing machine, then merely changing a tape cell's state would make up a different Turing machine. Please correct me if I am wrong. --Abdull (talk) 10:58, 4 September 2010 (UTC)

The term "Turing machine"--coined when, where, and by whom?

A few online sources (M-W, Online Etymology Dictionary) give 1937 as the year the term "Turing machine" was coined, but they don't give a source, and that date seems too near in time to the publication of the paper. When was the term first used, in what publication could it be found, and who coined it? Robert K S (talk) 03:36, 26 January 2011 (UTC)

- According to the OED, it was on page 43 of Church's review of Turing's Entscheidungsproblem paper: Church, Alonzo (1937). Journal of Symbolic Logic. 2 (1): 42–43 http://www.jstor.org/stable/2268810. Retrieved January 28, 2011.

…a human calculator, provided with pencil and paper and explicit instructions, can be regarded as a kind of Turing machine.

{{cite journal}}: Missing or empty|title=(help). —Mark Dominus (talk) 04:58, 26 January 2011 (UTC)

Wrong delta function definition?

Shouldn't delta function go from Q x Gamma \ F -> Q x Gamma x {L, R}? Currently machine can't finish, since it never reaches any f from F. — Preceding unsigned comment added by 31.147.181.170 (talk) 12:44, 14 April 2012 (UTC)

Question about the article

It's written in the article that Tuting machine formally defined as a *7-tuple*, but it looks to me more similar to a group since in my opinion there's no imporance to the order, and there's nothing which can appear more than once in that "tuple". Why it's not true? 85.65.73.40 (talk) 12:36, 12 August 2012 (UTC)

- To approach the question: In its fully-substituted form e.g. the example of the busy beaver, the 7-tuple consists of 7 symbol strings each inside braces { }, i.e. { 1, 0 }, etc. Suppose you were given any Turing machine specification, and then you were to randomly shuffle the 7 symbol-strings around inside the 'tuple, could they be unpacked by a mechanism (a TM) and assigned to another mechanism (a TM) that could build the TM specified by the 7 symbol-strings? Or does the unpacker TM need the order to do the unpacking? My guess is YES the unpacker needs the order . . . but you could append a unique symbol (say the 7 symbols from the generalized string, which are unique) to each string in a defined location (say, on the left), thus creating 7 ordered sub-tuples, and you'd group those as a "set" or "collection". What earthly good this would serve I have no idea, and it may be O.R. to boot; I've not seen this in the literature. BillWvbailey (talk) 14:36, 13 August 2012 (UTC)

LEGO Turing machine

You might want to add this LEGO version to the paragraph "Comparison with real machines": [4]. This version allows to see the similarity to the Turing machine in a better way. Galzigler (talk) 17:20, 10 December 2012 (UTC)

{mergefrom|Multi-track Turing machine}

The following discussion is closed. Please do not modify it. Subsequent comments should be made on the appropriate discussion page. No further edits should be made to this discussion.

This proposal was re-targeted to Turing machine equivalents in 2009, so I have archived it to avoid confusion. Moonraker12 (talk) 12:26, 15 February 2013 (UTC)

A newbie editor just created Multi-track Turing machine. Would someone please eyeball it, and merge if appropriate? Thanks. -- Fullstop (talk) 20:45, 1 March 2009 (UTC)

Sorry about creating the stub article, I have now expanded it to the point where merging it with Multi-tape Turing machine may not be appropriate, though feedback on the issue would be apreciated. (I forgot to log in so the page history will only show a anonymous user) Jsorr (talk) 06:08, 2 March 2009 (UTC)

Actually i believe it would be much more suitable to this merge article with with turing machine equivalents rather than Multi-tape Turing machineJsorr (talk) 09:29, 2 March 2009 (UTC)

- I'd never heard of a "multi-track Turing machine" until this. Where in the literature is this referenced? If I understand the article, it is just as if someone were to slit a single-track tape and write, from a single (multi-track) head, multiple symbols on the now-multiple tapes, and then read these symbols from the single(multi-track) head. So at each square the machine must concatenate the square's multiple symbols into a single "symbol" and then uses this single symbol? I don't see how this notion is anything different than a single-tape machine; it just expands the available number of "symbols" to the cross-product of the original symbol collection. For example, if the machine's multi-track head can write 3 symbols {B,q,r} (where B symbolizes the blank) then on three tapes we'd have 26 symbols + the all-blank (B,B,B), listed here as ordered pairs in the collection: { (B,B,B), (B,B,q), (B,B,r), (B,q,B), (B,q,q), (B,q,r), . . . (r,r,r) }. Each one of these can be considered a single symbol or "blank (B,B,B)". Bill Wvbailey (talk) 16:30, 2 March 2009 (UTC)

I just added appropriate references. I believe that you are correct on how the machine works. I should have properly explained its use which i have not done yet. If you begin with a multitape Turing machine where all heads move independently, there is a simple algorithmic procedure for the construction of multi-track Turing machine with one read/write head only.

Multitape Machine M:

| Tape 1 | B | a | a | c | b |

| Tape 2 | a | b | c | a | a |

where the tape heads are both positioned at the symbol c, and capital B is the black symbol.

you can construct a multi-track machine as follows where X and # are symbols not in the language (note that the original machine has k tapes this one has 2k+1 tracks.):

Multitrack Machine M':

| Track 1(Tape 1) | B | a | a | c | b |

| Track 2(Tape 1 head position) | X | ||||

| Track 3(Tape 2) | a | b | c | a | a |

| Track 4(Tape 2 head position) | X | ||||

| Track 5(# in first position only) | # |

states are 8 tuples in the form [s,qi,x1,x2,y1,y2,d1,d2] Where qi is an element of Q,xi,yi are elements of ∑ union D and d1 is an element of {L,R,S,D}. Transitions on the first machine can be simulated by:

1) find1; moving to the right on track 2 until X is read and recording the symbol on above it (track 1). Move back using # on track 5 to properly reposition the tape. state [f1,qi,x1,D,D,D,D,D] is entered where x1 is the track1 symbol.

2) find2; moving to the right on track 4 until X is read and recording the symbol on above it (track 3). Move back using # on track 5 to properly reposition the tape. state [f2,qi,x1,x2,D,D,D,D] is entered where x1 is the track1 symbol.

3)state [f1,qj,x1,x2,y1,y2,d1,d2] is entered.where qj,y1,y2,d1,d2 are obtained from the transition delta(qi,x1,x2) on the original machine M.

4)print first symbol p1. move right to the X in track 2. move the X in the direction d1. write y1 on track 1.

5)print 2nd symbol p2. move right to the X in track 4. move the X in the direction d2. write y2 on track 3.

Terminate when state [f2,qi,x1,y1,D,D,D,D] is entered where qi was an accepting state of the original machine.

This explanation probably needs more explanation and revision to go into the actual wiki article . see if it makes sense then i can add it (or anyone can add it if it makes sense) to the article with revisions. probably could make the article much more concise overall as well(Multi-track Turing machine). Thanks for feedback so far. Jsorr (talk) 03:36, 4 March 2009 (UTC)

- I'm confused by your example. You show the head(s) aligned with the "c"s, but not in vertical alignment. If all the tapes move together, and there are multiple heads and they are fixed, wouldn't they all be aligned vertically? Like an old 8-track tape player, or a fancy studio multi-track tape recorder (I doubt there are any of these left nowadays). As I remember people had to "align the heads" because of vertical skew from one recording (or playback) to another. Ditto for floppy-disk recorder/writers. Technically, it won't matter if the heads are offset -- as long as they don't move and the tapes don't move differentially to one another. Is the point of this model to demonstrate/prove that "head skew" isn't an issue? [It might become an issue if the speed of light or speed of signal propagation is included in the model: Imagine a two-headed machine with one head located 186,000 miles away from the other head. See Robin Gandy's 1980 "Church's Thesis and Principles for Mechansisms", Barwise, Keisler, Kunen, eds. The Kleene Symposium, North-Holland Publishing Company pp. 123-148 ] Bill Wvbailey (talk) 15:24, 5 March 2009 (UTC)

The point of this model is that you can begin with a machine where the heads are not only not aligned but also doing different things and then construct a model with one head. For example, the original machine M could have one head stay, another head move left and a third head move right during a transition, yet it is still possible using this method to create a machine M' with only one head that can simulate this transition and all other transitions of the machine. Jsorr (talk) 14:49, 8 March 2009 (UTC)

- Okay, but how does this model differ from a multi-tape machine, where the tapes move independently but the heads are fixed? Bill Wvbailey (talk) 17:48, 8 March 2009 (UTC)

I agree that Turing machine equivalents is where this should be merged (as proposed near the start of this discussion). So, I've changed the tags accordingly. Pcap ping 22:56, 1 September 2009 (UTC)

- This discussion ended in September 2009 with moving the target of the merger to Turing machine equivalents. There has been no support for the merge in the past three-and-a-half years (in fact no discussion at all) so I’ve closed it as stale.

- However, in the meantime we now have articles on multi-track and multi-tape Turing machines; I’ve opened a discussion about this at Talk: Turing machine equivalents if anyone here wishes to comment. Moonraker12 (talk) 12:35, 15 February 2013 (UTC)

Can a HDD be defined as an implementation of a TM? Like a TM, it has a read/write head, and can read or write only from one place at a time. The disk is the tape. Unfortunately, it's also quite slow, as a TM is. Galzigler (talk) 21:19, 25 March 2013 (UTC)

- I'll try to answer it. Most commentators consider the "Turing machine" to be the finite state machine (FSM) that controls the tape, not the tape itself. It's not clear whether the tape's "read/write head" and "handling mechanism" are parts of "the tape" or the FSM. In the simplest form (Post-Turing machine) the FSM commands the tape-handling mechanism to move the tape LEFT or RIGHT one square (note the integer nature of the tape), the FSM can command the tape-head to PRINT or ERASE the square under the read/write head. Finally, the FSM inputs the status (square printed or blank) of the square under the read/write head. In a sense the whole thing while in motion (FSM + tape-handling + tape) constitutes a computation; you cannot have a full-fledged "Turing machine" without a memory associated with the finite state machine, nor can you have a computation. There's another model, the Post model, which has a man who, per a list of instructions, moves from room to room marking or erasing a piece of paper he finds there (only one mark and one paper allowed per room). So the man takes the place of the tape-handling mechanism and read/write head. And the man's instructions are the FSM, and the rooms and the paper in each room is the tape. But that's why by itself a disk drive (a memory + tape-handling + read/write head) is not a Turing machine. Also, there's another fussy problem: a TM has provided to it 'as needed' an "unbounded" amount of memory. So your disk drive has to be a bank of disk drives that can be extended ad infinitum. Bill Wvbailey (talk) 14:04, 27 March 2013 (UTC)

Turing Machine Description

Q and are defined as finite sets. I believe that it might be a further restriction that these sets be non-empty. The edition of Ullman and Hopcroft I have just defines Q as a set of states, and is defined merely as the tape alphabet. When I've seen these terms used in the context of formal language definitions in my undergraduate theory of computation class, "alphabet" and "states" were usually defined with the further restriction that the sets be non-empty. I've gone ahead and changed the definitions in the article.

Yes. This has also come up in the context of Kolmogorov complexity; see the talk page about counting null strings re the formula in the article. I am not satisfied that that particular issue has been thoroughly addressed. Bill Wvbailey (talk) 18:35, 10 July 2010 (UTC)

imho, the codomain of the transition function should be Q x Gamma x {L,R} instead of Q\F x Gamma x {L,R}, because with the current definition, the Turing machine can never get to the final state. Or if I am wrong, the page should explain why...

128.178.158.117 (talk) 14:45, 23 March 2012 (UTC)sam

Execution of TM, TM as acceptor/partial function

I miss a formal definition of the execution of a TM. In particular, that should clarify the role of final/accepting states. Now, the words final and accepting are not further explained in the article. For instance, does a TM halt in a final/accepting state, or merely pass through it, signaling the event to the outside world (like a finite automaton)?

I also miss the various ways in which TMs can be used to define abstract computations. In particular, how a TM accepts a word (something like: put the word on the tape, head on its leftmost symbol, accept when hitting a final state, reject otherwise). Or, how a TM computes a partial function (something like: put the input on the tape, head on the leftmost non-blank symbol, run the TM until it halts, take the final tape configuration as output).

Wstomv (talk) 19:18, 5 May 2014 (UTC)

Confusing exposition in the symbols.

In the section "Turing machine "state" diagrams" the specification of symbols for the Turing machine to write on the tape changes from the previous 0/1 to P/E. I can understand P = "Print" = 1 and E = "Erase" = 0, but it is inconsistent with the general notation and needs some explanation (or better, be changed in line with the rest of the text). 140.180.242.122 (talk) 04:38, 19 April 2015 (UTC)

Different 3-state, 2-symbol busy beavers?

It seems that throughout Wikipedia, there are different 3-state, 2-symbol busy beavers used.

- In this article, we have

| Tape symbol | Current state A | Current state B | Current state C | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Write symbol | Move tape | Next state | Write symbol | Move tape | Next state | Write symbol | Move tape | Next state | |

| 0 | 1 | R | B | 1 | L | A | 1 | L | B |

| 1 | 1 | L | C | 1 | R | B | 1 | R | HALT |

- In the Busy beaver article, we have

A B C 0 1RB 0RC 1LC 1 1RH 1RB 1LA

Does this mean that there exists more than one 3-state, 2-symbol busy beaver? --Abdull (talk) 10:40, 4 September 2010 (UTC)

- Correct. The first one halts after 13 steps with six 1's as output, the second one halts after 14 steps with the same output. Joachim77 (talk) 17:37, 10 September 2010 (UTC)

- so only the second one is the real busy beaver because it runs one more steps? 140.180.242.122 (talk) 04:40, 19 April 2015 (UTC)

Partial function

"is a partial function called the transition function". What will machine do if transition function is not defined for pair (current position, current state).? 85.140.206.60 (talk) 09:27, 9 June 2015 (UTC)

- It was mentioned somewhat vaguely under Turing machine#Informal description, Nr.4: "In some models, if there is no entry in the table for the current combination of symbol and state then the machine will halt; other models require all entries to be filled." I added a more formal description under Turing machine#Formal definition. - Jochen Burghardt (talk) 12:50, 9 June 2015 (UTC)

Is it a mathematical model?

I tried linking it to mathematical model but it got undone with the reply: it's not because that page refers to modelling real systems, whilst "while a TM can exist only as a thought experiment, due to the unlimited tape)"

Should one or the other change? - the page 'Mathematical Model' could be extended to include 'though experiments' (such as the turing machine). - Or the statement, "a turing machine is a mathematical model" could be changed.. Fmadd (talk) 17:20, 29 May 2016 (UTC)

- I'd prefer the 2nd solution. In fact, your wikilink drew my attention to the sentence, and I think it wasn't good before your edit. So just reverting your edit wasn't fully appropriate - sorry for that. As far as I remember, textbooks usually introduce a TM as a mathematical object, often some kind of 7-tuple of sets and symbols, as in Turing machine#Formal definition. - Jochen Burghardt (talk) 17:39, 29 May 2016 (UTC)

ok I can't really comment further - but I'd be very curious to know what the precise solution is here. I don't know where you'd draw the exact boundaries between such things. I would have thought a mathematical model could include thought experiments, but if there's a more accurate term here, fair enough. Fmadd (talk)

- I wish this stupid meme would die already! A TM is not* just a thought experiment, it can actually be built. It does not contain the tape! Those who say it must contain its tape (point it out in the mathematical description - good luck!) miss the point entirely. Rp (talk) 09:15, 30 May 2016 (UTC)

You are right in that the tape isn't actually mentioned in the formal definition. However, it is tacitly understood that e.g. after writing a symbol 'A', moving n fields left, moving n fields right, an 'A' will be read again. In this sense, all algorithms running on a TM rely on the tape geometry. What I intended to express by "thought experiment" was that the above example cannot be observed in the real world if n is chosen sufficiently large. - Jochen Burghardt (talk) 21:33, 30 May 2016 (UTC)

- Jochen Burghardt: Almost. The part of the tape that has been written is part of the definition of how a TM operates, so there is nothing tacit about the fact that after writing a symbol 'A', moving n fields left, moving n fields right, an 'A' will be read again. However this doesn't describe what happens when the tape head runs off the part of the tape that was given as the finite input or written by the machine before. One way of dealing with this is to consider only machines that provably never do this when started on an input that is surrounded by a special 'blank' character on both sides, by constructing the machine in such a way that whenever it moves across that character, it will always write it to the tape before doing anything else. Another is to say that whenever it reads an unwritten non-input cell it always reads a blank. Neither requires the introduction of an infinite tape. "Infinite" tape is just a lazy way of saying that the written part of the tape can grow arbitrarily large. Rp (talk)

- Fmadd: Yes, it is a mathematical model. It is purely mathematical and it models something in the real world, namely a particular way for a machine to operate. Rp (talk) 00:01, 31 May 2016 (UTC)

Turing Acceptable over Decidable Note Required & Usage of term Undecidable shouldn't be redundant across.

This Article uses the word Undecidable which is across the article, should be removed considering the advancements that Micro Processors, Micro Controllers brought to the world which is predictable by at the far most. Looks like Un-Computability jargon's can't be ridden or wire-framed by Metrics, so please accord it so.

And clear note for,- Turing Acceptable over Decidable is Required. Else should be formed as on another article, this is because Alan Turing clearly formulated how things that Turing Machines processes,- so.

Here I didn't made any debate. So Editorial Team, please accord so by. —Dev Anand Sadasivamt@lk 08:22, 13 September 2016 (UTC)

- Undecidable is standard mathematical terminology for this concept. And your reasoning for why not to do so is unclear. What do microprocessors have to do with it? —David Eppstein (talk) 17:12, 13 September 2016 (UTC)

The Crown

In this series, they introduce handshaking and the black box theory without using the actual terminology. That goes for Turing machine, as well, if I recollect correctly. I won't comment on the psychology or portrayal of his personal relationships or the attempt to depict him as Autistic or suffering from Einstein syndrome, either. — Preceding unsigned comment added by 2604:2000:E940:B400:FD04:93A2:68C0:13AD (talk) 00:52, 10 April 2017 (UTC)

Erasure vs writing a "null" symbol such as 0"

Erasure renders a square "empty of symbol" as opposed to "printed with a null" symbol such as 0. Nothingness is not the same as a symbol 0, or "null". This is why "erasure" is more primitive than "printing a 0 or a null character". The same idea exists in set theory: think of an "erased" square as an empty set. Suppose we have a sequence of 4 squares (symbolized here as [ ][ ][ ][ ] ) with 3 marks and 1 blank in them e.g. [|][ ][|][|]. If our machine works only on "blanks" and "not-blanks" (i.e. a Post-Turing machine), we could fill the non-empty squares with any mark (smudge) that the machine can detect as "not-empty" e.g. [$][ ][%][@]; the two strings of squares are equivalent. BillWvbailey (talk) 01:22, 27 September 2017 (UTC)

Sigma for Busy Beaver should not be {0} instead of {1}?

Doru001 (talk) 17:20, 25 January 2018 (UTC)

Error in the second state diagram?

In Section 4.3, this diagram shows the read/write head at the third tape cell from the left, but the transition from C to HALT should move the tape right one cell. (I wrote a Java program that simulates a Turing machine, and it does move one cell right at the end.)

Is the diagram in error?

Stevensrmiller (talk 16:17, 23 December 2015 (UTC)

- This was created by a “machine” simulated on an Excel spreadsheet, so what you see there was actually executed, but then I massaged an image of it into a drawing file: State_diagram_3_state_busy_beaver_4_.JPG . I was lucky to find this original drawing file, and some others, and I noticed that in one version of the state diagram the transition from C to HALT is 1/P,N, whereas on the diagram you’re seeing it is 1/P,R. So you are correct, to be obeying the state diagram it should HALT after moving the tape one square right. Now the question is: which is the "correct" version of the diagram, 1/P,N or 1/P,R? I can patch the JPG drawing, but I can’t patch the nice drawing above it. So it might make sense to keep the state diagram and patch the diagram to show one last move to the right. Thoughts? BillWvbailey (talk) 15:41, 24 December 2015 (UTC)

- Sheesh, they changed the upload form. We'll see if this works. BillWvbailey (talk) 15:59, 24 December 2015 (UTC)

I'm following this diagram based on the Markov Chain in the diagram, and I'm not sure if it's accurate. Can someone verify? Darkhelmet41290 (talk) 16:22, 21 February 2018 (UTC)

Error in introduction?

Gavinayling (talk) 20:31, 24 October 2019 (UTC) I don't believe the second sentence in this paragraph is incorrect, but I think its placement is misleading. It implies that the limitations on the power of mechanical computation is a problem with Turing's design, rather than being "fundamental" as the first sentence states: "Thus, Turing machines prove fundamental limitations on the power of mechanical computation.[16] While they can express arbitrary computations, their minimalistic design makes them unsuitable for computation in practice: real-world computers are based on different designs that, unlike Turing machines, use random-access memory."

Turing Machine simulator

RafelTricas (talk) 08:32, 8 February 2019 (UTC) Sorry, it is my first attempt to contribute to Wikipedia. I'm teacher and made a Turing Machine simulator in my personal web page. There are more utilities and exercises in that page. I thought it could be interesting to add it but it was reverted as spam or Conflict of interest, I'm not sure exactly why. Either, I'm not sure if this is the place where I should comment on this.

Gavinayling (talk) 20:33, 24 October 2019 (UTC) It's probably inappropriate to link to your own site on Wikipedia. If your resource is a valuable resource, it will be added by an independent party. Hence the Conflict of Interest challenge.

On the Termination options of the TM with Reject

The text states that there is a Reject Option possibility (presumably another Final State option in addition to Accept, although this is not stated explicitly). Then the following text: