Talk:Endianness/Archive 2

| This is an archive of past discussions. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |


the principle reasons

Oh, dear. This shoddiness, occurring millions of times across Wikipedia, is one of the many reasons (and typical of many of them) why this will never be regarded as a serious reference work. — Preceding unsigned comment added by 82.68.94.86 (talk) 11:45, 16 July 2013 (UTC)

Signed numbers

I think it might make sense to mention what happens for signed integers. As far as I can tell, these are normally converted to two's complement before the re-ordering of bytes takes place. This may seem rather subtle (depending on how one understands endianness), so it could be worth mentioning. At least it isn't immediately obvious what the representation should look like. Is this too technical and implementation-specific to talk about? Alexwright 00:07, 30 November 2006 (UTC)

- I deliberately avoided them :-) Mainly for two reasons: first, at least ones' complement, two's complement and sign/magnitude should be covered, the most common and the three explicitly allowed by C99. Secondly, I was afraid of a whole burst of edits dealing with the "position" of the sign bit. What I've noticed is that the issue of "bit endianness" (or even bit "position") is not well understood. However, if we are willing to accept a quite long settling-down period for the article we can begin the effort :-) —Gennaro Prota•Talk 12:48, 30 November 2006 (UTC)

- There's no "re-ordering of bytes" and there's no "conversion by two's complement" unless you are talking about a program that converts data from one format to another. Within a single system, the numbers just are what they are: bits in memory. Nothing "happens for signed integers." Every multi-byte integer has a "big end" and a "little end". Whether you choose to interpret the integer as signed or unsigned is completely independent of which end is which. 129.42.208.179 (talk) 15:40, 6 February 2014 (UTC)
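The point that signedness is only an interpretation can be checked with a minimal sketch. The helper name same_bytes is made up for illustration, and the example assumes the exact-width types from stdint.h, which are two's complement by definition:

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: returns 1 if a signed and an unsigned 32-bit object
   hold exactly the same bytes in memory, 0 otherwise.
   No byte is reordered or converted; the two objects are only compared. */
int same_bytes(int32_t s, uint32_t u)
{
    return memcmp(&s, &u, sizeof s) == 0;
}
```

On any machine, big-endian or little-endian, same_bytes(-2, 0xFFFFFFFEu) should return 1: the stored bytes are identical, and only the type used to read them differs.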

Regression

The article was cleaned up, this section has hence just become deprecated. Bertrand Blanc 10:38, 5 December 2006 (UTC)

The following section in the article is a regression compared with what we had converged on. Please remove it, or keep it accurate and at the level reached in the article as rewritten by Gennaro Prota. Thanks.

For example, consider the number 1025 (2 to the tenth power plus one) stored in a 4-byte integer:

00000000 00000000 00000100 00000001

| Address | Big-Endian representation of 1025 | Little-Endian representation of 1025 |
|---|---|---|
| 00 | 00000000 | 00000001 |
| 01 | 00000000 | 00000100 |
| 02 | 00000100 | 00000000 |
| 03 | 00000001 | 00000000 |

I could argue that the following section is OK as well:

For example, consider the number 1025 (2 to the tenth power plus one) stored in a 4-byte integer:

00000000 00000000 00000100 00000001

| Address | Big-Endian representation of 1025 | Little-Endian representation of 1025 |
|---|---|---|
| 00 | 00000000 | 00000100 |
| 01 | 00000000 | 00000001 |
| 02 | 00000100 | 00000000 |
| 03 | 00000001 | 00000000 |

Bertrand Blanc 14:09, 29 November 2006 (UTC)

I have a very simple explanation of endianness. A word occupies 32 bits, and this word may be referred to by either the address of its Least Significant Byte (Little Endian) or the address of its Most Significant Byte (Big Endian). The following C program can identify whether a machine is Big Endian or Little Endian:

    #include <stdio.h>

    int main(void) {
        int a = 1;
        /* Look at the byte stored at the lowest address of a. */
        if (*(char *)&a == 1)
            printf("The machine is Little Endian\n");
        else
            printf("The machine is Big Endian\n");
        return 0;
    }

Some programs dealing with string handling may depend on endianness and will generate different results on different machines. However, it is possible to write endian-safe programs.

I've just noticed another potential regression in section "LOLA: LowOrderLo#Address". A new concept called LOLA seems to be introduced. When I read the section, I read middle-endian (byte-swap feature within atomic element). Since middle-endian was already defined, what is the purpose of having a section about LOLA? I probably missed something; could you please clarify a bit further? Thanks. Bertrand Blanc 10:47, 1 December 2006 (UTC)

Endianness in Communication

In the Endianness article, the Endianness in Communication section says: The Internet Protocol defines a standard "big-endian" network byte order.

This information is not enough, we only know atomic units order within a word. What is atomic unit/element size? What is word size? What byte order within an atomic unit? What is bit order within bytes?

Without this information, the data UNIXUNIX can be received as UNIXUNIX or NUXINUXI or XINUXINU or IXUNIXUN (other possibilities do exist) without any clue about how to reestablish the right UNIXUNIX order.

Since computers can talk to each other through the Internet without any issue, I assume that this information is embedded somewhere, maybe in datagram headers within a low-level OSI layer.

To go into more detail, assuming the issue is known as "NUXI": the only configuration that gives this result (in big-endian) is a 2-byte atomic element size, without byte-swap (i.e. not middle-endian). The word size seems to be greater than 16 bits. The most significant bit within a byte seems to be received first. This interpretation is based on an informal assumption which may easily be undermined by another oral or informal assumption, for example "everybody knows that the least significant bit within a byte is received first" (even though this assertion is not obvious and may be wrong in some systems).

Furthermore, if the configuration is really based on a 2-byte atomic unit as "NUXI" suggests, this contradicts the sentence from the article quoted above, which explicitly states byte order and not 2-byte order.

To conclude, this is confusing. It should be investigated further so that the article provides a clear and accurate definition, explanation and wording.
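Two of the reorderings of UNIXUNIX listed above can be reproduced with a small sketch that reverses the bytes inside each unit of a given size. The function name reverse_units and its parameters are made up for illustration; this is not how any particular system or protocol is implemented:

```c
#include <stddef.h>

/* Reverse the bytes inside each consecutive unit of `unit` bytes.
   Bytes left over when `len` is not a multiple of `unit` (or when
   `unit` is 0) are copied unchanged. `out` must hold `len` bytes. */
void reverse_units(const char *in, char *out, size_t len, size_t unit)
{
    size_t i, j;
    size_t full = (unit == 0) ? 0 : len - (len % unit);

    for (i = 0; i < full; i += unit)
        for (j = 0; j < unit; j++)
            out[i + j] = in[i + unit - 1 - j];

    for (i = full; i < len; i++)
        out[i] = in[i];
}
```

With the 8 bytes of "UNIXUNIX" as input, a unit of 2 should give NUXINUXI and a unit of 4 should give XINUXINU.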

- This information is not enough, we only know atomic units order within a word.

- "What is atomic unit/element size?" -- 1 byte

- "What is word size?" -- varies depending on the field, defined by the specific protocols, text is just a sequence of bytes with no real concept of words at all.

- "What is bit order within bytes?" -- irrelevant to IP and higher level protocols, IP is defined in terms of sequences of bytes.

- Afaict NUXI was an early problem that was related more to code compatibility than to communications and was only notable because it turned into a name for endianness problems in general. Plugwash 17:58, 27 April 2007 (UTC)

- Virtually all internet protocols describe data as sequences of "octets", and an octet is defined as a number, n such that 0 <= n < 256. There are standard ways of encoding larger numbers and negative numbers from sequences of octets, and there are standard ways of decomposing octet sequences into bit sequences, but none of it has anything to do with how data extracted from those sequences are stored in the memory of any computer. The internet protocols are only concerned with data that are "in flight" from one system to another, and (less often) with data that are stored as sequences of octets on some storage medium such as a hard drive.

- They use the word "octet" because back when the internet was new, the word "byte" could mean 6, 7, 8, or 9 bits depending on where it was used. 129.42.208.179 (talk) 15:54, 6 February 2014 (UTC)
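As a minimal sketch of what "a sequence of octets" means in practice, the following encodes 16-bit and 32-bit values most significant octet first (network byte order). The names put_be16 and put_be32 are made up for illustration and are not taken from any protocol specification:

```c
#include <stdint.h>

/* Write v as two octets, most significant octet first. */
void put_be16(uint8_t out[2], uint16_t v)
{
    out[0] = (uint8_t)(v >> 8);
    out[1] = (uint8_t)(v & 0xFFu);
}

/* Write v as four octets, most significant octet first. */
void put_be32(uint8_t out[4], uint32_t v)
{
    out[0] = (uint8_t)(v >> 24);
    out[1] = (uint8_t)((v >> 16) & 0xFFu);
    out[2] = (uint8_t)((v >> 8) & 0xFFu);
    out[3] = (uint8_t)(v & 0xFFu);
}
```

Because the code works on values with shifts and masks, it produces the same octet sequence whatever the byte order of the machine it runs on.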

OPENSTEP reference removed

Just removed this from the article:

- The OPENSTEP operating system has software that swaps the bytes of integers and other C datatypes in order to preserve the correct endianness, since software running on OPENSTEP for PA-RISC is intended to be portable to OPENSTEP running on Mach/i386. <!-- is this automatic or manual and if its manual how does it differentiate it from conversions on other platforms?-->

This is irrelevant. AFAIK all major operating systems have byte swapping functions in the C library. Unless there is something that sets OPENSTEP apart, this does not belong here (as someone noted in that comment). I suspect this is just from someone reading another article about OpenStep, coming to the wrong conclusion, and polluting the Endianness article with this. – Andyluciano 17:33, 9 February 2006 (UTC)

Bit endianness in serial communication protocols

So there's been a "battle" over endianness, but what has been the bit endianness? What's the bit endianness for some of the common data link protocols: ethernet, FDDI, ATM, etc.? Cburnett 16:22, 7 March 2006 (UTC)

Just a note: If my opening argument in the section below is correct, BIT-endianness only matters if bits are passed in serial fashion; if they're passed in parallel, bit numbering order is not the same as endianness. phonetagger 19:28, 7 March 2006 (UTC)

- Endianness only ever mattered because of (a) portable programming languages (e.g., C) which let you overlay different data types on the same chunk of memory, and (b) because of programs that "serialize" data by simply copying memory bytes out to a file or a network connection. Those concerns only matter down to the byte level. At the bit level, there are no portable language constructs that let you access individual bits in a way that varies from machine to machine, and there are no persistent storage formats or network protocols that switch the order of bits within bytes when the data goes from one architecture to another. 129.42.208.179 (talk) 22:19, 6 February 2014 (UTC)

Bit endianness in parallel connections (system memory, etc)

Although I see a paragraph discussing bit endianness in the endianness main article, I have been under the impression for years that the "odd" bit numbering of PowerPC processors (and I'm sure some other processors as well, although I know of none) has nothing to do with endianness. From time to time I've heard programmers or hardware engineers say something about how PowerPC is big endian due to the reversed bit numbering (bit 0 being the MSbit instead of the LSbit), and immediately some other more experienced programmer squashes the first and says that bit numbering has nothing to do with endianness, "you silly inexperienced fool." (OK, maybe they don't say that last part, but I'm sure the first guy sulks away thinking they did.)

Certainly bit numbering has nothing to do with BYTE-endianness, but can we say that bit numbering has anything to do with BIT-endianness? My own take on that is, "no." Byte endianness is something that we have to deal with on an operational level. It affects the WAY bytes are stored and expressed within larger units (words, longwords). If we pass a data structure containing anything other than bytes (chars) from a little-endian machine to a big-endian machine, someone, somewhere along the way, has to perform byte swapping of anything in the structure larger than a byte. On the other hand, if we pass a data structure from one big-endian machine with bit 0 as the LSbit (Motorola 68K-based) to another big-endian machine with bit 0 as the MSbit (PowerPC-based running in native big-endian mode), we encounter no such conversion problem. On the 68K system, the hardware designer knew to hook up bit 0 (LSbit) of the memory array to bit 0 (LSbit) of the 68K CPU, and all was well. On the PowerPC system, the hardware designer knew to hook up bit 0 (LSbit) of the memory array to bit 31 (LSbit) of the PowerPC CPU, and all was well. Data structures passed from one to the other need not be translated.
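The difference between a numbering convention and a storage order can be sketched as follows. The two function names are made up for illustration, a 32-bit word is assumed, and n is assumed to be in the range 0 to 31:

```c
#include <stdint.h>

/* Bit n when bit 0 names the least significant bit (68K-style numbering). */
int bit_lsb0(uint32_t w, unsigned n)
{
    return (int)((w >> n) & 1u);
}

/* Bit n when bit 0 names the most significant bit (PowerPC-style numbering). */
int bit_msb0(uint32_t w, unsigned n)
{
    return (int)((w >> (31u - n)) & 1u);
}
```

Both functions read the same stored value; bit_msb0(w, n) is always equal to bit_lsb0(w, 31 - n). Only the label attached to each bit changes, which is why no data conversion is needed between the two machines.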

If bit-endianness has nothing to do with Endianness, then a stronger statement as to this conclusion should be stated. I have remained confused about Endianness because I have read in Wiki that it refers to byte order in memory, and yet my colleagues continue to use it to describe MSB vs. LSB "first" behavior in serial output, i.e., byte order. I am left learning that sometimes people refer to MSB/LSB-first as bit-endianness, but not knowing if this is correct. It seems as if it is "OK but not preferred", I'd like to see bit-endianness be eliminated if bit order within bytes has nothing to do with endianness, as I suspect. Yet we have repeatedly had problems with bit-order in serial communications for years, with an odd bunch here or there doing things incredibly LSB first. I thought maybe you could refer to Byte (capital B) endian and bit (lower case b) endian, as Endian and endian, or Byte-endian and bit-endian, but am left not knowing if people trying to impress with the use of the term endian should be impressing me or not. I have always liked, used, and been satisfied with the terms "MSB first" or "LSB first", which make sense only when referring to a serial communication protocol definition anyway. 192.77.86.2 (talk) 18:56, 8 September 2014 (UTC)R. Chadwick. Reference is experience and confusion.

I work in a company that produces custom/proprietary embedded systems, and occasionally the HW designer gets confused and connects a device (RAM, flash memory, some ASIC, etc) to a PowerPC backwards, tying bit 0 of the device to bit 0 of the PowerPC. Of course if it's RAM, that's no big deal. The processor can store the MSbit in the LSbit location with no problems, since when it fetches it again it comes from the same place it was stored. From time to time my SW group has had to write special drivers for non-RAM devices that were connected backwards, to deal with the fact that the bits are reversed. That's always fun. But that problem isn't (in my opinion) related to endianness (an ordering convention), it's related to a numbering convention.

Comments appreciated! phonetagger 19:28, 7 March 2006 (UTC)

- Also fun is that apparently some dual-endian chips (e.g. ARM) really need either horrible driver code or different wiring to run in different endiannesses in the same system. It's certainly related to endianness, but I'd say it's not really endianness, because there isn't really a first or last bit in a parallel bus, just an arbitrary name assigned to some pins. Plugwash 21:49, 7 April 2006 (UTC)
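A driver for a device wired with its data lines reversed, as described above, has to mirror the bits of every value it exchanges with the device. A minimal sketch for a single byte follows; the name reverse_bits8 is made up for illustration, and an 8-bit data path is an assumption, since the posts above do not give the bus width:

```c
#include <stdint.h>

/* Mirror the bit order of one byte: bit 0 <-> bit 7, bit 1 <-> bit 6, ... */
uint8_t reverse_bits8(uint8_t b)
{
    b = (uint8_t)(((b & 0xF0u) >> 4) | ((b & 0x0Fu) << 4)); /* swap nibbles    */
    b = (uint8_t)(((b & 0xCCu) >> 2) | ((b & 0x33u) << 2)); /* swap bit pairs  */
    b = (uint8_t)(((b & 0xAAu) >> 1) | ((b & 0x55u) << 1)); /* swap neighbours */
    return b;
}
```

Applying the function twice returns the original byte, which matches the observation that backwards-wired RAM causes no problem: the reversal on write is undone by the reversal on read.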

Endianness poetry

Many long years ago, I [kinda] made up a song when we were learning about endianness:

- One little, two little, three little-endians

- Four little, five little, six little-endians

- Seven little, eight little, nine little-endians

- Ten little-endian bytes.

Native English speakers are more likely than non-native speakers to "get it" and know the tune, but it was fun regardless. Enjoy. Tomertalk 03:05, 9 March 2006 (UTC)

Endianness in Danish dates

The article said that the ISO 8601 format is the most common in Danish; I highly doubt that. I'm removing Denmark from the list of countries that use little-endian date formats. Exelban 19:34, 10 May 2006 (UTC)

- For the record, the Official Danish date notation is not ISO 8601, but little endian: "13. Dec 2011" or "13/12/2011" . As a native of Denmark I know this from my daily life, but a good reference citation for this is the "locale" files for Denmark in GNU libc, those files for Denmark are actually labelled as being written by the official Danish Standardization organization. In contrast, ISO 8601 is big endian "2011-12-13" .

- However the section on date format endianness has since been edited out in favor of a note that this is incorrect or colloquial use of the word "endianness", so it doesn't matter anymore for this article. 90.184.187.71 (talk) 18:29, 21 June 2011 (UTC)

The World is Mostly Little Endian

Previously, this article barely even mentioned that modern PCs use little endian. Since modern PC's grew out of the Intel x86 based processors, most of the world's computers evolved from little endian architectures and continue to use little endian (even if they can technically run in other modes). That seems like rather relevant information to me, so I've added it. Sorry to the big endian guys, but the fact is, the vast majority of the machines that view this web page will be using little endian. 209.128.67.234 05:15, 24 April 2006 (UTC)

- By number of desktop PCs, yes, little endian wins for the moment because a little-endian architecture is currently dominating that marketplace. The total number of processors running in each mode is harder to guess at (a lot of embedded stuff is big endian). Plugwash

The original decision to use little-endian addressing for the Intel line of processors was made by Stanley Mazor. The first 8-bit computer, the 8008, was designed by Intel for Datapoint about 1970 and he chose little-endian for compatibility with the bit-serial Datapoint hardware. Ironically, Datapoint never used the 8008. (Ref. "Anecdotes", IEEE Annals of the History of Computing, April-June 2006; http://www.computer.org/portal/cms_docs_annals/annals/content/promo3.pdf) In an earlier interview Mazor is quoted as considering the choice a mistake. (Ref. http://silicongenesis.stanford.edu/transcripts/mazor.htm, note transcript has "endian" as "Indian".) -Wfaxon 11:32, 26 June 2006 (UTC)

Essentially all modern game consoles use PowerPC processors, which are big-endian. mrholybrain's talk 18:32, 11 March 2007 (UTC)

- Actually the PowerPC boots as big-endian but can run either way; see PowerPC#Endian-modes. --Wfaxon 15:51, 27 April 2007 (UTC)

I think your perception is somewhat distorted. For PCs, the x86 family with its little endianness dominates; but only 2% of all processors are PC processors. The other 98% are in embedded systems, which are mostly Big Endian. [1] So it's much closer to the truth if you say "The World is Mostly Big Endian".

Also, "The Internet is Big Endian" (see IP protocol). --80.66.10.152 (talk) 15:55, 20 July 2011 (UTC)

Big/little-endian is a misnomer!

First, little-endian is a lame Intel format. Second, the whole scheme is upside down. I mean, end is last. Big-endian describes a number that has the biggest part first. It should be the exact opposite, but this isn't a problem with the wiki; it is the horrible common misuse of the terms. 84.249.211.121 18:51, 22 July 2006 (UTC)

- First, calling little-endian a "lame Intel format" is silly and irrelevant. Second, the scheme is not upside-down. "Big-endian" describes numbers that have the big end first. Just as the big-endians in Gulliver's Travels ate the big end of the egg first. --Falcotron 19:30, 22 July 2006 (UTC)

- I completely agree with misnomer (but of course not with lame). They both should be named little-startian and big-startian, because they start with the least- resp. most-significant bits or bytes.

- Btw, there is an extremely strong agreement among the computer builders, that they address (and specify) a multi-byte field at the left and lowest address, and not at the right and highest address. --Nomen4Omen (talk) 16:03, 12 May 2014 (UTC)

Example programming caveat

Even without endianness problems, this example would also fail for systems with different alignments or byte sizes. Try writing "66 6f 6f 00 00 00 00 00 00 00 00 00 01 23 45 67 62 61 72 00 00 00 00 00" (64-bit alignment) and reading it back on a system with 32-bit alignment, or writing "0066 006f 006f 0000 0123 4567 0062 0061 0072 0000" (16-bit char; 18-bit is left as an exercise for the reader) and reading it back on a system with 8-bit bytes. (Exactly what you get for the latter depends on the filesystem, but it's probably going to be either "\0f\0o", 0x006f0000, "\001#Eg" with both strings unterminated, or "foo", 0x23676261, "o" and/or EOF.) Endianness is just one reason never to dump raw structs to a FILE * if you expect the result to be portable. --Falcotron 04:55, 25 July 2006 (UTC)

- Indeed. Not to talk of data placed in structs :-) I'm in favour of removing that section altogether, as it seems almost to "justify" what's simply bad programming practice. FWIW, one can't expect to be able to read the file back even by just changing the compiler switches (same compiler version, same program, same platform). Binary dumps are almost exclusively useful for temporary files, which are read back before the program terminates. And there's certainly no need to know anything about endianness to write a number and read it back. —Gennaro Prota•Talk 18:02, 10 November 2006 (UTC)

- Just a little addendum: someone may object to my removal of the section by saying, for instance, that if you want to read a binary file where, say, the first four bytes represent an image width and are specified to be little-endian you have to take that into account. That's true, but you only have to consider the *external* format. Here's the "standard" C idiom to deal with this:

    typedef ... uint32;

    width =
          ( ( (uint32)source[ 0 ] )       )
        | ( ( (uint32)source[ 1 ] ) <<  8 )
        | ( ( (uint32)source[ 2 ] ) << 16 )
        | ( ( (uint32)source[ 3 ] ) << 24 ) ;

- And that works regardless of the "internal" endianness. —Gennaro Prota•Talk 18:26, 10 November 2006 (UTC)
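For readers who want to compile the idiom, here is a minimal self-contained sketch. It assumes uint32_t from stdint.h in place of the elided typedef, and the function name read_le32 is made up for illustration:

```c
#include <stdint.h>

/* Decode a 32-bit value whose external format is little-endian:
   source[0] holds the least significant byte. */
uint32_t read_le32(const unsigned char *source)
{
    return  ((uint32_t)source[0])
          | ((uint32_t)source[1] << 8)
          | ((uint32_t)source[2] << 16)
          | ((uint32_t)source[3] << 24);
}
```

For example, the four file bytes 80 02 00 00 decode to 0x00000280, i.e. a width of 640, on both big-endian and little-endian hosts, because only the external format is consulted.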

Never-endian

Please explain more...

- Probably a joke. Came with these edits, and went with these. It's possible that it's true, but there are no references. --Shreevatsa 16:10, 5 August 2006 (UTC)

- The joke came soon after edits starting with mine that introduced information about 32-bit digital signal processors with a word-addressed memory. They have CHAR_BIT == 32 and sizeof(int) == 1. Thus, when dealing with anything from char to int, there is never any endian issue. However, such processors may still have a preferred order to store long long (64-bit) values and long (32-bit, 64-bit, or something in between) values. But the phrase has only 27 Google hits and is thus probably non-notable. --Damian Yerrick (☎) 03:26, 10 September 2006 (UTC)
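Whether a given implementation is "never-endian" for int in this sense can be checked from the standard limits.h macro CHAR_BIT and sizeof. The two helper names below are made up for illustration:

```c
#include <limits.h>
#include <stddef.h>

/* Number of bits in an object of nbytes bytes on this implementation. */
size_t bits_in(size_t nbytes)
{
    return nbytes * (size_t)CHAR_BIT;
}

/* 1 if int spans more than one byte, so that a byte order inside an int
   exists at all; 0 on a word-addressed machine where sizeof(int) == 1. */
int int_has_byte_order(void)
{
    return sizeof(int) > 1;
}
```

On a typical desktop system bits_in(sizeof(int)) is 32 and int_has_byte_order() returns 1; on the DSPs described above the bit count could be the same while int_has_byte_order() returns 0.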

The Answer

Big endian is write, little endian is wrong. (I've been debugging bitfields on an intel mac. I miss PPC) —Preceding unsigned comment added by 150.253.42.129 (talk • contribs)

- Do you have any verifiable sources to back up this claim? --Damian Yerrick (☎) 23:29, 1 November 2006 (UTC)

Origin of little endian

Little-endian cannot come from Gulliver's Travels because the term does not appear in its text. (Follow the reference to the original text to partially verify it.) The original only mentions Big-Endian and breaking eggs on the smaller end or larger end.

Gabor Braun

- Fixed. --Doradus 16:53, 7 November 2006 (UTC)

Note about Byte Layout vs. Hex format

I know that endianness may appear to be very confusing, then I apologize for this note.

A 32-bit, byte-addressable register starting at address A occupies the range [A; A+3], for example:

| 100 | 101 | 102 | 103 |
|---|---|---|---|
| 4A | 3B | 2C | 1D |

The hex representation of this byte layout is the value 0x1D2C3B4A, since numbers written in hex have the value at the least significant address on the right.
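The value 0x1D2C3B4A follows only if the byte at the lowest address is taken as least significant. A minimal sketch that builds the value under either convention is shown below; the function name value_of and its parameters are made up for illustration:

```c
#include <stddef.h>
#include <stdint.h>

/* Combine n bytes (n <= 4) stored at increasing addresses into one value.
   little != 0: the byte at the lowest address is least significant.
   little == 0: the byte at the lowest address is most significant. */
uint32_t value_of(const uint8_t *mem, size_t n, int little)
{
    uint32_t v = 0;
    size_t i;

    for (i = 0; i < n; i++) {
        /* Always consume the most significant remaining byte first. */
        size_t idx = little ? (n - 1 - i) : i;
        v = (v << 8) | mem[idx];
    }
    return v;
}
```

For the bytes 4A 3B 2C 1D at addresses 100 to 103, the little-endian reading gives 0x1D2C3B4A and the big-endian reading gives 0x4A3B2C1D.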

- No need to apologize; I'm just not sure why you felt a need for the note. Could you please elaborate?

- [snip]

- I feel the confusion is here: in your mind, "atomic unit width" and "address increment unit" are synonyms. I'm saying that there is no relationship: a system can be featured with 2-byte atomic unit width, and with 1-byte address increment unit. I mean:

- With 16-bit atomic element size:

| 100+0 | 100+1 | 100+0 | 100+1 | 100+2 | 100+3 |
| 4A3B | 2C1D | ... | 3B | 1D |

- Perhaps we can avoid confusion by eliminating any numeric address from the figures and only show the "increasing direction", as in:

| increasing addresses --> |
| 3B | 1D |

- The separation of the units is already illustrated by the borders in the second row, so the numbers above are pretty pointless (as is the choice of a particular initial address such as 100). In effect even the choice of "4A", "3B", "2C", "1D" looks pretty odd to me: what's wrong with "4", "3", "2", "1" or "A", "B", "C", "D"? —Gennaro Prota•Talk 17:24, 10 November 2006 (UTC)

- Very good tradeoff!!! Removing all addresses may be a good thing since, from here on, the address is not a discriminating feature for endianness. Let's refocus on endianness considering only data. However, the reader must read the text very thoroughly: they cannot treat it as a simple "story" without reading each word carefully. I tested this kind of high-level definition with plenty of engineers: all of them missed the point...

- I'm reluctant to settle for 1-digit hex numbers, since what is manipulated are bytes, i.e. 2-digit hex numbers.

Bertrand Blanc 23:05, 10 November 2006 (UTC)

Linguistic Universals Database

Hi guys,

is it just my connection or the links to the Linguistic Universals Database in the footnotes aren't working? —Gennaro Prota•Talk 17:28, 10 November 2006 (UTC)

- As far as I can tell, it's just down right now. The archive's front page still links to the server on port 591, which isn't working. If it were taken down for good, then the archive's front page would likely have been changed. --Damian Yerrick (☎) 17:51, 10 November 2006 (UTC)

- Thanks for checking. Yesterday I couldn't access it either. Let's see in the next days. If you have the pages in your browser cache I'd appreciate receiving a little copy-&-paste by mail :-) —Gennaro Prota•Talk 17:55, 10 November 2006 (UTC)

- PS: Today I asked them by mail; will keep you informed. —Gennaro Prota•Talk 03:08, 12 November 2006 (UTC)

- I was informed that the service would be back in some days. Indeed, I'm happy to see that it is working now. —Gennaro Prota•Talk 10:43, 5 December 2006 (UTC)

Links to clarify endianness in date formats and mail addresses

The discussion referred to in edit summary is at User talk:EdC#Clarification about your edits to the endianness entry. If to be continued, it may as well be here. –EdC 00:55, 2 January 2007 (UTC)

- Eh, if only you listened to what I wrote. Do you realize what disasters you do? Links to redirects, with links to a section which could disappear... exceptions that except for date formats are exceptions... It's because of users like you that we are all stressed here. —Gennaro Prota•Talk 01:02, 2 January 2007 (UTC)

- Is it wrong to link to redirects? I understood that it's fine. Ditto for links to sections; I'll add in linked-from comments if you think there's a danger of those sections disappearing. I'm sorry if my style is so bad that you get stressed; perhaps you should consider a break? –EdC 15:05, 2 January 2007 (UTC)

Lead section

In this edit, I added two paragraphs to the lead section, which others improved later. Gennaro Prota deleted the paragraphs in this edit with no more comment than "cleanup again... sigh :-(". This is the text in question:

- Big-endian and little-endian are the two main kinds of endianness. Big-endian is generally used on computer networks, little-endian in most computers. (Some computers used endiannesses other than those, referred to as "mixed-endian" or "middle-endian".)

- As a non-technical explanation, most spoken languages use big-endian representations for numbers: 24, for example, is pronounced twenty-four in English, meaning the big end (twenty) comes first. Some languages use little-endian, however. As an example, 24 in Danish would be fireogtyve (literally "four-and-twenty"), putting the less significant digit "four" first.

The reasons for my edit were:

- Article too abstract for too long: It's talking about "Endianness as a general concept" and other things which are only academically relevant (if at all). Realistically, if you discuss big-endian and little-endian, you have 95% of the endianness issue covered, the "general concept" being in the other 5%.

- Discussion upside-down: It's misleading that big- and little-endian are buried far down in section 4 under "Examples" (and then with little-endian as a sub-item of big-endian). That hurts clarity and is annoying to readers who expected to see the "big endian" article (which redirects).

- Lead section is not, as it should be, a summary of the article (see below).

- Lead section is pale, jargon-ridden ("integer", "addressing scheme", "transmission order"), and unspecific ("endianness is the ordering used to represent some kind of data" ...). It's also pretty short on concrete facts.

Because of these issues, I mentioned big- and little-endian in the lead section. The points that most computers are little-endian, while network order is big-endian, provide context and some facts. I also added the linguistic example to provide a clear and concrete explanation. This is in accordance with the relevant Wikipedia Guide:

- Normally, the opening paragraph summarizes the most important points of the article. It should clearly explain the subject so that the reader is prepared for the greater level of detail and the qualifications and nuances that follow. (from [2], emphasis mine)

Asking for guidance: I'd like to know what, specifically, is wrong with the reasoning above and these two paragraphs. I thought they provide value to an otherwise lifeless lead section and find it impolite that they get deleted with no more comment than "cleanup again... sigh". --193.99.145.162 14:20, 2 January 2007 (UTC)

- I'm with you on this. User:Gennaro Prota seems determined that this article should be solely about the technical byte-order meaning – which is, admittedly, the original meaning but is not the only meaning used today – and that thus all other meanings, even if they help to explain, should be stripped from the article. –EdC 15:12, 2 January 2007 (UTC)

- I think I have two main problems with the edit you refer to: first, it is the classical "local improvement" which enters like an elephant in a crystalware shop in the context of the article. That's a ubiquitous Wikipedia problem: editors rarely seem to have read the whole article; they just kick in. Secondly, it is totally rickety, both linguistically and technically; examples: a) "little-endian [is notable] because it is used internally in most computers": not only is that wrong, but what does "internally" mean? In practice you are *apparently* simplifying, by replacing things such as "storage of integers" or similar with "internally", which appears simple just because it says almost nothing; b) "Some computers which today are obscure used endiannesses other than those, referred to as "mixed-endian"...": you will agree that this could be in a Dilbert strip, not in an encyclopedia; and its addition clearly shows that you haven't read the whole article, or haven't bothered integrating everything with the "Middle endian" section.

- That said, you can change the article as much as you want. I have lost any hope that Wikipedia can ever aim at anything other than fluctuating quality (and I don't think I'll ever spend again so much time on an article as I did on this one). At least, permanent links exist: without them each time you open your browser it is a new surprise. —Gennaro Prota•Talk 01:02, 4 January 2007 (UTC)

The second sentence of the lead section ("Computer memory is organized just the same as words on the page of a book or magazine, with the first words located in the upper left corner and the last in the lower right corner.") is total nonsense: it confuses memory organisation with the way memory viewers/editors usually represent its contents. The "Endianness and hardware" section partially clarifies it, but then it is too late for many (I even think most) readers. — Preceding unsigned comment added by 195.111.2.2 (talk) 13:23, 11 July 2013 (UTC)

- The second sentence of the lead section is also Anglo-centric. Not all books are read left-to-right, top-to-bottom. Don't treat it as universally true, as you will confuse the analogy and some readers. — Preceding unsigned comment added by 2620:0:10C1:101F:A800:1FF:FE00:55B3 (talk) 15:22, 1 October 2013 (UTC)

PDP-11 Endianness: Integer vs. Floating Point

Unfortunately, the section on PDP-11 endianness is misleading. The PDP-11 stored 32-bit integers in little endian format. It was only the PDP-11 floating point data types that suffered from mixed endianness. I don't want to lose this section, but I can't see any way of clarifying it without introducing integer vs. floating point, which is not presently mentioned in this article. 68.89.149.2 20:02, 16 January 2007 (UTC)

The purpose of introducing PDP-11 was to illustrate a feature of endianness: middle-Endian. The point to keep in mind is middle-Endian, nothing else. If you need to clarify the example, feel free to do so; accuracy is much better. Readers may skip the example if they find it too obscure, but they cannot skip the basics reinforced by the middle-Endian definition.

Secondarily, what may be misleading is the introduction of the term mixed-Endian: is it a synonym of middle-Endian? Is it jargon? Is it another kind of Endianness (hence to be explained in another section)? Bertrand Blanc 16:42, 18 January 2007 (UTC)

- Specifically, I have a problem with "stored 32-bit words" in the middle-Endian sub section. To me, it implies that a 32-bit integer would be stored as described, which is untrue. The meaning of "32-bit word" is vague. It seems to me that an explanation of data types needs to be pointed to so that something true can be said, such as "32- and 64-bit floating point representations" were saved as middle-Endian. As for your other question, yes, "mixed-Endian" is a synonym of "middle-Endian." After reading the description of how one can think of the 16-bit chunks as being saved big-Endian while each pair of bytes is saved little-Endian, "mixed-Endian" seems like a very apt term. Netuser500 20:05, 18 January 2007 (UTC)

- Added "some" in the description of Middle-Endian and an explanation to the history, thereby ending my angst about the inaccuracy. Netuser500 19:26, 19 January 2007 (UTC)

- Why is adding information on floating point endianness a problem? This page *needs* some explanation about floating point. Fresheneesz 01:04, 7 May 2007 (UTC)

Could anyone clarify *which* compiler put 32-bit words that way? The V6 compiler for one doesn't because it doesn't have support for 32-bit values ... --79.240.210.243 (talk) 19:40, 21 February 2011 (UTC)
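
For reference, the middle-endian layout this thread is discussing can be sketched in C. This is only an illustration of the byte order (high 16-bit word first, each word stored low byte first); it takes no position on which PDP-11 data types or compilers actually used it, which is the open question above. The helper names `pdp_store32` and `pdp_load32` are hypothetical.

```c
#include <stdint.h>

/* Store a 32-bit value in middle-endian ("PDP-endian") order:
   the two 16-bit words are in big-endian order, but the bytes
   inside each word are in little-endian order. */
void pdp_store32(uint8_t out[4], uint32_t v)
{
    uint16_t hi = (uint16_t)(v >> 16);      /* most significant word  */
    uint16_t lo = (uint16_t)(v & 0xFFFFu);  /* least significant word */

    out[0] = (uint8_t)(hi & 0xFFu);  /* low byte of the high word  */
    out[1] = (uint8_t)(hi >> 8);     /* high byte of the high word */
    out[2] = (uint8_t)(lo & 0xFFu);  /* low byte of the low word   */
    out[3] = (uint8_t)(lo >> 8);     /* high byte of the low word  */
}

/* Inverse of pdp_store32: rebuild the value from the four bytes. */
uint32_t pdp_load32(const uint8_t in[4])
{
    uint32_t hi = (uint32_t)in[0] | ((uint32_t)in[1] << 8);
    uint32_t lo = (uint32_t)in[2] | ((uint32_t)in[3] << 8);
    return (hi << 16) | lo;
}
```

With this sketch, the value 0x0A0B0C0D ends up in memory as the bytes 0B 0A 0D 0C.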

Removed section

I was BOLD and removed the "Determining the byte order" section, on grounds that Wikipedia is not a how-to guide or a code repository. It didn't provide any immediate insight in the article topic, nor was it subject of the article itself, so I think coding exercises like this should be avoided in an encyclopedia. 84.129.152.43 11:51, 7 July 2007 (UTC)

Not just a coordinate system direction

A section was added to the article containing this:

- Litte and big endianess can be seen as differing in their coordinate system's orientation. Big endianess's atomic units and memory coordinate system increases to the right while litte endianess's increases to the left. The following chart illustrates this:

Unfortunately, this is misleading. Endianness is not simply a convention about how to write down the sequence of memory addresses ("coordinates"). The essential difference between big- and little-endianness is that in the former character and binary values go in the same direction, and in the latter they go in opposite directions.

As an example, consider the (hexadecimal) binary value 0x12345678 and the ASCII/UTF-8 character string value "XRAY". Using conventional coordinate display, with indices increasing L-to-R, we have:

| system: | Big-endian | Little-endian |
| --- | --- | --- |
| coordinates: | 0 1 2 3 | 0 1 2 3 |
| 16-bit Binary(hex): | 12345678 | 78563412 |
| UTF-8 Character: | X R A Y | X R A Y |

One can also display both systems with the coordinates running in the opposite order:

| system: | Big-endian | Little-endian |
| --- | --- | --- |
| coordinates: | 3 2 1 0 | 3 2 1 0 |
| 16-bit Binary(hex): | 78563412 | 12345678 |
| UTF-8 Character: | Y A R X | Y A R X |

Both of these displays correctly represent the same two endian situations that actually exist in real computer memories; they are just alternative views of those two endianness systems. The endian difference is real: you can see that in Big-, the conventional L-to-R order of binary and character run in the same directions (L-to-R above, R-to-L below), while in Little- the two orders run in opposite directions.

Since the added section incorrectly says that the two systems simply differ in display convention, it misleads the reader, and so I have removed it. -R. S. Shaw 18:55, 24 August 2007 (UTC)
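
The point made in the two displays above can be reproduced with a small C sketch. It assumes nothing about the host machine: the byte order is written out explicitly, and the helper names (`store_be32`, `store_le32`, `store_text4`) are made up for this illustration.

```c
#include <stdint.h>
#include <string.h>

/* Store v most significant byte first (big-endian). */
void store_be32(uint8_t out[4], uint32_t v)
{
    out[0] = (uint8_t)(v >> 24);
    out[1] = (uint8_t)(v >> 16);
    out[2] = (uint8_t)(v >> 8);
    out[3] = (uint8_t)v;
}

/* Store v least significant byte first (little-endian). */
void store_le32(uint8_t out[4], uint32_t v)
{
    out[0] = (uint8_t)v;
    out[1] = (uint8_t)(v >> 8);
    out[2] = (uint8_t)(v >> 16);
    out[3] = (uint8_t)(v >> 24);
}

/* Character data is copied in reading order in both systems. */
void store_text4(uint8_t out[4], const char *s)
{
    memcpy(out, s, 4);
}
```

Storing 0x12345678 gives 12 34 56 78 at addresses 0 to 3 in the big-endian case and 78 56 34 12 in the little-endian case, while "XRAY" gives the same four bytes either way. Only the binary value changes direction relative to the text.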

Reply: It seems that my diagram was not understood correctly: The diagram was meant as a help to map a memory access (very concrete, i.e. "write 32 bit to address 0") from registers to the memory location. Maybe the descriptive text did not clarify this. It is a concrete _method_, not an abstract definition.

Endianness does not apply to character strings. "abcd" will always be stored with 'a' at the lowest address and 'd' at the highest, independent of the machine's endianness. 24.85.180.193 (talk) 10:15, 12 January 2014 (UTC)

Endianness does indeed apply to character strings. Redefining a term to get around an inconsistency is to be reprehended. On many occasions I have loaded whole words into registers (32- or 64-bit, depending on the machine) to compare quickly, several characters at a time. On big-endian platforms this works directly. Often it gains no advantage at all on little-endian platforms. The fact that text is always big-endian on all platforms is just the point of this section. Ricercar a6 (talk) 23:04, 22 October 2014 (UTC)
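
The word-at-a-time comparison described in the last comment can be sketched as follows. This is an illustration only, not a statement on whether "endianness" is the right word for character strings. It assumes 4-character chunks, and the helper names are hypothetical. The loads are written with explicit shifts so the sketch behaves the same on any host.

```c
#include <stdint.h>

/* Load 4 characters as one word, first character most significant
   (what a big-endian machine gets from a plain 32-bit load). */
uint32_t load_be32(const unsigned char *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Load 4 characters as one word, first character least significant
   (what a little-endian machine gets from a plain 32-bit load). */
uint32_t load_le32(const unsigned char *p)
{
    return  (uint32_t)p[0]        | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Compare two 4-character chunks as integers; result is <0, 0 or >0. */
int cmp4_be(const char *a, const char *b)
{
    uint32_t x = load_be32((const unsigned char *)a);
    uint32_t y = load_be32((const unsigned char *)b);
    return (x > y) - (x < y);
}

int cmp4_le(const char *a, const char *b)
{
    uint32_t x = load_le32((const unsigned char *)a);
    uint32_t y = load_le32((const unsigned char *)b);
    return (x > y) - (x < y);
}
```

For "ABCZ" versus "ABDA", `cmp4_be` agrees with dictionary order (negative), whereas `cmp4_le` returns a positive value because the last character lands in the most significant byte.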

too strong: no standard for transferring floating point values has been made

This is too strong:

no standard for transferring floating point values has been made.

While it is true that IEEE 754-1985 doesn't pin down endianness various means of exchange have been adopted. E.g., http://www.faqs.org/rfcs/rfc1832.html and the c99 %a format provides a means of exchanging data in ASCII. --Jake 18:10, 10 September 2007 (UTC)
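
As a sketch of the second mechanism mentioned above, the C99 `%a` conversion writes a floating point value as hexadecimal text, which has no byte order at all, and `strtod` reads it back. The wrapper names below are hypothetical; only `snprintf` and `strtod` are standard library calls. The sketch assumes a C99 library and a binary `double`.

```c
#include <stdio.h>
#include <stdlib.h>

/* Write v into buf as C99 hexadecimal floating-point text.
   Returns what snprintf returns (characters needed, excluding the NUL). */
int double_to_hex_text(double v, char *buf, size_t n)
{
    return snprintf(buf, n, "%a", v);
}

/* Parse hexadecimal (or decimal) floating-point text back into a double. */
double hex_text_to_double(const char *s)
{
    return strtod(s, NULL);
}
```

Because the text form carries every mantissa bit, a value such as 0.1 should survive the round trip unchanged, regardless of the endianness of the sending and receiving machines.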

Bias?

This article seems to prefer the big endian notation. The diagrams make it seem like a natural choice, however if the diagrams had the order changed from left to right to right to left then the reverse would be true. Given that little endian is the dominant choice for a home computer (x86), does it not make sense that all of the diagrams and text should prefer it? I can appreciate that left to right reading order is the most natural choice for listing the addresses... so it might be confusing to address things in reverse.

Thoughts anyone?

- Jheriko (talk) 16:46, 5 February 2008 (UTC)

- The article should be unbiased, and it seems to me it does fairly well in that respect. The first diagram and the right-hand diagrams don't fit your comment, so you must be talking about the "in-line" illustrations on the left. First note, though, that two of them do have addresses increasing to the left; the other five use the conventional increasing-to-the-right format. The main point is that the left-to-right and top-to-bottom display is the normal way of displaying memory in increasing address order. This is well established in all left-to-right language-cultures (e.g. English). Thus even exclusively-little-endian users expect to see memory displayed in this way. (If it were not, character strings like "XRAY" would show up as "YARX"). It would be a mistake to have the article violate this basic assumption of the reader. -R. S. Shaw (talk) 21:10, 5 February 2008 (UTC)

- The section arguing that language writing orders can make little-endian seem as natural as big-endian is flawed and (I suspect) biased toward making little-endian seem as natural as big-endian. The problem is that big-endian is also in bit order. Little-endian has the weight of each byte in one direction, while the weight of the bits within each byte is in the exact opposite direction. I'm unaware of any natural language that writes things in this way. 68.99.134.118 (talk) 04:59, 10 December 2008 (UTC)

- Don't be absurd. Bits also have endianness; not all bit numbers are in whichever direction you're thinking -- bit 0 (or 1 sometimes) can be either MSB or LSB, depending on which convention you're using. Whether they agree with byte numbering is something else again. But what's that to do with writing systems that don't have such a level? Dicklyon (talk) 07:56, 10 December 2008 (UTC)

- The examples shown are for current architectures, wherein the bits are in MSB->LSB order from left to right. Unless you're talking about bit strings, which don't appear to be covered by endianness, nor even implied by endian notations. Of course, some current examples of bit endianness at the hardware level would certainly be helpful and would most assuredly bolster your argument. Perhaps an increment opcode that changes 0100_0000 to 1100_0000 68.99.134.118 (talk) 09:43, 18 January 2009 (UTC)

- A good example of bit endianness is the serial port on a PC. According to the following from page RS-232:

which shows the signal to be a conversion of an 8 bit number to a 1 bit stream in little endian format. —Preceding unsigned comment added by Wildplum69 (talk • contribs) 11:02, 18 January 2009 (UTC)
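
The conversion described in this comment, one 8-bit value turned into a stream of single bits with the least significant bit first, can be sketched in C. Start, stop and parity bits are left out, and the function names are invented for this illustration.

```c
#include <stdint.h>

/* Expand one byte into its 8 data bits in transmission order,
   least significant bit first. Each element of bits[] is 0 or 1. */
void bits_lsb_first(uint8_t byte, uint8_t bits[8])
{
    for (int i = 0; i < 8; i++) {
        bits[i] = (uint8_t)((byte >> i) & 1u);
    }
}

/* Reassemble a byte from 8 bits that arrived least significant bit first. */
uint8_t byte_from_lsb_first(const uint8_t bits[8])
{
    uint8_t byte = 0;
    for (int i = 0; i < 8; i++) {
        byte |= (uint8_t)((bits[i] & 1u) << i);
    }
    return byte;
}
```

For the byte 0x4B (binary 0100 1011) the bits leave in the order 1, 1, 0, 1, 0, 0, 1, 0, which is the reverse of how the binary number is normally written.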

Sorting

There is no mention of the sorting of aggregate data, a big advantage for big endian order. Given a sort key of arbitrary length, with big endian non-negative numbers and ASCII data, one can ignore the structure of the key. With little endian numbers, one has to know where the numbers are, or more likely convert the binary numbers to some big endian format first. Jlittlenz (talk) 00:08, 16 May 2008 (UTC)
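
The sorting argument can be illustrated with a small C sketch. It assumes a made-up 8-byte key layout (a 4-character ASCII tag followed by a 32-bit non-negative number) and hypothetical helper names. The key is then compared as an opaque byte string with `memcmp`, without knowing where the number sits.

```c
#include <stdint.h>
#include <string.h>

/* Build a key with the number stored most significant byte first. */
void make_key_be(uint8_t key[8], const char *tag, uint32_t n)
{
    memcpy(key, tag, 4);            /* 4 ASCII characters */
    key[4] = (uint8_t)(n >> 24);
    key[5] = (uint8_t)(n >> 16);
    key[6] = (uint8_t)(n >> 8);
    key[7] = (uint8_t)n;
}

/* Build the same key with the number stored least significant byte first. */
void make_key_le(uint8_t key[8], const char *tag, uint32_t n)
{
    memcpy(key, tag, 4);
    key[4] = (uint8_t)n;
    key[5] = (uint8_t)(n >> 8);
    key[6] = (uint8_t)(n >> 16);
    key[7] = (uint8_t)(n >> 24);
}

/* Compare two keys as raw bytes, ignoring their internal structure. */
int compare_keys(const uint8_t a[8], const uint8_t b[8])
{
    return memcmp(a, b, 8);
}
```

With the tag "ITEM" and the numbers 255 and 256, the big-endian keys compare in numeric order, while the little-endian keys compare the wrong way round, because the byte FF is seen before the 01 that carries more weight.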

oeps

Well known processor architectures that use the little-endian format include ...

This seems incorrect; intel x86 is little-endian, networks and those named there are big-endian.

80.79.35.18 (talk) 12:23, 6 November 2008 (UTC)

telephone numbers

It has to be true that at least lookup of the destination, and most likely some routing, can take place before the complete number has been dialled. Otherwise it would not be possible to deal with the varying length of telephone numbers. Plugwash (talk) 02:41, 27 November 2008 (UTC)

- We don't want what "must be true"... we want a citation for facts.

- FWIW, I could certainly design a system where what you describe wasn't true, but dialing worked the same, if that were explicitly required. I don't doubt incremental routing occurs, but it's not logically required by the variable-length form of numbers. LotLE×talk 07:49, 27 November 2008 (UTC)

- Here are some sources to look at. I agree that this needs to be sourced; the Strowger switches routed incrementally, in steps of one or two digits, but I'm not sure that there's any reason to connect that to the idea of "endianness". Basically, it's just a modern re-interpretation of early digits in a number as being "more significant", but that seems like a stretch, since a phone number is not a "number" in the usual sense; it wouldn't make sense to communicate it in any other way, such as binary. Dicklyon (talk) 16:11, 27 November 2008 (UTC)

phone numbers and addresses don't belong

Hello, all. I really don't think anything except for binary strings should be mentioned in this article, except as analogy. For example, the MM/DD/YY format is not really "middle-endian" in the (at all) usual sense of the word, although I of course see the conceptual similarity. Comments? 65.183.135.231 (talk) 02:42, 17 December 2008 (UTC)

- The "Other meanings" section is quite clear that such usage is nonstandard and colloquial. The Analogy section is just trying to give an intuitive impression - it could perhaps use some clarification as well. Dcoetzee 04:16, 17 December 2008 (UTC)

"BYTE" is just a convention, and other bits

The fact that a byte is eight bits and is a relatively universal unit of information is simply a de facto standard, albeit a widely observed one--not a God-given element of the universe and certainly not the "correct" way to process information. This has several implications for this article:

This article (correctly) does not limit endianness to only the type which differentiates Motorola and Intel. Some commenters seem to think they know exactly what endianness is, and that it is limited to that type. That is foolishness.

Bytes are not terribly useful as a means to store and operate on floating point numbers. Thus, Motorola Big Endians tend to ignore them when they wax dogmatic. In fact, bytes aren't terribly useful for integers or even characters either, but we get along with our shorts, longs, and unicode.

A good example of a processor that would have been horribly complicated using big endian ordering was the TI34010 GSP. It allowed addressing and operating on "pixels," each of a somewhat arbitrary number of bits. After I looked at this processor, it was quite apparent to me that big endianness is goofy. (The same kind of goofiness found in the PDP11.)

And finally, none of this discussion of endianness means anything except in the context of other conventions. These include the correspondence of numbers and collections of bits in a given processor architecture, including the definitions of arithmetic, sorting, etc.; and how numbers are displayed and interpreted in user interfaces, etc. This relationship is noted in the article, but no reference is made to the fact that endianness cannot be resolved without (at least implicitly) recognizing and reconciling ALL of these conventions.

Michael Layton 2009-01-12 —Preceding unsigned comment added by 67.128.143.3 (talk) 20:40, 12 January 2009 (UTC)

Yes I agree if people would just see things in terms of bits, everyone would see how goofy Big Endian is. Try doing an arbitrary-size bit vector or "bignum" arithmetic on bits in Big Endian, and you'll see how confusing it is. —Preceding unsigned comment added by 72.196.244.178 (talk) 22:50, 6 July 2009 (UTC)

"Clarifying analogy" section misleading

The 'Clarifying analogy' section is misleading, in my opinion. Little endian or big endian are essentially about representing a number as a 'sequence' of digits, where there are two orderings of the digits: the order in the sequence (from the first to the last) and the order of ranks (decimal or whatever) from the units to the ...zillions. On the other hand, the 'clarifying analogy' gives an example of fragmenting the word 'SONAR' and transmitting it as 'AR' followed by 'SON', which no sound protocol will normally do. A better example would be whether the number one hundred and thirty-seven should be written as 137 (as we usually do, big-endian) or 731 (as a little-endian would do, probably accompanied by pronouncing it 'seven and thirty and one hundred'). --Rlupsa (talk) 14:47, 1 April 2009 (UTC)

- The problem I have with the SONAR example and the proposed 137 example is that even though both are correct and have some value as an example, they could be improved upon. I find that the best examples are ones that illustrate real examples that one might typically encounter. Neither of the suggestions are of this nature.--Wildplum69 (talk) 07:36, 2 April 2009 (UTC)

- Can we just delete the section? A reasonable person who knew nothing of the technical side and only read that section would logically conclude "Big endian = the natural and obvious way to do things, and little endian = idiocy." I suspect that's exactly what the author of that section wanted to convey. But it's not appropriate for Wikipedia. If we must have a "clarifying analogy" it would be nice if it weren't cooked to favour one side of the issue so heavily. 216.59.249.45 (talk) 02:05, 3 June 2009 (UTC)

Where can I get more information on Atomic Elements?

When I first read this article, I was confused why the examples only showed atomic-element sizes as 8 and 16-bit, because I thought that it should have something to do with how many bits your processor is (ie, 32, 64). It would be helpful if it would be made clear that atomic element size != register size != memory addressability.

But I am curious, for a regular 32-bit intel machine, how can I figure out what the atomic element size is? What determines this? Gdw2 (talk) 18:30, 24 June 2009 (UTC)

- After anyone figures that out, please tweak this article to make it less confusing, OK?

- Do we need more prominent links to articles that go into more detail on memory addressability, register size, etc.?

- For the purposes of this article, the atomic element is the char, the smallest addressable unit of memory. (Other articles may have smaller atomic elements -- bit -- or larger atomic elements -- atomic (computer science)#Primitive atomic instructions).

- char == smallest addressable unit of memory == CHAR_BIT bits. (For 36-bit machines, CHAR_BIT is 9 bits. For Intel 8-bit, 16-bit, 32-bit, and 64-bit machines, CHAR_BIT is 8 bits, one octet. Etc.). See byte#Common uses for more details.

- register size == the size of data registers == how many bits your processor is. (36-bit machines have 36 bits in each data register. 16-bit machines have 16 bits in each data register. Etc.). See memory address#Unit of address resolution article, the Word (computer architecture) article, and its sub-articles 8-bit, 16-bit, 24-bit, the surprisingly popular 36-bit, etc.

- Usually the register size is bigger than one char. (But not always -- I'll get back to this later).

- So most CPUs have hardware to break the data in a register into char-sized pieces and store it into several units of memory, and also hardware to re-assemble data from several units of memory to fill up a register.

- The entire point of Endianness is that there is more than one way to do that breaking-apart and re-assembly, and all too often people who are comfortable and familiar with doing it any one of those ways see all other ways as "silly", "upside-down", "goofy", etc., and vice-versa.

- There are 3 internally-consistent ways of doing that breaking-apart and re-assembly, leading to 4 kinds of devices:

- hard-wired little-endian devices, which look goofy to people comfortable with big-endian

- hard-wired Big-endian devices, which look goofy to people comfortable with little-endian

- bi-endian devices, that can be configured to act either way, designed by people who realize that if they hard-wired their device to either endian, people comfortable with the other endian would irrationally call it "goofy" and refuse to buy it.

- devices like the Analog Devices SHARC, the Apollo Guidance Computer, most PIC microcontrollers, etc. where the register size is exactly equal to the smallest addressable unit of memory. This category is woefully neglected by this article.

- How can we fix this article to give an appropriate amount of coverage to all 4 kinds of devices? --DavidCary (talk) 20:28, 25 January 2013 (UTC)
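
The quantities named in this reply can be queried from a C implementation, which may help with the original question about a 32-bit Intel machine. The sketch below is a rough illustration: `CHAR_BIT` and `sizeof` are standard C, but the wrapper names are invented here, and `sizeof(unsigned int)` is only a stand-in for the register size, which C does not expose directly.

```c
#include <limits.h>
#include <stddef.h>

/* Number of bits in the smallest addressable unit (one char). */
size_t bits_per_char(void)
{
    return CHAR_BIT;
}

/* Number of addressable units that one unsigned int occupies. */
size_t chars_per_uint(void)
{
    return sizeof(unsigned int);
}

/* Byte order can only be observed when a value spans more than one
   addressable unit; returns 1 in that case and 0 otherwise. */
int byte_order_is_observable(void)
{
    return sizeof(unsigned int) > 1;
}
```

On a typical x86 compiler one would expect 8 bits per char and 4 chars per unsigned int. On a device of the fourth kind listed above, where a register is exactly one addressable unit, the last function would return 0.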

Origin of the term?

Presumably, at some time in the past, something like the following series of events occurred:

1) Some computers were developed using one form.

2) Some computers were developed using the other form.

3) Fighting broke out.

4) Someone sarcastically suggested using "big endian" and "little endian" as a commentary on how pointless it all was.

Who was that person? When did they coin the term?

Thanks! - Richfife (talk) 23:17, 17 July 2009 (UTC)

- Danny Cohen of USC's Information Science Institute, almost 30 years ago, whose "On Holy Wars and a Plea for Peace" is listed as the first item under Further reading. Read it - it's better than this article. RossPatterson (talk) 02:48, 22 July 2009 (UTC)

Good example of a bad definition

Quoting the definition (buried in the top text after some useless talk) "The usual contrast is between most versus least significant byte first, called big-endian and little-endian respectively."

The bad news is What is significant byte first? Kill the respectively!

Here's what I suggest.

Big-endian means most significant byte first; in left to right ordering in hex word AABBCC, the first byte is AA and the most significant so it's big-endian

Little endian means least significant byte first; in left to right ordering in hex word AABBCC, the first byte is AA and the least significant so it's little-endian. —Preceding unsigned comment added by 128.206.20.54 (talk) 18:32, 10 December 2009 (UTC)

Misrepresented

Big Endian would probably be better exemplified by illustrating the arrows beginning from the furthest left side -- the A in the diagram. So, in the second illustration from the top, you would have the arrow beginning from A and proceeding through B. The third illustration could show just A for Big Endian. Big Endianness would be able to process the higher order bytes (words/dwords/qwords) by default, NOT the low order bytes first as illustrated in the lower portion of the diagram on the right.

The lower portion of the diagram should look something like:

D | A

C D | A B

A B C D | A B C D

Once the first byte, word, or double-word portions of their wholes have been encountered, then you can get to the next byte, word, or double-word down the line within the defined data type. So on the top row above here, for little-endian, C-byte cannot be reached until D-byte has been passed over, B-byte not until C-byte, and A-byte not until B-byte. On the big-endian side is the inverse. B-byte is reached by passing A-byte first, C-byte through B-byte, then D-byte through C-byte. In the second row, word AB on the little-endian side is not reached until you pass over the low-order word CD, and on the big-endian side, CD, the low-order word, cannot be accessed until the high-order AB is passed. Although location orders may not technically be before or after another, they are considered ordered by how you proceed when writing programs. So the way I've shown it best explains it.

--Scott Mayers (talk) 02:33, 3 July 2010 (UTC)

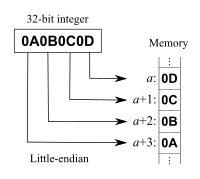

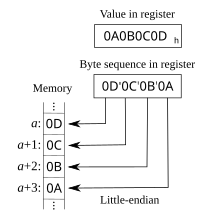

- The diagram visualizes the register<->memory relationship (writing/reading a value from/to register from/to memory). If you have a register that has the value 0x0a0b0c0d you will end up with

D | D

C D | C D

A B C D | A B C D

- To write the 0xa from the register you'd need something like:

*(char*)p = (a>>24)

- Therefore it makes perfect sense to have "d" for byte accesses and "c d" for 16-bit accesses. It is how hardware functions. — Preceding unsigned comment added by Eiselekd (talk • contribs) 11:19, 9 June 2011 (UTC)

bad redirect

Least Significant Digit redirects here, but this page fails to explain what it means. —Preceding unsigned comment added by 130.155.189.102 (talk • contribs)

- Changed to go to Significant figures#Least significant -R. S. Shaw (talk) 00:26, 22 July 2010 (UTC)

Homeopathy?

I have no idea why this section is in the Etymology section:

"The allegory has been used in other contexts, such as the 1874 article, "Why are not All Physicians Homeopathists?":

"In the eyes of the law and of public opinion Homoeopathy and Homoeopathic physicians are just as much to be respected as Allopathy and Allopathic physicians. The public cares nothing for our theories or our squabbles. It regards our contentions as quite as absurd as the war between the big-endians and the little-endians, the two great parties in Dean Swift's story, which convulsed the nation with the question whether an egg should be broken at its big or its little end. The public estimates men by their attainments and their conduct, and medical practice by its failure or its success. It instinctively and sensibly denounces as bigotry and persecution any act of intolerance of one school towards another.""

It doesn't seem at all relevant to this article or to the etymology of the term as it relates to this article. 198.204.141.208 (talk) 16:48, 12 January 2011 (UTC)

- I agree. I've removed it. -R. S. Shaw (talk) 08:27, 13 January 2011 (UTC)

Missing "advantages" section

Clearly, where there are two competing approaches (big-endian and little-endian), and neither of them died out, each one probably has its own advantages, and I think people coming to this article will be curious to compare the two formats in this respect. Here is what I came up with:

- On little-endian machines, if a small number is written in some address (and padded by zeros), reading it as 16-bit, 32-bit or 64-bit will return the same value. This is often convenient in code which needs to work for several integer lengths. This advantage is mentioned in the article.

- The big-endian approach is more natural for debugging printouts. When you look at 4 bytes of memory and see AE 23 FF 56, the 32-bit number stored there is 0xAE23FF56 as expected (if it was little endian, the number would have been the reverse - 0x56FF23AE). This advantage doesn't appear to be mentioned in the article.

I wonder if there are other advantages of both approaches worth comparing. Nyh (talk) 09:25, 6 February 2011 (UTC)

- I would also be interested in more advantages of each ("Optimization" is pretty close to an "Advantages" section, but it's short). I wonder how much content there is for such a section, though. Endianness is a canonical example of a trivial standards problem, where neither alternative seems to have much intrinsic value. 67.158.43.41 (talk) 21:51, 7 February 2011 (UTC)
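
Both advantages listed at the top of this thread can be checked with a short C sketch. It simulates the two byte orders explicitly rather than relying on the host machine, and the helper names `read_le` and `read_be` are hypothetical.

```c
#include <stdint.h>

/* Read `width` bytes (1 to 8) starting at p as a little-endian
   unsigned integer: the byte at the lowest address has the least weight. */
uint64_t read_le(const uint8_t *p, unsigned width)
{
    uint64_t v = 0;
    for (unsigned i = 0; i < width; i++) {
        v |= (uint64_t)p[i] << (8 * i);
    }
    return v;
}

/* Read `width` bytes (1 to 8) starting at p as a big-endian
   unsigned integer: the byte at the lowest address has the most weight. */
uint64_t read_be(const uint8_t *p, unsigned width)
{
    uint64_t v = 0;
    for (unsigned i = 0; i < width; i++) {
        v = (v << 8) | p[i];
    }
    return v;
}
```

If the value 42 is stored little-endian and padded with zeros to 8 bytes, `read_le` returns 42 for widths 1, 2, 4 and 8, which is the first advantage. For the memory bytes AE 23 FF 56, `read_be` with width 4 gives 0xAE23FF56, matching the dump as printed, while `read_le` gives 0x56FF23AE, which is the second.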

Messy Article - needs major cleanup

Sorry guys, I think this is one of the very messy articles on Wikipedia. It's not really easy to understand what it is about. —Preceding unsigned comment added by 84.163.141.244 (talk) 00:12, 13 February 2011 (UTC)

Added: Quick Reference - Byte Machine Example

The most common meaning of big vs little endianness is buried in the text. For some people, this is the best way to communicate it. Others (like myself) prefer a quick summary.

Hence I added a quick summary table in the introduction. It is completely redundant, but I believe it improves the article for those who want a quick reference without diving into the text. Gustnado : ►Talk 18:57, 19 March 2011 (UTC)

Endianness in natural languages

From the history section:

Spoken languages have a wide variety of organizations of numbers: the decimal number 92 is/was spoken in English as ninety-two, in German and Dutch as two and ninety and in French as four-twenty-twelve with a similar system in Danish (two-and-four-and-a-half-times-twenty).

This is quite misleading, as it gives the impression that German, Dutch and Danish are little-endian. It would be more accurate to describe them as “mixed-endian”. For instance, the number 1,234,567,890 is written “en milliard to hundrede og fir'ogtredve millioner fem hundrede og syvogtres tusind otte hundrede og halvfems” (“one billion two hundred and four-and-thirty million five hundred and seven-and-sixty thousand eight hundred and ninety”) in Danish. The words “tres” (for 60) and “halvfems” (for 90) are abbreviations of “tresindstyve” and “halvfemtesindstyve” respectively, which translate literally to “three times twenty” and “half-fifth times twenty”. Note that 50 is “halvtreds”, for “halvtredjesindstyve” (“half-third times twenty”). The forms “halvtredje” (“half-third”, 2½), “halvfjerde” (“half-fourth”, 3½) and “halvfemte” (“half-fifth”, 4½) are only used in this context, while “halvanden” (“half-second”, 1½) is only used to mean “one-and-a-half”; there is no “halvandensindstyve” for 30 (or, for that matter, “tosindstyve”, which would be the corresponding word for 40).

Norwegian previously used the Danish order, but without the base-twenty system Danish uses between 50 and 99. Officially, Norwegian went entirely big-endian in 1950 when the state-owned telecom monopoly successfully petitioned parliament to mandate big-endianness so switchboard operators could connect calls faster, but the “Danish model” survives in conservative sociolects such as mine, even among people (such as myself) who were born long after the reform. See Enogseksti eller sekstien (in Norwegian) for further details on the transition. In standard Norwegian (to the extent that such a language exists), 1,234,567,890 is written “en milliard to hundre og trettifire millioner fem hundre og sekstisyv tusen åtte hundre og nitti” (“one billion two hundred and thirtyfour million five hundred and sixtyseven thousand eight hundred and ninety”). Note the substitution of “tretti” for the Danish “tredve”, and (not included in this example) “tjue” for “tyve”. The Norwegianized form “halvannen” of the Danish “halvanden” for 1½ is still widely used.

The statement about French is also misleading, because the words “septante”, “octante” (or “huitante”) and “nonante” are used instead of “soixante-dix”, “quatre-vingts” and “quatre-vingt-dix” in some parts of France as well as in some (most?) other French-speaking countries.

DES (talk) 14:32, 23 March 2011 (UTC)

Endianness of numbers as embedded in Right-to-Left writing systems

Quoting the article: " However, numbers are written almost universally in the Hindu-Arabic numeral system, in which the most significant digits are written first in languages written left-to-right, and last in languages written right-to-left."

I'm only an amateur linguist, but I think this needs elaboration. Rendering of text containing both LtoR and RtoL writing systems (especially since Unicode (BiDi) specified it carefully) involves changes of direction between LtoR and RtoL. Hindu-Arabic numbers are, as far as I know, always rendered LtoR as in English and European languages (among other LtoR languages). When rendering numbers embedded in an RtoL writing system, it's quite likely that a code specifying the next bytes (the number) as LtoR precedes the number bytes themselves. In turn, the number is followed by a code specifying RtoL. While the original statement seems to stem from an assumption that numbers are rendered in the same direction as the text in an RtoL writing system, that's likely to be a conclusion drawn from a reasonable, but uninformed assumption.

I haven't changed the text, because I'm not a serious (nor well-informed) scholar on the topic.

It's instructive to place the text specimen in a BiDi-compatible text editor or word processor and step the cursor through the text shortly before the number, through the number itself, and continue further into the text. Note how the directions of the step movements change.

With this convention, there's no need to reverse digit sequence in the text when a number is to be rendered in an RtoL language. Unicode documents (especially concerning BiDi, (the BiDirectional algorithm)) discuss this.

Essentially irrelevant, but it's curious to see popular usage among the poorly-educated, who place the dollar sign as a suffix, and the percent sign as a prefix. The latter is correct in Arabic, for instance: ٪١٠٠, which is "100%" using Arabic characters (rendered here in LtoR sequence, though).

Regards, Nikevich (talk) 08:29, 29 April 2011 (UTC)

- I regret to inform you that the specifics of the UNICODE BiDi algorithm are a mostly irrelevant technical detail, designed to allow software written for LtoR languages such as English to be ported more easily to RtoL languages such as Arabic and Hebrew.

- Much more relevant is how text and numbers are rendered by Human beings writing directly with a pen or stylus on paper, papyrus, clay etc. In the RtoL language from which the current "Arabic numerals" were imported, digits are written in little-endian order thus facilitating addition, subtraction etc. When this notation was imported into LtoR languages such as Latin and Italian, the graphical position of the digits were retained even though writers of these languages generally work left to right, thus causing these humans to usually render numbers in big-endian order. I will leave obtaining solid references for this to others. 90.184.187.71 (talk) 18:52, 21 June 2011 (UTC)

- Perhaps this should be clarified within the text itself? As someone unfamiliar with right-to-left writing (except insofar as I practice mirror writing, but this has nothing to do with actual languages), I find the existing paragraph in the article highly confusing, because it is in no way obvious that RtoL languages essentially write numbers in the opposite order to LtoR ones. (Seen in isolation on a page, the digits appear in the same order; but because the surrounding text is written in the opposite direction, the numbers are in effect in reversed order compared to LtoR languages.)

- Since this is an English Wikipedia page, the only thing you can rely upon readers understanding is English, and since English is written left to right, this remark only serves to cause confusion about how number systems work in languages that are written right to left. — Preceding unsigned comment added by 80.0.32.169 (talk) 19:27, 5 November 2011 (UTC)

Removal of 'Endianessmap.svg'

The diagram seems to be somewhat confusing and is presently the source of two comments which are part of the discussion for this article. Can the diagram be removed without compromising the quality of the article? The examples which immediately follow the diagram seem to clarify the concept...

- Yes! 83.255.35.104 (talk) 04:12, 13 April 2011 (UTC)

- Take an example: you might have a line of C code:

int a = 0x0a0b0c0d, *p; p = &a;

- Let's say that the address &a held in p is 0. Now you want to visualize why, on a big-endian machine, a 16-bit (short) access

*(short *)p

- will result in 0x0a0b being returned while in little-endian:

*(short *)p

- will return 0x0c0d.

- Try it once and you will see that it is practical. (Note that you are reading from memory, so you need to take 2 bytes from the bottom, memory part of the diagram; if you were writing to memory, it would be the opposite.)

- For a non-programmer it might not be understandable, but for a system programmer it makes sense. And because the word endianness is primarily used in system programming, I vote for leaving the diagram in. — Preceding unsigned comment added by Eiselekd (talk • contribs) 11:06, 9 June 2011 (UTC)

- Does your diagram add anything that isn’t already there? It seems to me that there are already a lot of examples and diagrams in the article, and often just adding more diagrams to an already confusing subject doesn’t help. For instance, can’t you apply the Examples storing 0A0B0C0Dh section to your C code example? Vadmium (talk) 15:01, 9 June 2011 (UTC).

- Search the history of the article: http://en.wikipedia.org/w/index.php?title=Endianness&oldid=162226184 is the old version of the Examples storing 0A0B0C0D in memory sub-section. Next, my diagram http://en.wikipedia.org/wiki/File:Endianessmap.svg was added: http://en.wikipedia.org/w/index.php?title=Endianness&oldid=162268429 . Three weeks later, the register->memory diagrams were added at the right side of the section Examples storing 0A0B0C0D in memory: http://en.wikipedia.org/w/index.php?title=Endianness&oldid=167157677 ( http://en.wikipedia.org/wiki/File:Big-Endian.svg and http://en.wikipedia.org/wiki/File:Little-Endian.svg ). If you try to understand them, you'll see that they are based on http://en.wikipedia.org/wiki/File:Endianessmap.svg because they visualize exactly the same thing. The difference is that the "memory" in the two diagrams is vertically tilted.

- So I guess that http://en.wikipedia.org/wiki/File:Endianessmap.svg is the more basic and generalized version. Therefore, anyone who cares about making things compact (my diagram is constantly removed) should instead look at how the following Examples storing 0A0B0C0D in memory section could be shortened. — Preceding unsigned comment added by 83.233.131.177 (talk) 14:56, 10 June 2011 (UTC)

- I already understand endianness pretty thoroughly so I don’t think I have the freshest point of view. Perhaps if you want to improve the diagram it would be helpful for others to point out what is confusing. In #Not just a coordinate system direction above the complaint is that the diagram gives the impression that endianness is just about which direction you write memory addresses. Maybe the diagram could be presented with another diagram having both endian formats stored in left-to-right memory. In #Misrepresented, I don’t agree with the suggestion of storing the more-significant bytes in 8 and 16-bit accesses. But maybe this highlights the need to explain that a typical 32-bit processor would ignore the more-significant bytes, or do a zero or sign extension for 8 and 16-bit memory accesses. Vadmium (talk) 15:01, 9 June 2011 (UTC).

- The problem, I think, lies in which direction you come from. If you only try to grasp the concept of "endianness", then you're better off with a high-level explanation. However, the diagram in question is understandable from a practical point of view: I deliberately called my diagram "Method of mapping registers to memory locations" because it is something that is applicable. Let's give an example: you have an IP packet and you want to process a 16-bit part of it. You know the offset. Now you want to understand why, on a little-endian machine, you get a certain value in your registers when accessing 16 bits. What you then do is visualize the memory and the register in your mind. When you visualize the memory, you are used to the Cartesian coordinate system growing to the right. When visualizing the registers, you are also used to the Cartesian coordinate system growing to the right. On a little-endian system, however, your mental picture will then not match the result your debugger shows. To fill the gap you need to swap the bytes in your mind at each step, without really understanding why, like an abstract operation. However, when you use a coordinate system that grows to the left, your mental mapping will be in sync with the result that you observe in reality (the register view of your debugger).

- The crucial point in the steps above is that somebody who doesn't go through this practice will not understand the sense in it and will write #Not just a coordinate system direction.

Removal of 'Endianessmap.svg' by 83.255.34.193

I admit it. The addition of the C example code in the subsection Diagram for mapping registers to memory locations was a bit overdone and might be moved to the discussion section (http://en.wikipedia.org/w/index.php?title=Endianness&oldid=433610778). It is a reaction to 83.255.34.193's removal comment:

That little table is very very cute, but totally misleading... A little-endian machine does NOT store text backwards in memory - little-endianness is about storing the individually addressable parts of an INTEGER in carry-propagation order.

and also #Misrepresented that wants a certain order in the bytes shown. They always claim "what is", it is like this, it is like that. Now you cannot argue against c-code that is running on a real machine. I would say that this is "what is".

I'd like to ask for a review of the final paragraph of the cut-out section below:

In the 2 outputs from x86 and SPARC, the symmetry of the output (that is: the register printout equals the memory printout) is only achieved by having the coordinate system of register and memory grow to the left on little-endian x86 and grow to the right on big-endian SPARC. Normally one is used to the Cartesian coordinate system when visualizing both memory and registers. For a little-endian x86 program this leads to a disturbing disparity between the output observed on a system and the expected result - a counterintuitive swap operation has to be inserted.

My question: does anyone understand the paragraph? Hasn't any of you worked in system programming and struggled to understand the byte-swapping nature of a program's output on x86? Now you might ask "why system programming"; well, I guess that endianness is primarily a system programming concept.

Another comment on a comment:

Please! "Cartesian coordinate system"...? Come on... Do you really believe that ANYONE who hasn't already grasped the simple concept of endianness would benefit from that extremely far-fetched and overloaded "explanation" and C code...

I wonder why 83.255.34.193 thinks that there is no coordinate system involved: [[3]] grows Cartesian from left to right. The units are individual bits. [[4]] grows Cartesian from left to right. The units are the MII nibbles. You are used to Cartesian because Westerners write from left to right. If you want to print out the content of memory, you write it out from left to right. However, the little-endian system is opposite to Cartesian in nature and grows from right to left. Otherwise you need to byte-swap the output. — Preceding unsigned comment added by 83.233.131.177 (talk) 06:53, 11 June 2011 (UTC)

Here is the c-code that I cut out of the main article again:

Live example on a little-endian x86 machine. The printout is done with addresses (coordinate system) growing to the left [ ... 3 2 1 0 ]:

#include <stdio.h>

/* x86, GCC inline assembly (AT&T syntax).
   The 16/32-bit reads below type-pun through casts; build with
   -fno-strict-aliasing to be safe. */
int main(void) {
    unsigned int ra = 0x0a0b0c0d;
    unsigned char *rp = (unsigned char *)&ra;
    unsigned int wa = 0x0a0b0c0d, wd = 0;
    unsigned char *wp = (unsigned char *)&wd;

    printf("(Memory address growing left) 3 2 1 0 \n");
    /* x86: store 8 bits of <wa(0x0a0b0c[0d])> at address <wp(=&wd)>;
       "q" requests a byte-addressable register, "memory" tells the compiler wd changes */
    __asm__ __volatile__("movb %1,(%0)\n\t" : : "a" ((unsigned char *)wp), "q" ((unsigned char)wa) : "memory");
    /* x86: show with coordinates growing to left: ... 3 2 1 0| */
    printf("register(0x0a0b0c[0d])->memory 8 bit: %02x\n", wp[0]);
    /* x86: store 16 bits of <wa(0x0a0b[0c0d])> at address <wp(=&wd)> */
    __asm__ __volatile__("movw %1,(%0)\n\t" : : "a" ((unsigned short *)wp), "r" ((unsigned short)wa) : "memory");
    /* x86: show with coordinates growing to left: ... 3 2 1 0| */
    printf("register(0x0a0b[0c0d])->memory 16 bit: %02x %02x\n", wp[1], wp[0]);
    /* x86: store 32 bits of <wa(0x[0a0b0c0d])> at address <wp(=&wd)> */
    __asm__ __volatile__("movl %1,(%0)\n\t" : : "a" ((unsigned int *)wp), "r" ((unsigned int)wa) : "memory");
    /* x86: show with coordinates growing to left: ... 3 2 1 0| */
    printf("register(0x[0a0b0c0d])->memory 32 bit: %02x %02x %02x %02x\n", wp[3], wp[2], wp[1], wp[0]);

    printf(" 3 2 1 0 (Memory address growing left)\n");
    printf("memory( 0a 0b 0c[0d])->register 8 bit: %02x\n", rp[0]);
    printf("memory( 0a 0b[0c 0d])->register 16 bit: %04x\n", *(unsigned short *)rp);
    printf("memory([0a 0b 0c 0d])->register 32 bit: %08x\n", *(unsigned int *)rp);
    return 0;
}

The output of the program when run on an x86 machine is:

(Memory address growing left) 3 2 1 0

register(0x0a0b0c[0d])->memory 8 bit: 0d

register(0x0a0b[0c0d])->memory 16 bit: 0c 0d

register(0x[0a0b0c0d])->memory 32 bit: 0a 0b 0c 0d

3 2 1 0 (Memory address growing left)

memory( 0a 0b 0c[0d])->register 8 bit: 0d

memory( 0a 0b[0c 0d])->register 16 bit: 0c0d

memory([0a 0b 0c 0d])->register 32 bit: 0a0b0c0d

Live example on a big-endian SPARC machine. The printout is done with addresses (coordinate system) growing to the right [ 0 1 2 3 ... ]:

#include <stdio.h>

/* sparc, GCC inline assembly.
   The 16/32-bit reads below type-pun through casts; build with
   -fno-strict-aliasing to be safe. */
int main(void) {
    unsigned int ra = 0x0a0b0c0d;
    unsigned char *rp = (unsigned char *)&ra;
    unsigned int wa = 0x0a0b0c0d, wd = 0;
    unsigned char *wp = (unsigned char *)&wd;

    printf("(Memory address growing right) 0 1 2 3 \n");
    /* sparc: store 8 bits of <wa(0x0a0b0c[0d])> at address <wp(=&wd)>;
       "memory" tells the compiler that wd changes */
    __asm__ __volatile__("stub %1,[%0]\n\t" : : "r" (wp), "r" (wa) : "memory");
    /* sparc: show with coordinates growing to right: | 0 1 2 3 ... */
    printf("register(0x0a0b0c[0d])->memory 8 bit: %02x\n", wp[0]);
    /* sparc: store 16 bits of <wa(0x0a0b[0c0d])> at address <wp(=&wd)> */
    __asm__ __volatile__("sth %1,[%0]\n\t" : : "r" (wp), "r" (wa) : "memory");
    /* sparc: show with coordinates growing to right: | 0 1 2 3 ... */
    printf("register(0x0a0b[0c0d])->memory 16 bit: %02x %02x\n", wp[0], wp[1]);
    /* sparc: store 32 bits of <wa(0x[0a0b0c0d])> at address <wp(=&wd)> */
    __asm__ __volatile__("st %1,[%0]\n\t" : : "r" (wp), "r" (wa) : "memory");
    /* sparc: show with coordinates growing to right: | 0 1 2 3 ... */
    printf("register(0x[0a0b0c0d])->memory 32 bit: %02x %02x %02x %02x\n", wp[0], wp[1], wp[2], wp[3]);

    printf(" 0 1 2 3 (Memory address growing right)\n");
    printf("memory([0a]0b 0c 0d )->register 8 bit: %02x\n", rp[0]);
    printf("memory([0a 0b]0c 0d )->register 16 bit: %04x\n", *(unsigned short *)rp);
    printf("memory([0a 0b 0c 0d])->register 32 bit: %08x\n", *(unsigned int *)rp);
    return 0;
}

The output of the program when run on a SPARC machine is:

(Memory address growing right) 0 1 2 3

register(0x0a0b0c[0d])->memory 8 bit: 0d

register(0x0a0b[0c0d])->memory 16 bit: 0c 0d

register(0x[0a0b0c0d])->memory 32 bit: 0a 0b 0c 0d

0 1 2 3 (Memory address growing right)

memory([0a]0b 0c 0d )->register 8 bit: 0a

memory([0a 0b]0c 0d )->register 16 bit: 0a0b

memory([0a 0b 0c 0d])->register 32 bit: 0a0b0c0d